Slide 1: Taurida National V. I. Vernadsky University, e-Lecture, March 18, 2014

Association Measures

Discovering idioms in a sea of words

Jorge Baptista

University of Algarve, Faro, Portugal

Spoken Language Lab, INESC ID Lisboa, Portugal

Slide 2: a definition of idiom

multiword expression: composed of two or more words

the meaning of the overall sequence cannot be calculated from the individual meanings of the component words when used separately

this difference in meaning is related to different formal properties: combinatorial, morphological, ...

different types of idioms may show different properties

Slide 3: formal types of idioms

noun phrases

noun + adjective (in English, adjective + noun): round table, yellow fever, good practices, ...

noun + preposition + noun: Portuguese man o' war (Physalia physalis), ...

prepositional phrases: in a rush, on the spur of the moment, by accident, on purpose

verb + complement: kick the bucket, hold Poss0 tongue, ...

Slide 4: Dictionaries

are always incomplete...

new words are created every day, to name new concepts, ideas, tools, methods, objects...

old words (from every age) are reshaped, either in form or in use, to function as new words, mostly by gradually changing their environments

no dictionary can ever be up-to-date

but for natural language processing (NLP), the lexicon is key to the performance of many tasks

so: we need ways of acquiring lexicon (semi-)automatically, to

help lexicographers describe their rapidly evolving object

build resources for NLP

Slide 5: Corpus linguistics

The low cost and easy availability of large corpora (several hundred million words) make it possible to study linguistic phenomena such as idioms, including their acquisition for lexicographic/lexicological purposes

this requires appropriate tools for corpus processing: basically, counting words and word combinations

more sophisticated processing (POS taggers and parsers) can help improve the results for certain types of idioms (and is essential for some of them)
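The basic counting step can be sketched in a few lines of Python. This is only an illustration: the function name and the toy sentence are invented here, and a real corpus would first need proper tokenization (and, ideally, lemmatization).

```python
from collections import Counter

def count_unigrams_and_bigrams(tokens):
    """Return (word counts, adjacent-pair counts, N) for a list of tokens."""
    unigrams = Counter(tokens)
    # Pair each token with its successor to obtain the adjacent word combinations.
    bigrams = Counter(zip(tokens, tokens[1:]))
    return unigrams, bigrams, len(tokens)

# Toy sentence, invented for illustration only.
tokens = "the round table met at the round table".split()
unigrams, bigrams, n = count_unigrams_and_bigrams(tokens)
print(unigrams["round"], bigrams[("round", "table")], n)
```

The three values returned (word counts, bigram counts and N) are the only inputs required by the association measures discussed in the rest of the lecture.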

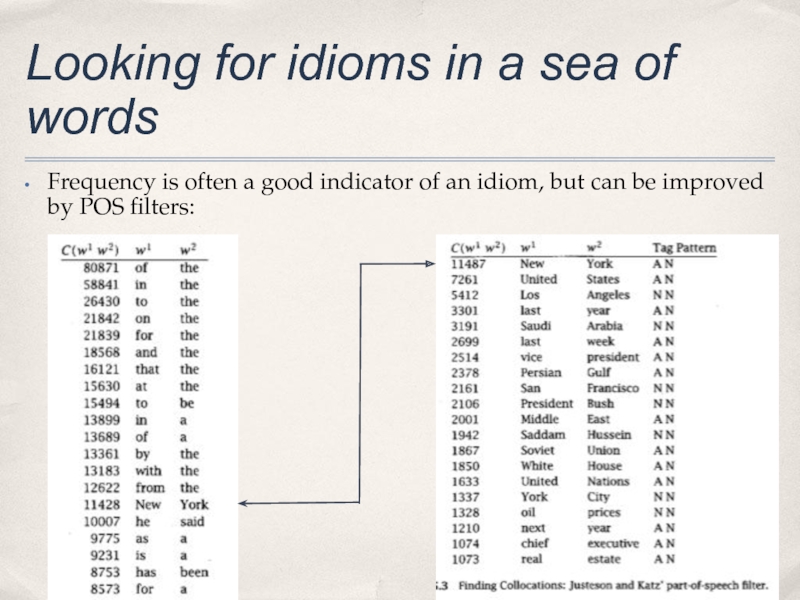

Slide 6: Looking for idioms in a sea of words

Frequency is often a good indicator of an idiom, but results can be improved by POS filters:

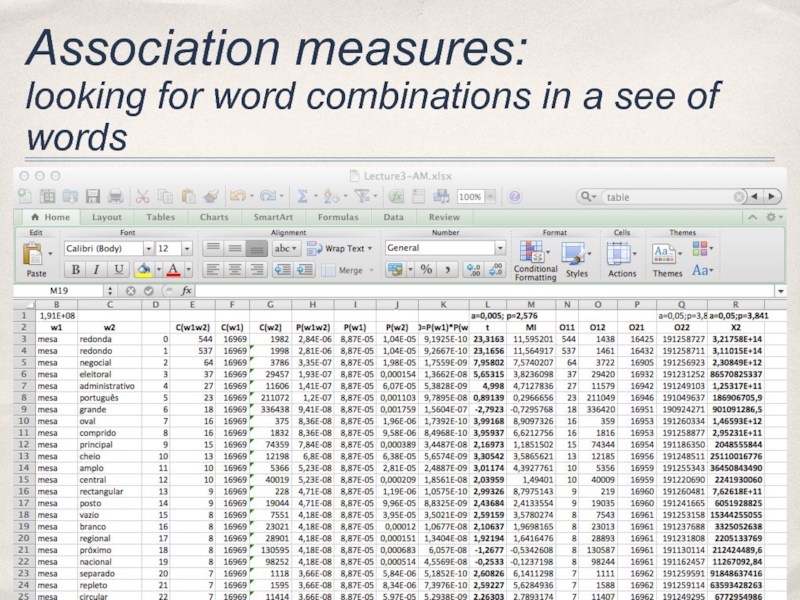
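A POS filter of this kind might look as follows. This is a minimal sketch: the tag names (`ADJ`, `NOUN`, ...), the pattern set and the hand-tagged input are assumptions made for the example, not the tagset or filter used in the lecture.

```python
from collections import Counter

# Hypothetical tag patterns for candidate noun phrases (English word order).
PATTERNS = {("ADJ", "NOUN"), ("NOUN", "NOUN")}

def filtered_bigram_counts(tagged_tokens, patterns=PATTERNS):
    """Count adjacent word pairs whose POS tags match one of the patterns."""
    counts = Counter()
    for (w1, t1), (w2, t2) in zip(tagged_tokens, tagged_tokens[1:]):
        if (t1, t2) in patterns:
            counts[(w1, w2)] += 1
    return counts

# Hand-tagged toy input, invented for illustration.
tagged = [("the", "DET"), ("round", "ADJ"), ("table", "NOUN"),
          ("of", "ADP"), ("the", "DET"), ("yellow", "ADJ"), ("fever", "NOUN")]
candidates = filtered_bigram_counts(tagged)
print(candidates.most_common())
```

Frequent but uninteresting pairs such as "of the" never reach the candidate list, because their tag sequence matches no pattern.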

Slide 7: Association measures: looking for word combinations in a sea of words

Association Measures (AM) are statistical methods that help linguists find statistically relevant word combinations that may/should be included in the lexicon as idioms, collocations, etc.

the most important AMs for our task are based on the concept of hypothesis testing:

Student’s t-test (t)

chi-square (χ²)

another important measure, based on information theory, is the (pointwise) Mutual Information (PMI or MI)

in the case of the relative position of words in word combinations, the Dice coefficient (D) is also important (possibly language-dependent)
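The Dice coefficient is not worked out in the slides. Assuming the usual definition, D = 2·C(w1w2) / (C(w1) + C(w2)), a minimal sketch is given below; the counts are those reported later in the lecture for mesa redonda ‘round table’.

```python
def dice(c1, c2, c12):
    """Dice coefficient from the counts of w1, w2 and the bigram w1w2.

    Assumes the usual definition: 2 * C(w1w2) / (C(w1) + C(w2)).
    """
    return 2 * c12 / (c1 + c2)

# Counts for mesa, redondo and mesa redonda in CETEMPúblico (from the lecture).
d = dice(16969, 1982, 544)
print(round(d, 4))
```

D ranges from 0 (the two words never co-occur) to 1 (they occur only together).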

Slide 9: Hypothesis testing

It is a simple statistical concept that can be formulated as a question:

Given a pair of events, how likely is it that they co-occurred for some reason, rather than by chance alone?

In our case, let’s think of ‘events’ as words and of ‘pairs of events’ as word combinations, as in the case of idioms

‘likelihood’ involves the concept of probability

the concept of ‘chance’ is linked to the hypothesis-testing setting, where one of the hypotheses is the null hypothesis: the two events co-occur by mere chance

CAVEAT: finding that there must be a reason (and not just mere chance) for a pair of events to co-occur does not mean that there is a causal relation between them (problem of the tertium aliquid)

Slide 10: Hypothesis testing (continued)

The strategy of hypothesis testing:

If two events co-occur by chance alone: null hypothesis

Else, some cause links the events

Though the cause may be elusive, we can discard the null hypothesis and say that, at least, the two events did not co-occur by chance

Slide 11: Hypothesis testing (continued)

Defining a null hypothesis (H0):

a pair of words, or bigram (w1w2), is a chance event if its observed probability does not differ significantly from the probability expected if w1 and w2 were independent

What is the probability of a word? or of a bigram?

P(w1) = Count(w1)/N

P(w2) = Count(w2)/N

P(w1w2) = Count(w1w2)/N

where N is the total number of words in a given corpus
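These relative-frequency estimates translate directly into code. The sketch below uses invented counts (20, 10, 5 and N = 1000) so that the results are easy to check by hand; the function name is hypothetical.

```python
def probabilities(c1, c2, c12, n):
    """Relative-frequency estimates of P(w1), P(w2) and P(w1w2)."""
    return c1 / n, c2 / n, c12 / n

# Invented counts, chosen only to keep the arithmetic simple.
p1, p2, p12 = probabilities(c1=20, c2=10, c12=5, n=1000)
print(p1, p2, p12)
```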

Slide 12: Hypothesis testing (continued)

So, how do we calculate H0, given a bigram w1w2?

H0 = P(w1)*P(w2) = C(w1)/N * C(w2)/N

Then what?

Then we need a test of statistical relevance to compare H0 with the actual probability of the bigram P(w1w2).

To do this, we introduce the Student t-test

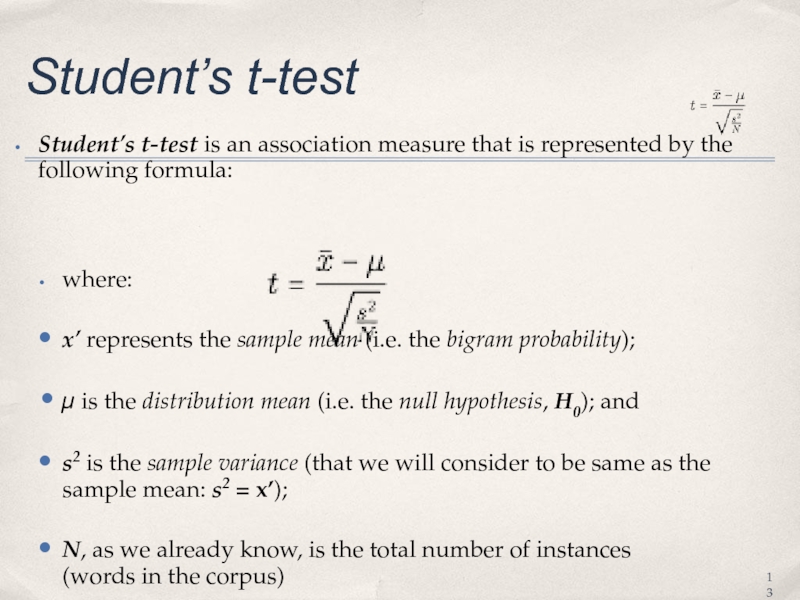

Slide 13: Student’s t-test

Student’s t-test is an association measure that is represented by the following formula:

where:

x’ represents the sample mean (i.e. the bigram probability);

μ is the distribution mean (i.e. the null hypothesis, H0); and

s2 is the sample variance (which we will consider to be the same as the sample mean: s2 = x’);

N, as we already know, is the total number of instances (words in the corpus)

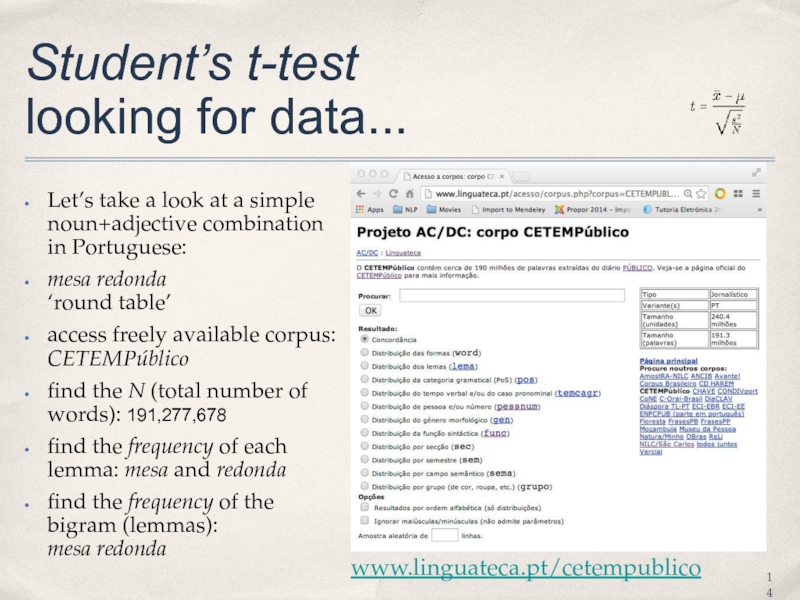
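The formula itself survives only as an image. Assuming it is the standard one-sample t statistic, which agrees with the symbol definitions on this slide and with the worked example later in the lecture, it can be written as follows (with x̄ standing for the slide's x’):

$$
t = \frac{\bar{x} - \mu}{\sqrt{s^{2} / N}}
$$

With the simplification s² = x̄ adopted on this slide, this becomes:

$$
t = \frac{\bar{x} - \mu}{\sqrt{\bar{x} / N}}
$$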

Slide 14: Student’s t-test

looking for data...

Let’s take a look at a simple noun+adjective combination in Portuguese: mesa redonda ‘round table’

access a freely available corpus: CETEMPúblico

find N (the total number of words): 191,277,678

find the frequency of each lemma: mesa and redondo

find the frequency of the bigram (lemmas): mesa redonda

www.linguateca.pt/cetempublico

Slide 15: Student’s t-test

an example

Now we have all the data we need:

Count(mesa) ‘table’: 16,969

Count(redondo) ‘round’: 1,982

Count(mesa redonda) ‘round table’: 544

Total number of words, N: 191,277,678 (approx. 191.3 M words)

Let’s calculate the probabilities of w1, w2 and w1w2:

P(mesa) ‘table’: 16,969/191,277,678 = 0.00008871395856 ≈ 8.87e-5

P(redondo) ‘round’: 1,982/191,277,678 = 0.0000103618991 ≈ 1.04e-5

P(mesa redonda) ‘round table’: 544/191,277,678 = 0.00000284403285 ≈ 0.28e-5

Slide 16: Student’s t-test

an example

Based on the previous calculation, we can now determine the null hypothesis (H0)

H0 = P(w1)*P(w2) = 8.87e-5 * 1.04e-5 ≈ 9.19e-10 = 0.0000919e-5 (computed from the unrounded probabilities)

Finally, we apply the t-test formula:

t = (x’-μ)/√(x’/N)

= (0.2844e-5 - 0.0000919e-5)/√(0.2844e-5/191,277,678)

= 0.2843e-5/1.219e-7 ≈ 23.32
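The same calculation can be reproduced in Python from the raw counts, which avoids the rounding of intermediate values. This is a sketch under the lecture's simplifying assumption that the sample variance equals the sample mean; the function name is invented.

```python
import math

def t_score(c1, c2, c12, n):
    """t-score of a bigram from the counts of w1, w2, w1w2 and corpus size N."""
    x_bar = c12 / n             # observed bigram probability (sample mean)
    mu = (c1 / n) * (c2 / n)    # probability expected under H0 (independence)
    # Sample variance approximated by the sample mean, as in the lecture.
    return (x_bar - mu) / math.sqrt(x_bar / n)

# Counts for mesa, redondo and mesa redonda in CETEMPúblico (from the lecture).
t = t_score(16969, 1982, 544, 191277678)
print(round(t, 2))
```

Working from the counts gives t ≈ 23.32, the value reached when the probabilities are not rounded beforehand.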

Slide 17: Student’s t-test

an example

Now, we look up the value in a table of t-test relevance thresholds:

The significance level (degree of certainty/relevance, usually represented by α ‘alpha’) used in NLP is α=0.005, because we deal with large datasets, while in the human/social sciences it is usually higher, α=0.05;

The threshold (critical value) is represented here by p.

For a significance level α=0.005, the t-test threshold is p=2.576

if t < p, then we cannot discard the null hypothesis at this level: the combination may be due to chance

if t > p, then we are 99.5% sure that we can discard the null hypothesis and the combination is not due to chance
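Applied to a list of candidates, the decision rule amounts to a simple filter. In this sketch the critical value is the one quoted above; the first score is the lecture's own example and the second is invented purely to show a rejected candidate.

```python
# Critical value quoted in the lecture for alpha = 0.005.
T_CRITICAL = 2.576

def significant_candidates(t_scores, critical=T_CRITICAL):
    """Keep the bigrams whose t-score exceeds the critical value, best first."""
    kept = {bigram: t for bigram, t in t_scores.items() if t > critical}
    return sorted(kept, key=kept.get, reverse=True)

# First score: the lecture's example. Second score: invented for illustration.
scores = {("mesa", "redonda"): 23.32, ("mesa", "nova"): 0.84}
print(significant_candidates(scores))
```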

Slide 18: Student’s t-test

an example

So, in our example, t(mesa redonda) = 23.32, hence t > p; therefore, we can reject the null hypothesis and say that this word combination is not due to chance

Notice that the linguistic status (a compound noun) cannot be determined, just that the combination is not random.

Slide 19: Student’s t-test

conclusion

The Student t-test is an easy way to determine whether two events (words) co-occur by chance alone, or whether their co-occurrence is statistically relevant

however, this AM ignores the marginal distribution of w1 and w2, that is, the cases when they do not co-occur.

A more sophisticated AM is the chi-square (χ²)

Slide 20: Chi-square (χ²)

The Student t-test assumes a normal distribution of the probabilities of the events, while the chi-square does not

it compares the observed frequencies of the events with the frequencies expected if the events were independent

if the difference is larger than the statistical relevance threshold, then we can reject the null hypothesis of independence of events

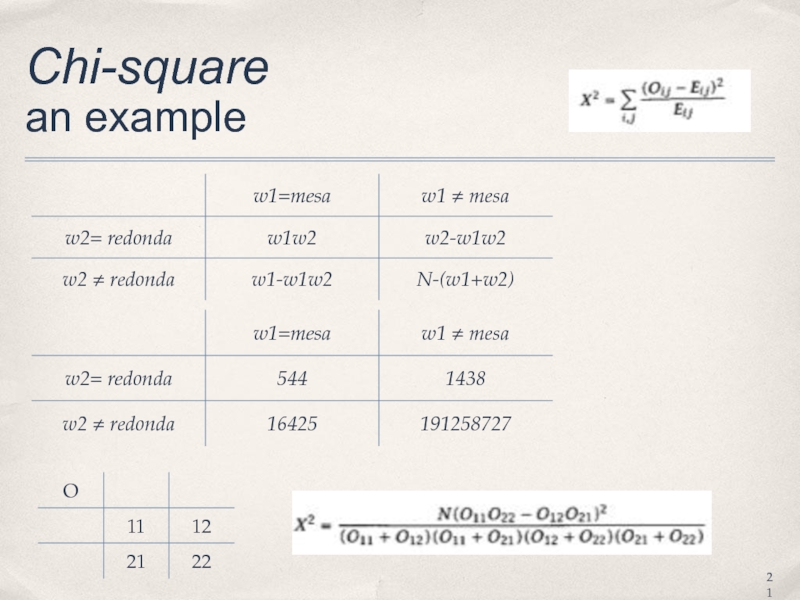
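As a rough illustration, the sketch below computes Pearson's chi-square for a 2×2 contingency table built from the same counts as the t-test example. It uses the standard shortcut formula for 2×2 tables, which may be laid out differently from the formula on the slide, and it assumes that the number of bigrams can be approximated by N.

```python
def chi_square_2x2(c1, c2, c12, n):
    """Pearson's chi-square for the 2x2 table of a bigram w1w2."""
    o11 = c12                  # w1 followed by w2
    o12 = c1 - c12             # w1 followed by some other word
    o21 = c2 - c12             # some other word followed by w2
    o22 = n - c1 - c2 + c12    # neither w1 first nor w2 second
    numerator = n * (o11 * o22 - o12 * o21) ** 2
    denominator = (o11 + o12) * (o11 + o21) * (o12 + o22) * (o21 + o22)
    return numerator / denominator

# Counts for mesa, redondo and mesa redonda in CETEMPúblico (from the lecture).
chi2 = chi_square_2x2(16969, 1982, 544, 191277678)
print(chi2)
```

For a 2×2 table (one degree of freedom) the critical value at α=0.05 is 3.841, and at α=0.005 it is 7.879; the value obtained for mesa redonda is far above both, so independence is rejected here as well.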

Slide 22: Chi-square

an example

An easier way to do these calculations is to use a spreadsheet:

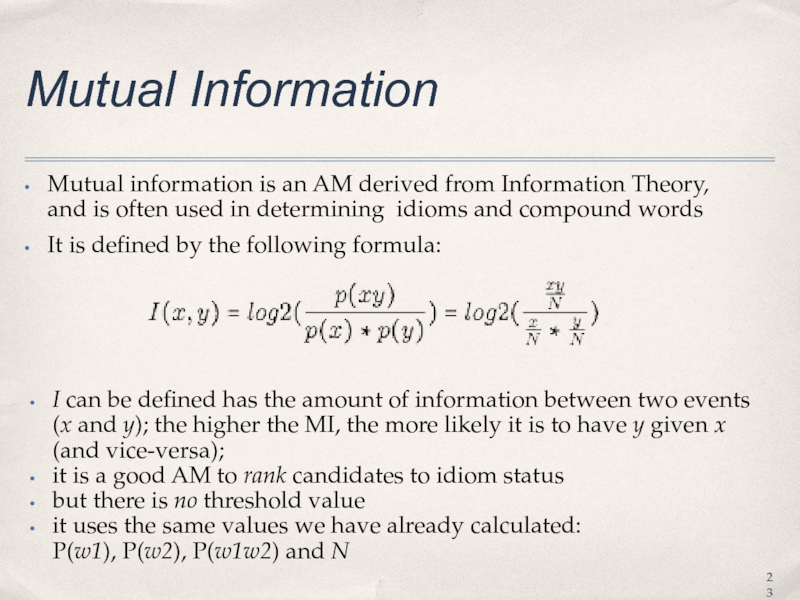

Slide 23: Mutual Information

Mutual information is an AM derived from Information Theory, and is often used in identifying idioms and compound words

It is defined by the following formula:

I can be defined as the amount of information shared between two events (x and y); the higher the MI, the more likely it is to have y given x (and vice-versa);

it is a good AM to rank candidates for idiom status

but there is no threshold value

it uses the same values we have already calculated: P(w1), P(w2), P(w1w2) and N

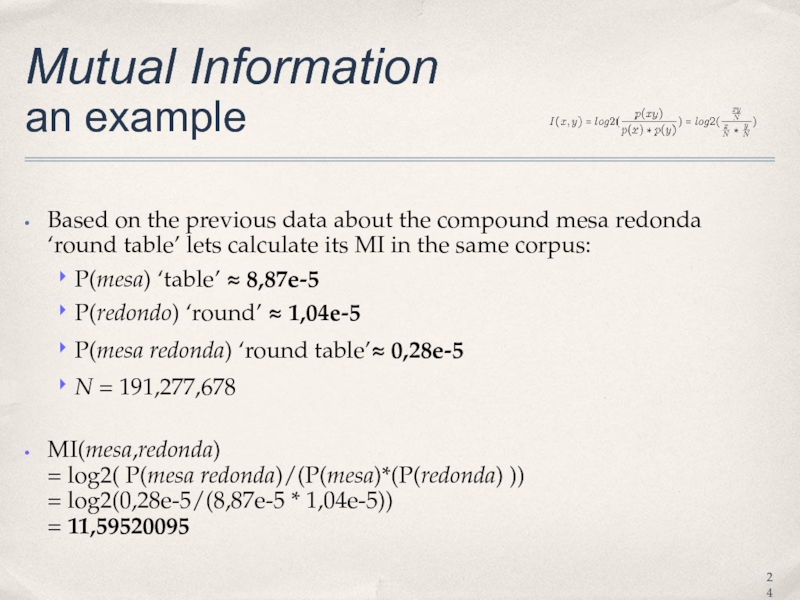

Slide 24: Mutual Information

an example

Based on the previous data about the compound mesa redonda ‘round table’, let’s calculate its MI in the same corpus:

P(mesa) ‘table’ ≈ 8.87e-5

P(redondo) ‘round’ ≈ 1.04e-5

P(mesa redonda) ‘round table’ ≈ 0.28e-5

N = 191,277,678

MI(mesa, redonda)

= log2(P(mesa redonda)/(P(mesa)*P(redondo)))

= log2(0.28e-5/(8.87e-5 * 1.04e-5))

≈ 11.60 (computed from the unrounded probabilities)
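The MI calculation can likewise be reproduced from the raw counts. This is a minimal sketch with an invented function name; since MI has no threshold, its typical use is to sort candidates by score.

```python
import math

def pmi(c1, c2, c12, n):
    """Pointwise mutual information (base 2) of a bigram, from raw counts."""
    p1, p2, p12 = c1 / n, c2 / n, c12 / n
    return math.log2(p12 / (p1 * p2))

# Counts for mesa, redondo and mesa redonda in CETEMPúblico (from the lecture).
mi = pmi(16969, 1982, 544, 191277678)
print(round(mi, 2))
```

An MI of about 11.6 bits means that the bigram occurs roughly 3,000 times more often than expected if the two words were independent.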

Slide 25: Association measures: looking for word combinations in a sea of words

Slide 26: Association measures: looking for word combinations in a sea of words

other examples:

{V,Adv} combinations: marry civilly

{V, Ci} idioms: eat dirt

...

navegar é preciso/viver não é preciso

(Fernando Pessoa, 1888-1935)

Slide 27: references

Church, K. & P. Hanks (1990). Word association norms, mutual information, and lexicography. Computational Linguistics, 16(1):22–29.

Manning, C. & H. Schütze (1999). Foundations of Statistical Natural Language Processing. Cambridge, Massachusetts: MIT Press.

Pecina, P. & P. Schlesinger (2006). Combining Association Measures for Collocation Extraction. In Proceedings of ACL 2006, pp. 652.