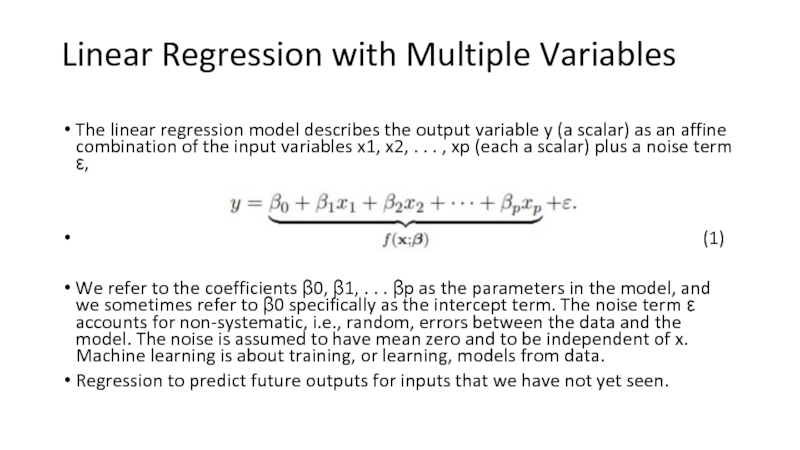

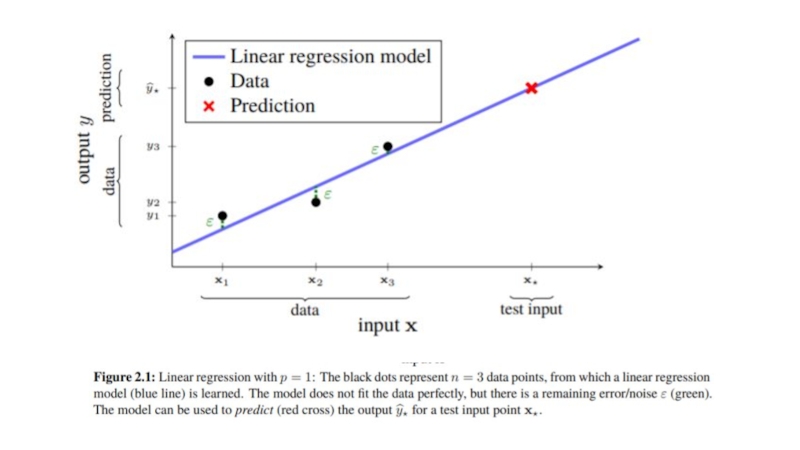

output variable y (a scalar) as an affine combination of the input variables x1, x2, …, xp (each a scalar) plus a noise term ε,

$$
y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_p x_p + \varepsilon. \tag{1}
$$

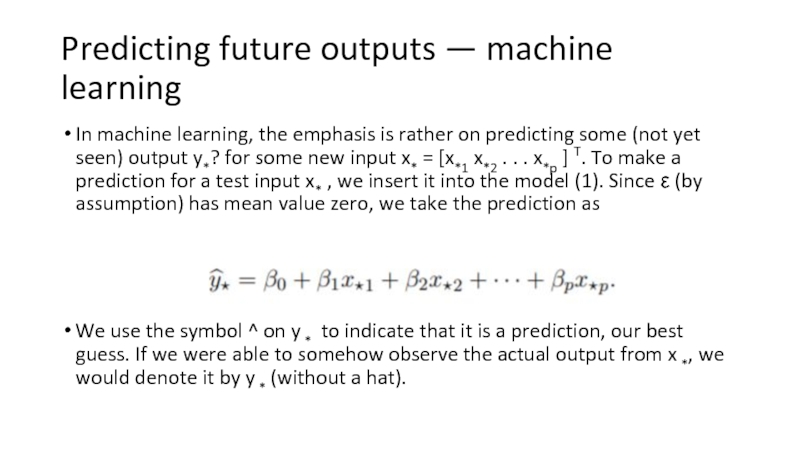

We refer to the coefficients β0, β1, …, βp as the parameters in the model, and we sometimes refer to β0 specifically as the intercept term. The noise term ε accounts for non-systematic, i.e., random, errors between the data and the model. The noise is assumed to have mean zero and to be independent of x. Machine learning is about training, or learning, models from data.
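To make the roles of the intercept, the parameters, and the noise in Eq. (1) concrete, here is a minimal Python sketch that generates synthetic data from the model. The function names `linear_model` and `simulate` are hypothetical, and the choices of Gaussian noise and inputs drawn uniformly on [-1, 1] are illustrative assumptions; the text only requires the noise to have mean zero and be independent of x.

```python
import random


def linear_model(x, beta):
    """Noise-free part of Eq. (1): beta[0] + beta[1]*x[0] + ... + beta[p]*x[p-1]."""
    if len(beta) != len(x) + 1:
        raise ValueError("beta must have one more entry (the intercept) than x")
    return beta[0] + sum(b * xj for b, xj in zip(beta[1:], x))


def simulate(n, beta, noise_std, seed=0):
    """Draw n pairs (x, y) with y = linear_model(x, beta) + eps.

    Assumption: eps ~ N(0, noise_std**2), drawn independently of x, so it has
    mean zero as the text requires. Inputs are uniform on [-1, 1] for illustration.
    """
    rng = random.Random(seed)
    p = len(beta) - 1
    data = []
    for _ in range(n):
        x = [rng.uniform(-1.0, 1.0) for _ in range(p)]
        eps = rng.gauss(0.0, noise_std)  # random error, independent of x
        data.append((x, linear_model(x, beta) + eps))
    return data
```

For example, with β0 = 1, β1 = 0.5, β2 = -1 and input x = (2, 3), `linear_model([2.0, 3.0], [1.0, 0.5, -1.0])` evaluates 1 + 0.5·2 - 1·3 = -1. Setting `noise_std=0.0` makes every simulated y lie exactly on the affine function, which isolates the systematic part of the model from ε.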

We use regression to predict future outputs for inputs that we have not yet seen.
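The two steps described here, learning the parameters from data and then predicting outputs for unseen inputs, can be sketched as follows. This section does not say how the parameters are learned, so the sketch assumes ordinary least squares, solved through the normal equations with Gaussian elimination in plain Python; `fit_least_squares` and `predict` are hypothetical names, not an API from the text.

```python
def fit_least_squares(X, y):
    """Estimate [beta0, beta1, ..., betap] by ordinary least squares (an assumption).

    Solves the normal equations (A^T A) beta = A^T y, where each row of A is
    [1, x1, ..., xp]; the leading 1 pairs with the intercept beta0.
    """
    A = [[1.0] + list(row) for row in X]
    k = len(A[0])
    # Build the k x k system M beta = v with M = A^T A and v = A^T y.
    M = [[sum(a[i] * a[j] for a in A) for j in range(k)] for i in range(k)]
    v = [sum(a[i] * yi for a, yi in zip(A, y)) for i in range(k)]
    # Forward elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("A^T A is singular; the parameters are not identifiable")
        M[col], M[pivot] = M[pivot], M[col]
        v[col], v[pivot] = v[pivot], v[col]
        for r in range(col + 1, k):
            factor = M[r][col] / M[col][col]
            for c in range(col, k):
                M[r][c] -= factor * M[col][c]
            v[r] -= factor * v[col]
    # Back substitution on the upper-triangular system.
    beta = [0.0] * k
    for i in reversed(range(k)):
        s = v[i] - sum(M[i][j] * beta[j] for j in range(i + 1, k))
        beta[i] = s / M[i][i]
    return beta


def predict(x_new, beta):
    """Predicted output for an unseen input: beta0 + beta1*x1 + ... + betap*xp.

    The noise term is left out because it is random with mean zero.
    """
    return beta[0] + sum(b * xj for b, xj in zip(beta[1:], x_new))
```

For instance, training on inputs 0, 1, 2, 3 with outputs 1, 3, 5, 7 recovers β0 ≈ 1 and β1 ≈ 2, and `predict([4.0], beta)` then returns approximately 9 for the input x = 4 that was not in the training data. Solving the normal equations directly keeps the sketch dependency-free; for larger or ill-conditioned problems a QR- or SVD-based solver is numerically safer.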