Разделы презентаций

- Разное

- Английский язык

- Астрономия

- Алгебра

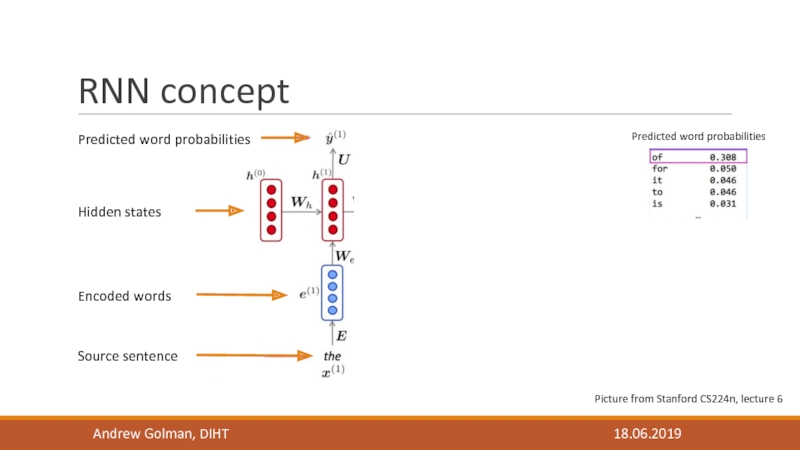

- Биология

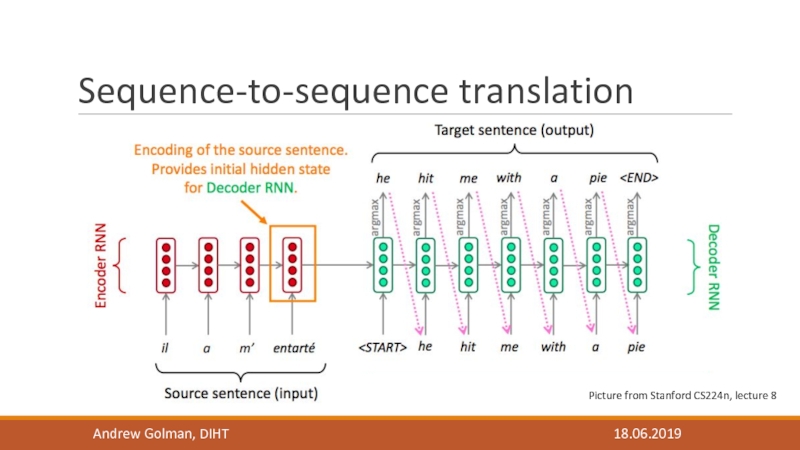

- География

- Геометрия

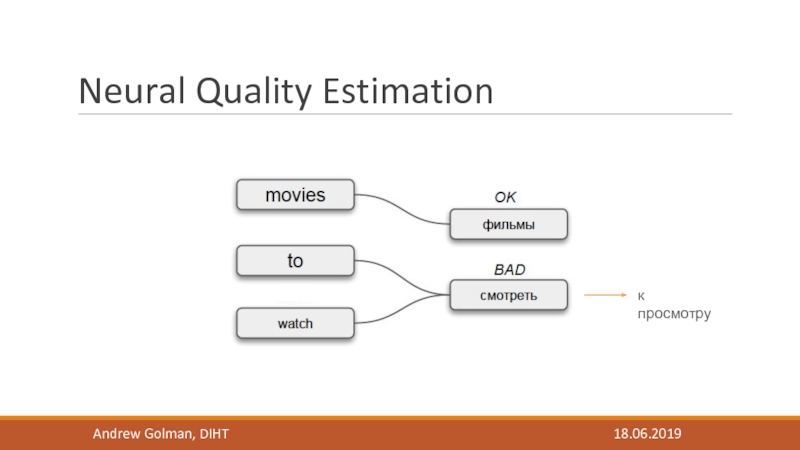

- Детские презентации

- Информатика

- История

- Литература

- Математика

- Медицина

- Менеджмент

- Музыка

- МХК

- Немецкий язык

- ОБЖ

- Обществознание

- Окружающий мир

- Педагогика

- Русский язык

- Технология

- Физика

- Философия

- Химия

- Шаблоны, картинки для презентаций

- Экология

- Экономика

- Юриспруденция

Quality Estimation in Machine Translation

Contents

- 1. Quality Estimation in Machine Translation

- 2. Plan

- 3. 1990s – 2010s: Statistical Machine Translation

- 4. 2014: Neural Machine Translation

- 5. RNN concept

- 6. Sequence-to-sequence translation

- 7. Evaluation

- 8. Neural Quality Estimation

- 9. Similar model for Quality Estimation

- 10. WMT task

- 11. Our experiments

- 12. Summary

- 13. References

- 14. Thank you for your attention (mailto:golman.as@phystech.edu)


Slides and text of this presentation

Slide 1: Quality Estimation in Machine Translation

Andrey Golman

DIHT, 3rd-year bachelor student

18.06.2019

Andrew Golman, DIHT

Slide 2: Plan

- Why do we need Neural Machine Translation?

- What are Seq2Seq models?

- Can we apply the same techniques to the Quality Estimation task?

- What have we achieved?


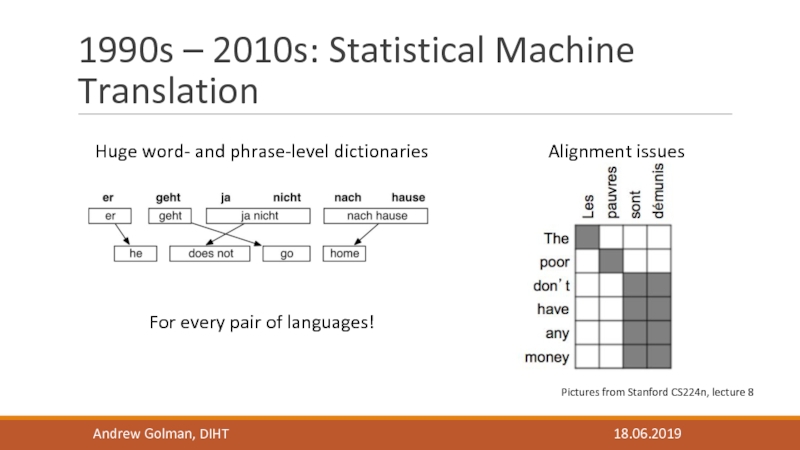

Slide 3: 1990s – 2010s: Statistical Machine Translation

Pictures from Stanford CS224n, lecture 8

- Alignment issues

- Huge word- and phrase-level dictionaries

- For every pair of languages!


Slide 4: 2014: Neural Machine Translation

- A way to do Machine Translation with a single neural network

- The most complex models have 200 million parameters

- More fluent than SMT

- Picks up the meaning first, then phrases it


Slide 5: RNN concept

Figure labels: source sentence, encoded words, hidden states, predicted word probabilities. Picture from Stanford CS224n, lecture 6.
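The figure labels on this slide can be tied together with a minimal sketch of one vanilla (Elman) RNN language-model step. This is an illustration under stated assumptions, not the model from the lecture: the toy vocabulary, the dimensions, the random untrained weights, and the names `rnn_step` and `run_rnn` are all hypothetical.

```python
import math
import random

# Hypothetical toy setup: vocabulary, sizes, and weights are illustrative only.
VOCAB = ["<s>", "the", "cat", "sat", "</s>"]
EMBED_DIM, HIDDEN_DIM = 4, 3
rng = random.Random(0)


def rand_matrix(rows, cols):
    """Small random matrix standing in for trained weights."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


E = rand_matrix(len(VOCAB), EMBED_DIM)       # embeddings ("encoded words")
W_xh = rand_matrix(HIDDEN_DIM, EMBED_DIM)    # input -> hidden
W_hh = rand_matrix(HIDDEN_DIM, HIDDEN_DIM)   # previous hidden -> hidden
W_hy = rand_matrix(len(VOCAB), HIDDEN_DIM)   # hidden -> vocabulary scores


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(scores):
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rnn_step(word, h_prev):
    """One time step: new hidden state and predicted next-word probabilities."""
    x = E[VOCAB.index(word)]
    pre = [a + b for a, b in zip(matvec(W_xh, x), matvec(W_hh, h_prev))]
    h = [math.tanh(p) for p in pre]
    return h, softmax(matvec(W_hy, h))


def run_rnn(words):
    """Feed the source words one by one, carrying the hidden state forward."""
    h = [0.0] * HIDDEN_DIM
    all_probs = []
    for w in words:
        h, probs = rnn_step(w, h)
        all_probs.append(probs)
    return h, all_probs


final_h, step_probs = run_rnn(["<s>", "the", "cat"])
```

Each entry of `step_probs` is a distribution over the toy vocabulary, and `final_h` is the hidden state that would be carried to the next time step.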

Slide 6: Sequence-to-sequence translation

Picture from Stanford CS224n, lecture 8

Slide 7: Evaluation

- Compare with baseline translations

- Pay assessors to mark errors

- Build a neural system for error detection


Slide 9: Similar model for Quality Estimation

Figure labels: first bidirectional LSTM, second bidirectional LSTM, OK/BAD labels, error classification. Picture from [2].
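The idea of reading a sentence in both directions before assigning OK/BAD labels can be sketched as follows. This is a heavily simplified illustration, not the architecture from [2]: it assumes a single plain tanh RNN per direction instead of two stacked LSTMs, random untrained weights, fake token embeddings, and hypothetical names such as `run_direction` and `tag_tokens`.

```python
import math
import random

rng = random.Random(1)
DIM = 3  # hypothetical embedding and hidden size


def rand_matrix(rows, cols):
    """Small random matrix standing in for trained weights."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def run_direction(inputs, w_x, w_h):
    """Run a tanh RNN over inputs in the given order; one hidden state per input."""
    h = [0.0] * DIM
    states = []
    for x in inputs:
        h = [math.tanh(a + b) for a, b in zip(matvec(w_x, x), matvec(w_h, h))]
        states.append(h)
    return states


def tag_tokens(embeddings, params, threshold=0.5):
    """Label each token OK or BAD from its left and right context."""
    fwd = run_direction(embeddings, params["fx"], params["fh"])
    # The backward pass reads the sentence reversed, then is re-aligned to tokens.
    bwd = run_direction(embeddings[::-1], params["bx"], params["bh"])[::-1]
    labels = []
    for f, b in zip(fwd, bwd):
        features = f + b  # concatenate forward and backward states
        logit = sum(w * v for w, v in zip(params["out"], features))
        p_bad = 1.0 / (1.0 + math.exp(-logit))
        labels.append("BAD" if p_bad > threshold else "OK")
    return labels


params = {
    "fx": rand_matrix(DIM, DIM), "fh": rand_matrix(DIM, DIM),
    "bx": rand_matrix(DIM, DIM), "bh": rand_matrix(DIM, DIM),
    "out": [rng.uniform(-1.0, 1.0) for _ in range(2 * DIM)],
}
# Five fake token embeddings standing in for a translated sentence.
sentence = [[rng.uniform(-1.0, 1.0) for _ in range(DIM)] for _ in range(5)]
labels = tag_tokens(sentence, params)
```

With untrained weights the labels are meaningless. The point is only the data flow: every token's label depends on the words before it and the words after it.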


Slide 10: WMT task

- English-German, English-Spanish, English-Russian datasets

- 15,000 labelled sentences

- Pretrained models are allowed

Results from [2]
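The slide does not say which metric the results use. WMT word-level quality estimation tasks of that period commonly reported F1-mult, the product of the F1 scores for the OK and BAD classes. Assuming that metric, a minimal sketch could look like this; the function names and the example labels are hypothetical.

```python
def f1_for_label(gold, pred, label):
    """F1 score treating `label` as the positive class."""
    tp = sum(1 for g, p in zip(gold, pred) if g == label and p == label)
    fp = sum(1 for g, p in zip(gold, pred) if g != label and p == label)
    fn = sum(1 for g, p in zip(gold, pred) if g == label and p != label)
    if tp == 0:
        return 0.0  # also avoids division by zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def f1_mult(gold, pred):
    """Product of F1-OK and F1-BAD over word-level tags."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    return f1_for_label(gold, pred, "OK") * f1_for_label(gold, pred, "BAD")


# Made-up tags for a six-word translation.
gold = ["OK", "OK", "BAD", "OK", "BAD", "OK"]
pred = ["OK", "BAD", "BAD", "OK", "OK", "OK"]
score = f1_mult(gold, pred)  # F1-OK = 0.75, F1-BAD = 0.5
```

Multiplying the two scores penalises a system that labels everything OK, which matters because BAD words are usually the minority class.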


Slide 11: Our experiments

- Use CRFs (lattice-structured RNNs) for final classification

- Try the Transformer architecture

Results from [3]
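To illustrate what a CRF layer adds to final classification, here is a minimal sketch of Viterbi decoding for a linear-chain CRF over OK/BAD tags. It is not the implementation from [3]: the emission scores (imagined as coming from a recurrent encoder) and the transition scores are made-up numbers, and a real model would learn both.

```python
LABELS = ["OK", "BAD"]


def viterbi(emissions, transitions):
    """Return the highest-scoring label sequence and its score.

    emissions: one dict per token mapping label -> score.
    transitions: dict mapping (previous label, current label) -> score.
    """
    best = [dict(emissions[0])]  # best score of any path ending in each label
    back = []                    # back-pointers for tokens 1..n-1
    for scores in emissions[1:]:
        cur_best, cur_back = {}, {}
        for cur in LABELS:
            prev = max(LABELS, key=lambda p: best[-1][p] + transitions[(p, cur)])
            cur_best[cur] = best[-1][prev] + transitions[(prev, cur)] + scores[cur]
            cur_back[cur] = prev
        best.append(cur_best)
        back.append(cur_back)
    last = max(LABELS, key=lambda label: best[-1][label])
    path = [last]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[-1][last]


# Hypothetical scores: the middle token is weakly BAD when seen in isolation.
emissions = [
    {"OK": 2.0, "BAD": 0.0},
    {"OK": 0.4, "BAD": 0.6},
    {"OK": 2.0, "BAD": 0.0},
]
# Hypothetical transitions that favour keeping the same label.
transitions = {
    ("OK", "OK"): 0.5, ("OK", "BAD"): -0.5,
    ("BAD", "OK"): -0.5, ("BAD", "BAD"): 0.5,
}

greedy = [max(LABELS, key=lambda label: e[label]) for e in emissions]
path, score = viterbi(emissions, transitions)
```

Per-token argmax yields `["OK", "BAD", "OK"]`, while joint decoding yields `["OK", "OK", "OK"]`: the transition scores outweigh the weak evidence for an isolated BAD tag. Scoring the whole sequence jointly is the main design difference from an independent per-token classifier.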


Slide 12: Summary

- NMT is more efficient than SMT

- RNNs are used for text generation

- The first NMT systems were based on two RNNs

- Quality estimation models can be used to compare translation systems

- Results are improving every year

Slide 13: References

[1] Yonghui Wu, Mike Schuster, Zhifeng Chen, Quoc V. Le, Mohammad Norouzi. Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation, 2016

[2] Hyun Kim, Jong-Hyeok Lee, Seung-Hoon Na. Predictor-estimator using multilevel task learning with stack propagation for neural quality estimation, 2017

[3] Mikhail Mosyagin, Amir Yagudin, Andrey Golman. Comparison of Various Architectures for Word-Level Quality Estimation, 2019

