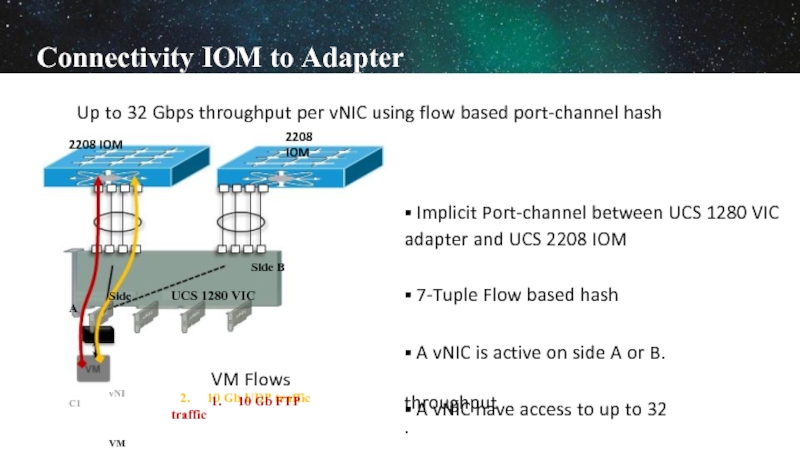

32 Gbps

HW Capable of 256 PCIe devices

• OS restrictions apply

PCIe virtualization is OS independent (same as M81KR)

Single OS driver image for both M81KR and 1280 VIC

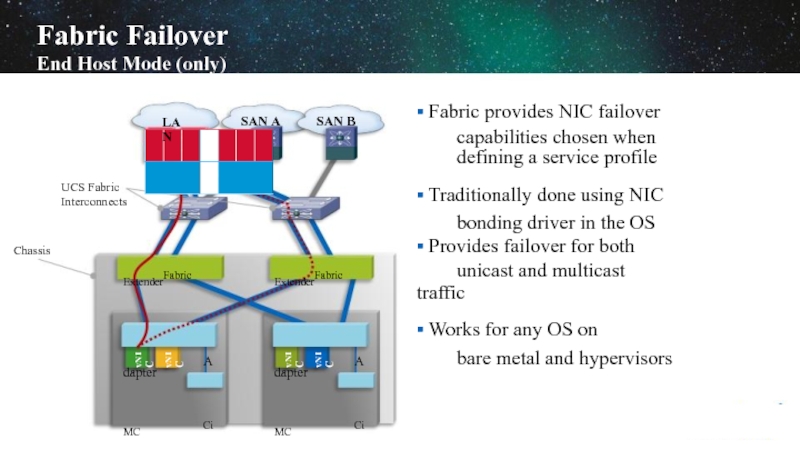

Fabric Failover supported

Eth hash inputs: Source MAC Address, Destination MAC Address, Source Port, Destination Port, Source IP Address, Destination IP Address, and VLAN

FC hash inputs: Source MAC Address, Destination MAC Address, FC SID, and FC DID
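The hash inputs listed on this slide decide which member link of a port-channel carries a given flow. The following is a minimal sketch of that idea, not the adapter's actual algorithm: the slide does not name the hash function, so CRC32 is an assumption, and `pick_link`, the sample addresses, ports, VLAN, and FC IDs are all hypothetical values chosen for illustration.

```python
import zlib


def pick_link(fields, num_links=4):
    """Map a flow's hash inputs to one member link of a port-channel.

    Assumption: CRC32 stands in for the hardware hash, which the slide
    does not specify. num_links=4 matches one 4x 10 GE port-channel.
    """
    # Join the fields into one byte string so the same flow always
    # produces the same key, and therefore the same link.
    key = "|".join(str(f) for f in fields).encode("utf-8")
    return zlib.crc32(key) % num_links


# Ethernet flow: src MAC, dst MAC, src port, dst port, src IP, dst IP, VLAN
# (all values are made up for this example)
eth_flow = ("00:25:b5:00:00:01", "00:25:b5:00:00:02",
            49152, 443, "10.0.0.1", "10.0.0.2", 100)

# FC flow: src MAC, dst MAC, FC SID, FC DID (also made-up values)
fc_flow = ("00:25:b5:00:00:01", "00:25:b5:00:00:02", 0x010203, 0x040506)

print("Ethernet flow uses link", pick_link(eth_flow))
print("FC flow uses link", pick_link(fc_flow))
```

Because the link is a pure function of the hash inputs, all frames of one flow stay on one 10 GE link and arrive in order, while different flows can spread across the four links. A single flow is therefore limited to the bandwidth of one member link in this model.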

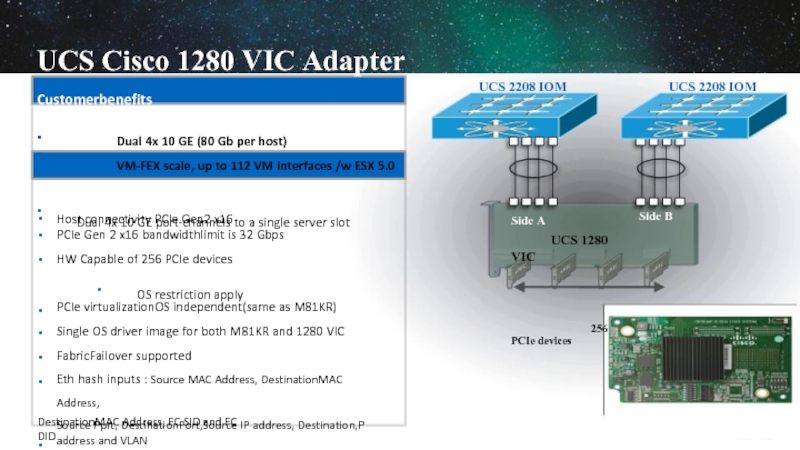

UCS Cisco 1280 VIC Adapter

Customer benefits

Dual 4x 10 GE (80 Gb per host)

VM-FEX scale: up to 112 VM interfaces with ESX 5.0

Feature details

• Dual 4x 10 GE port-channels to a single server slot

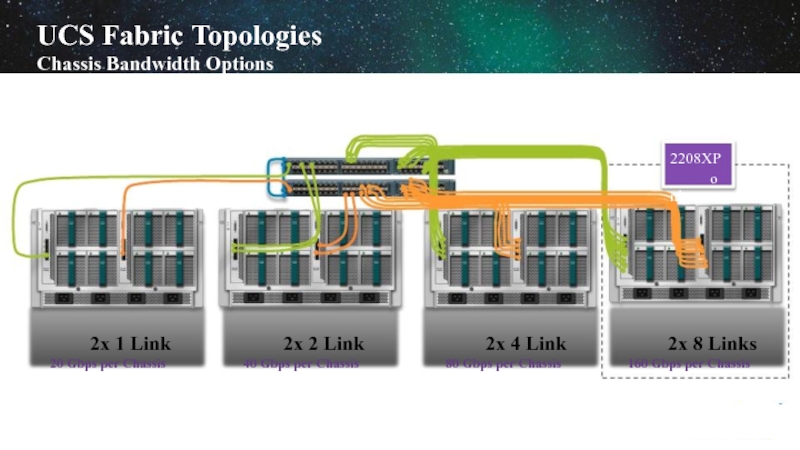

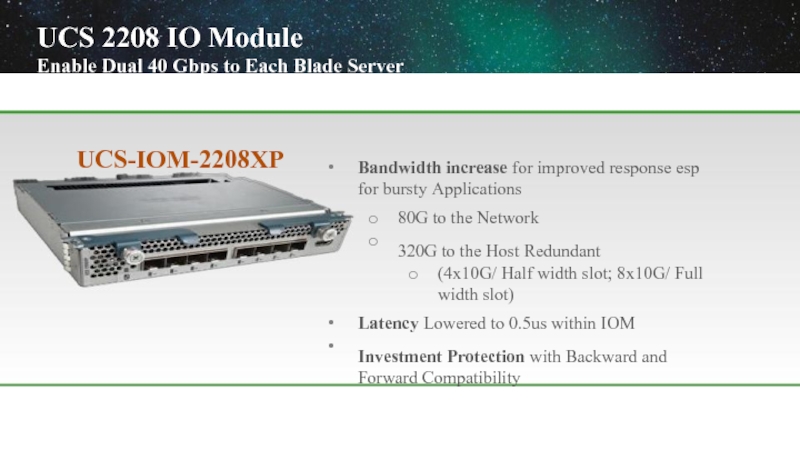

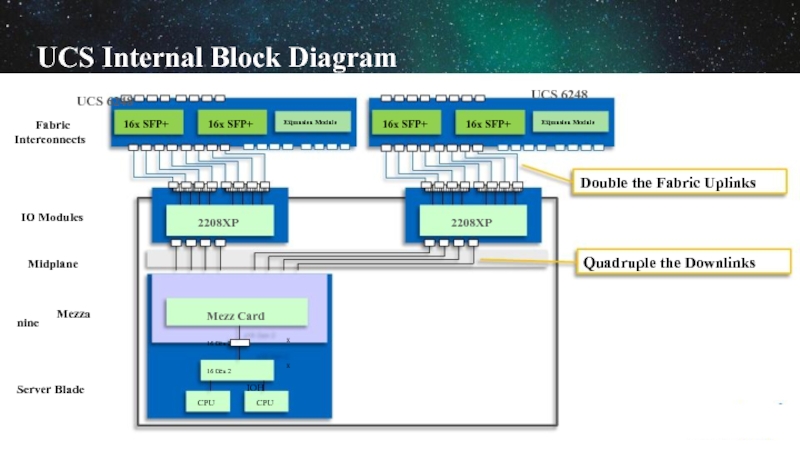

Figure labels: UCS 2208 IOM (Side A), UCS 2208 IOM (Side B), UCS 1280 VIC, 256 PCIe devices