CS 525 Advanced Distributed Systems, Spring 2011, Indranil Gupta

Contents

- 1. 1 CS 525 Advanced Distributed Systems Spring 2011 Indranil Gupta

- 2. Target SettingsProcess ‘group’-based systemsClouds/Datacenters Replicated serversDistributed databasesCrash-stop/Fail-stop process failures

- 3. Group Membership ServiceApplication Queries e.g.,

- 4. Two sub-protocolsApplication Process piGroup Membership ListUnreliable CommunicationAlmost-Complete

- 5. Large Group: Scalability A Goalthis is us (pi)1000’s of processesProcess Group“Members”

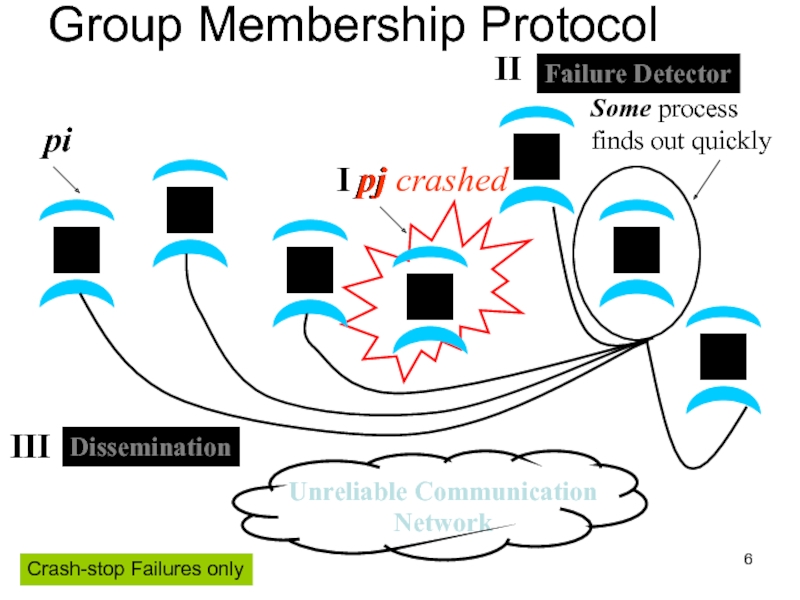

- 6. pjGroup Membership ProtocolCrash-stop Failures only

- 7. I. pj crashes Nothing we can do

- 8. II. Distributed Failure Detectors: Desirable PropertiesCompleteness =

- 9. Distributed Failure Detectors: PropertiesCompletenessAccuracySpeedTime to first detection of a failureScaleEqual Load on each memberNetwork Message Load

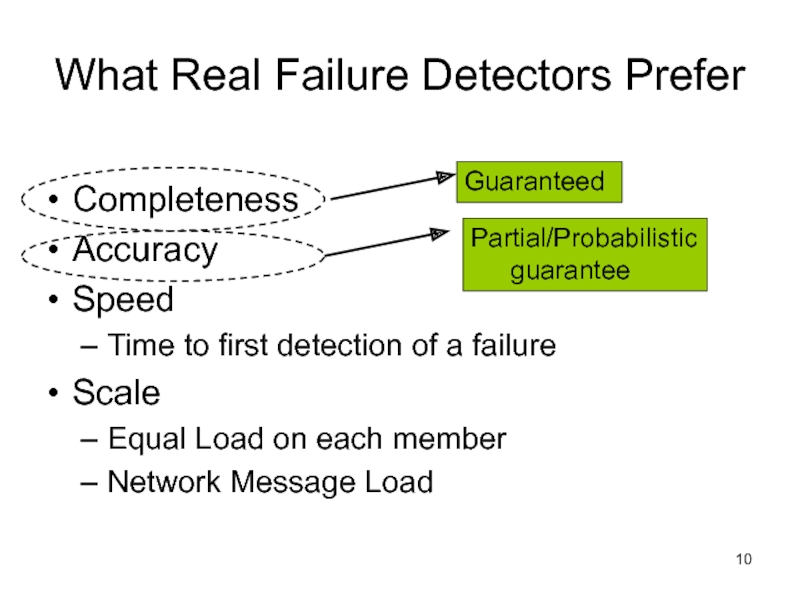

- 10. What Real Failure Detectors PreferCompletenessAccuracySpeedTime to first

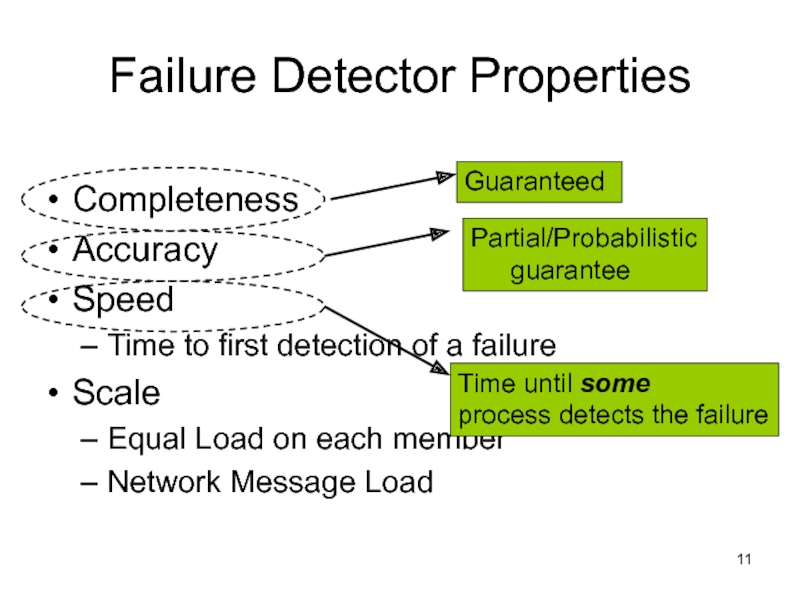

- 11. Failure Detector PropertiesCompletenessAccuracySpeedTime to first detection of

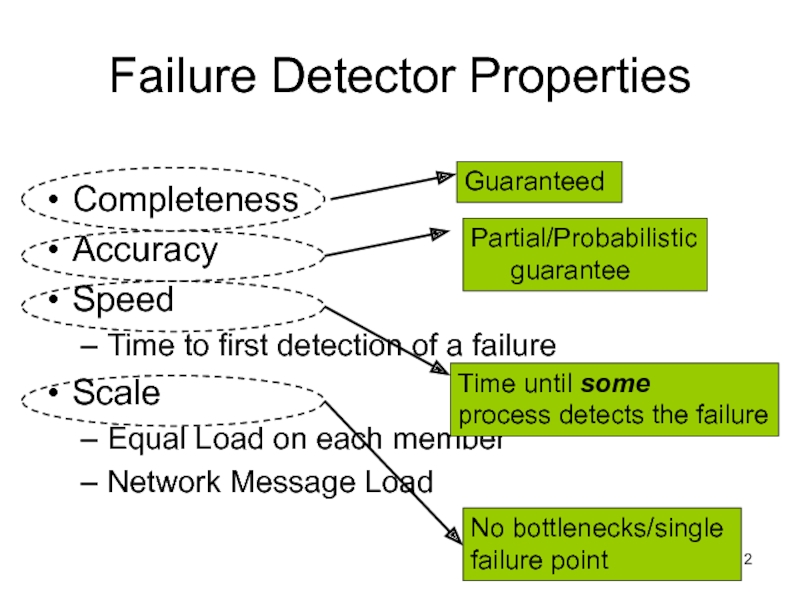

- 12. Failure Detector PropertiesCompletenessAccuracySpeedTime to first detection of

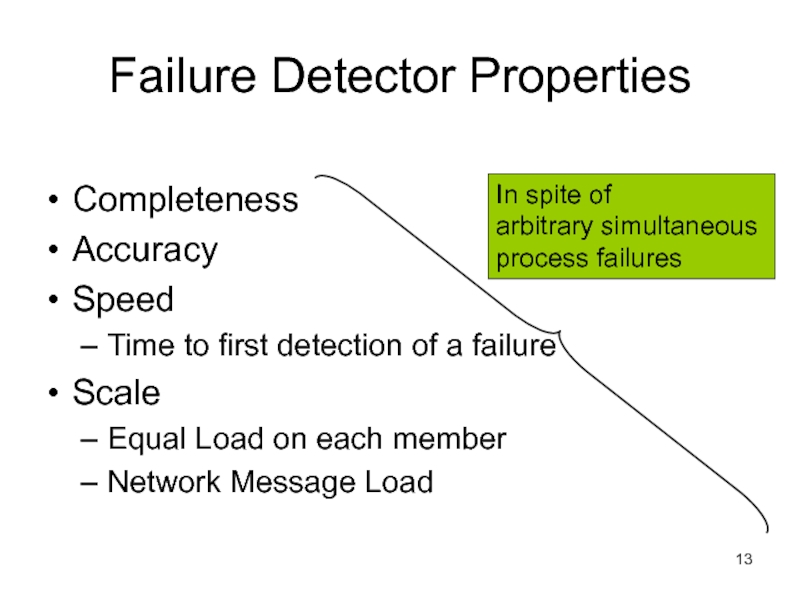

- 13. Failure Detector PropertiesCompletenessAccuracySpeedTime to first detection of

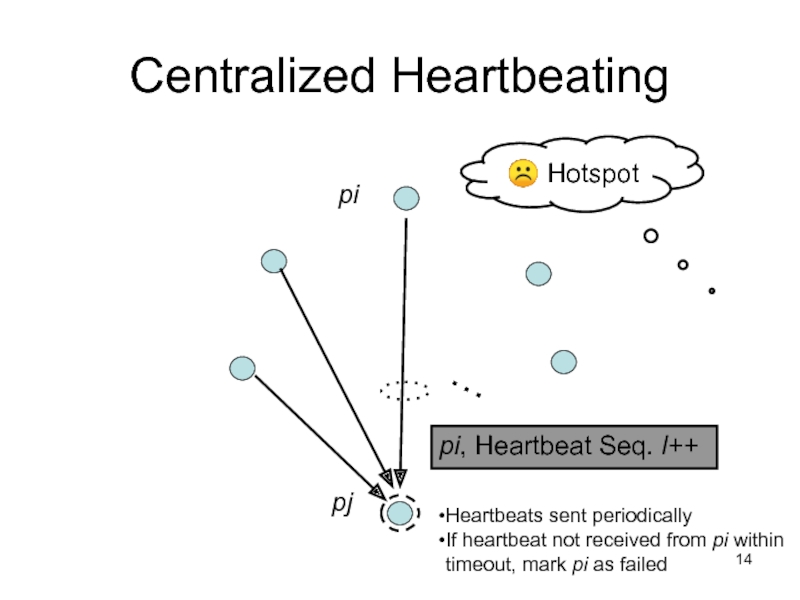

- 14. Centralized Heartbeating…pi, Heartbeat Seq. l++ pipjHeartbeats sent

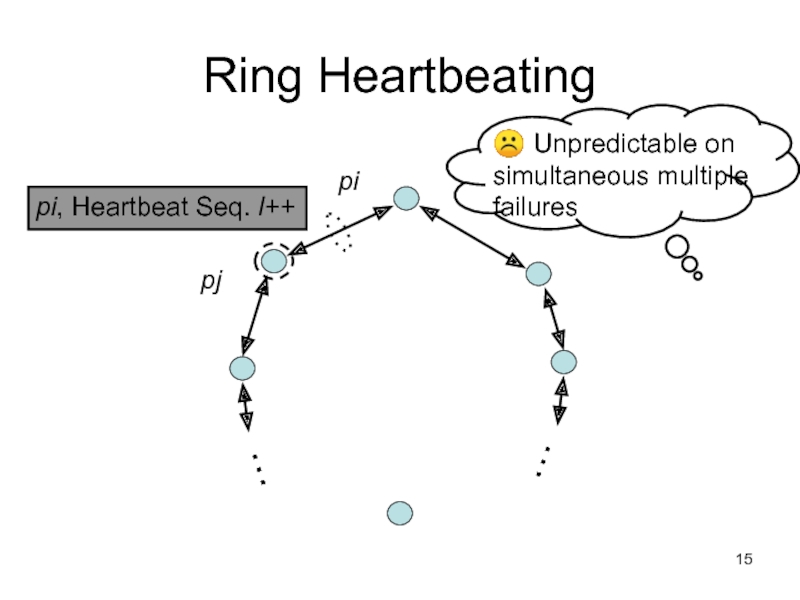

- 15. Ring Heartbeatingpi, Heartbeat Seq. l++pi……pj

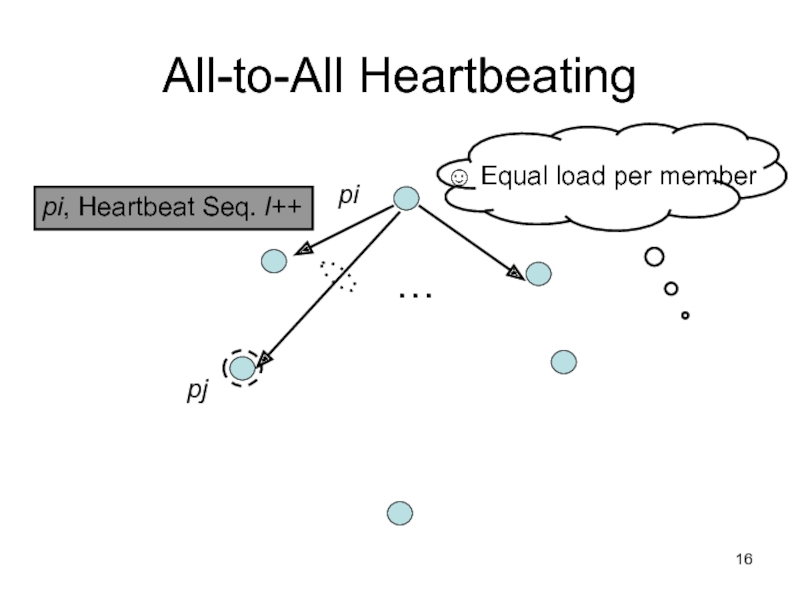

- 16. All-to-All Heartbeatingpi, Heartbeat Seq. l++…pipj

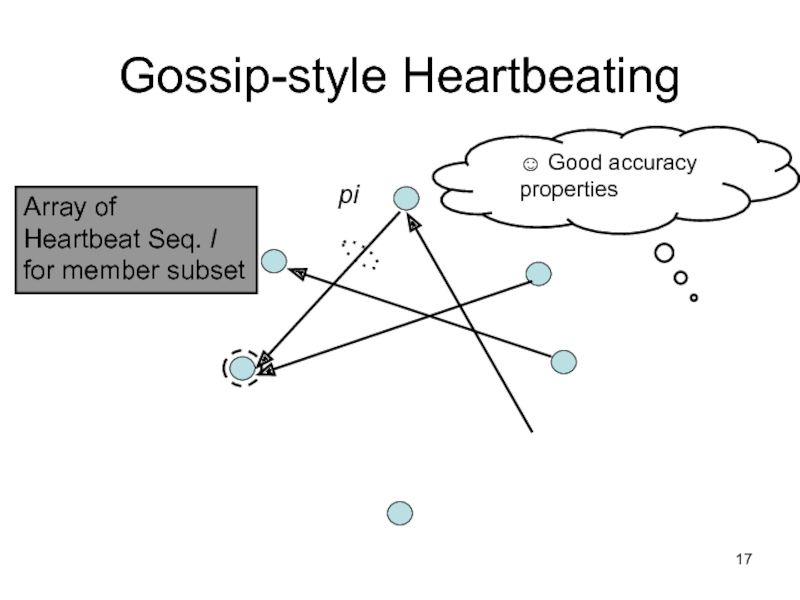

- 17. Gossip-style HeartbeatingArray of Heartbeat Seq. lfor member subsetpi

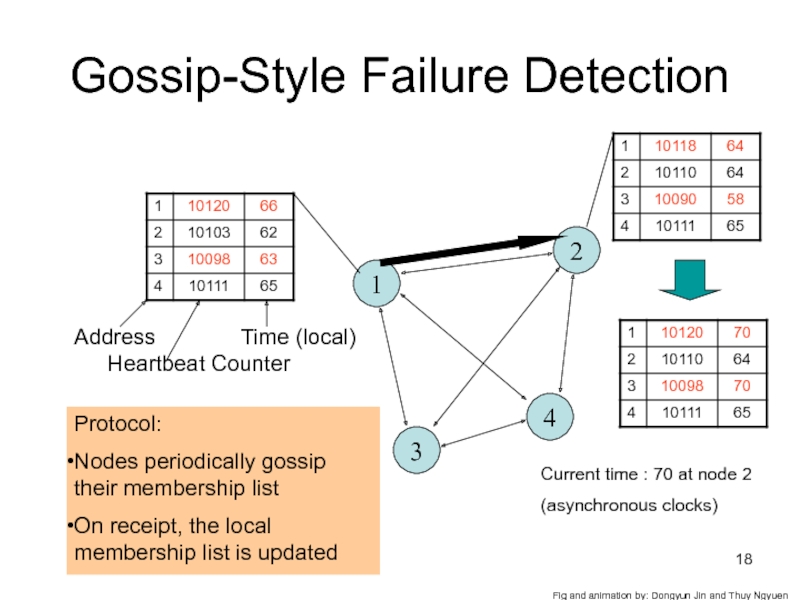

- 18. Gossip-Style Failure Detection1243Protocol: Nodes periodically gossip their

- 19. Gossip-Style Failure DetectionIf the heartbeat has not

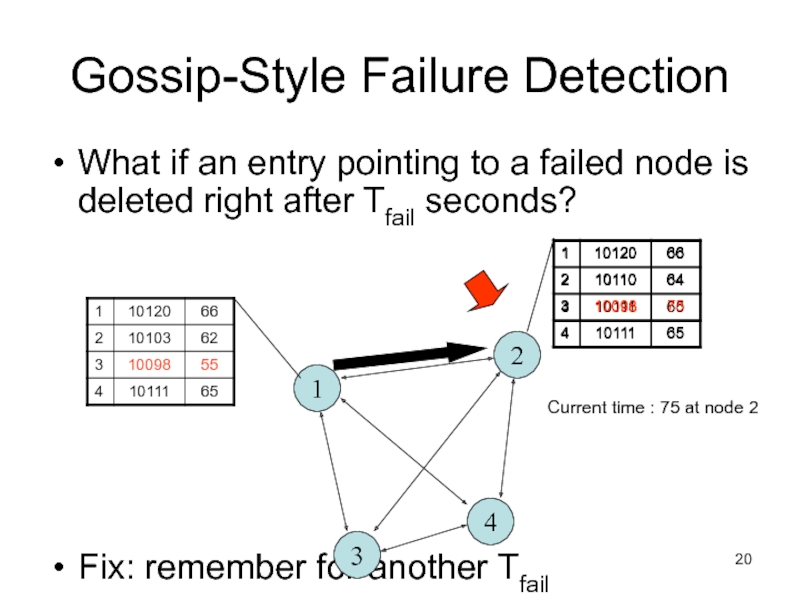

- 20. Gossip-Style Failure DetectionWhat if an entry pointing

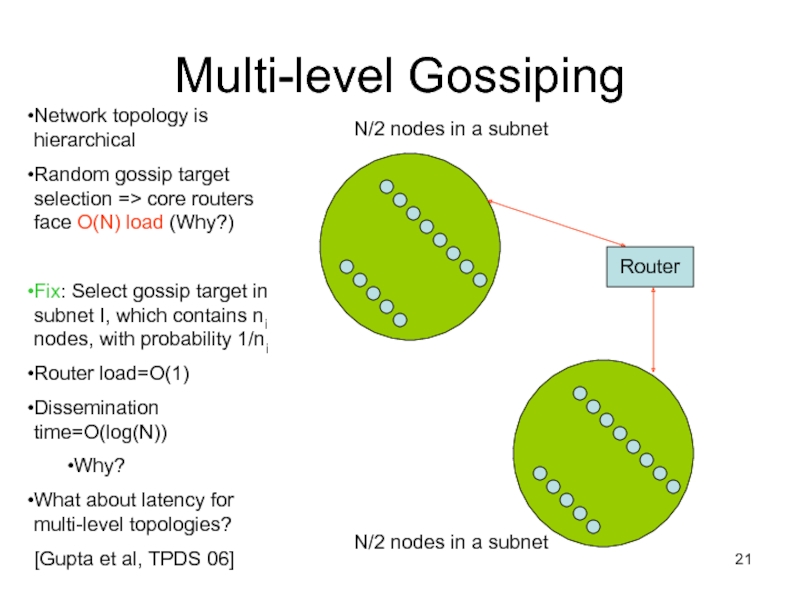

- 21. Multi-level GossipingNetwork topology is hierarchicalRandom gossip target

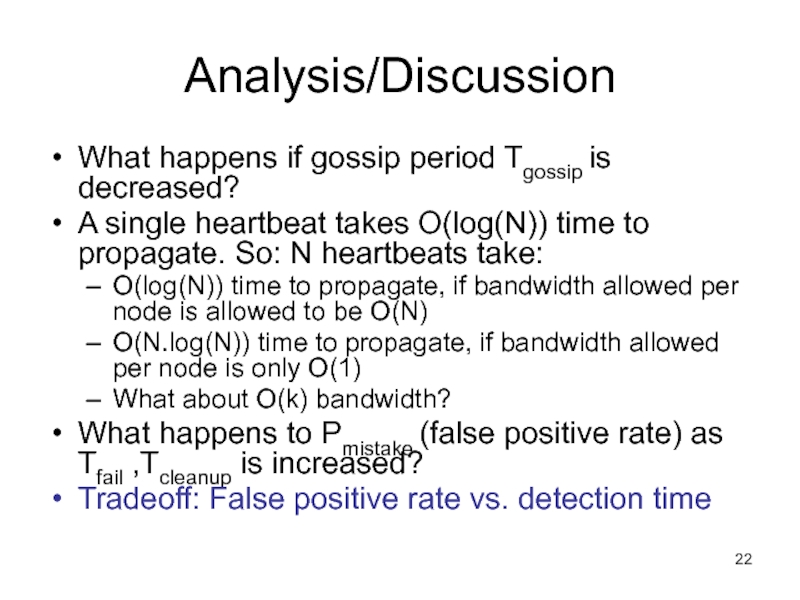

- 22. Analysis/DiscussionWhat happens if gossip period Tgossip is

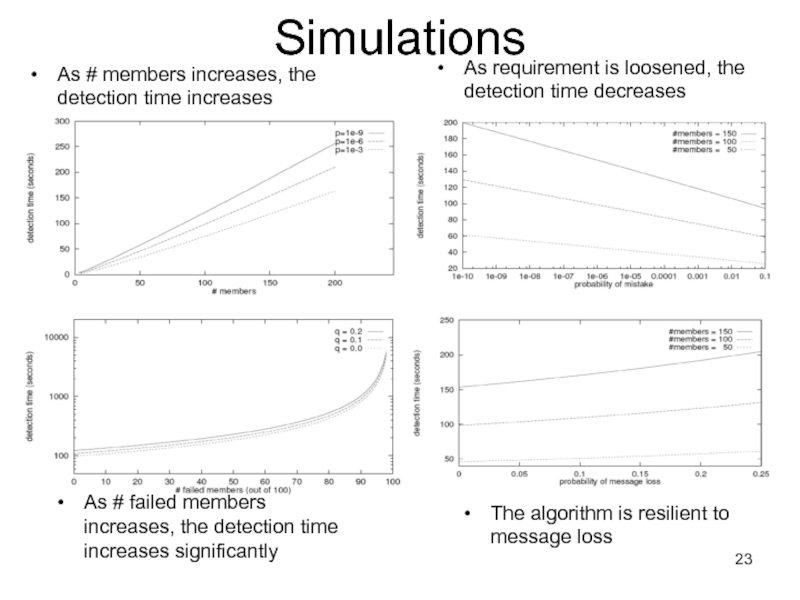

- 23. SimulationsAs # members increases, the detection time

- 24. Failure Detector Properties …CompletenessAccuracySpeedTime to first detection of a failureScaleEqual Load on each memberNetwork Message Load

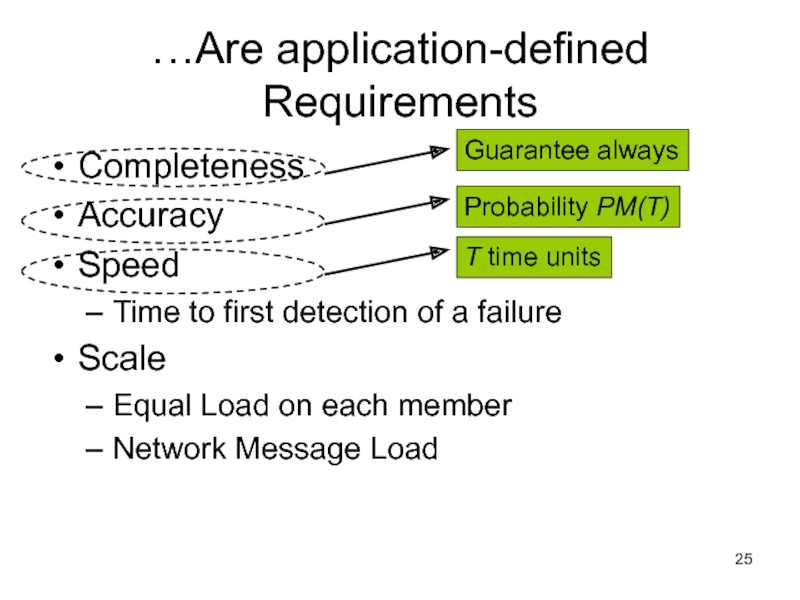

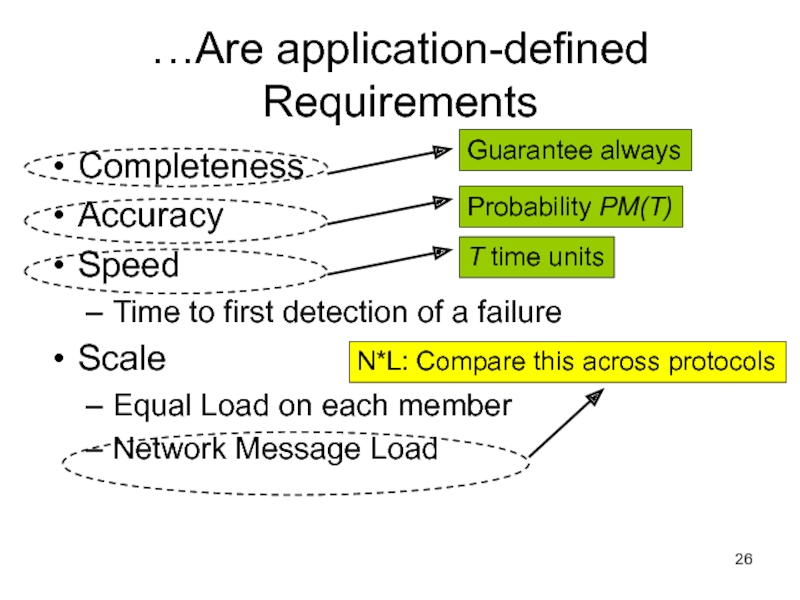

- 25. …Are application-defined RequirementsCompletenessAccuracySpeedTime to first detection of

- 26. …Are application-defined RequirementsCompletenessAccuracySpeedTime to first detection of

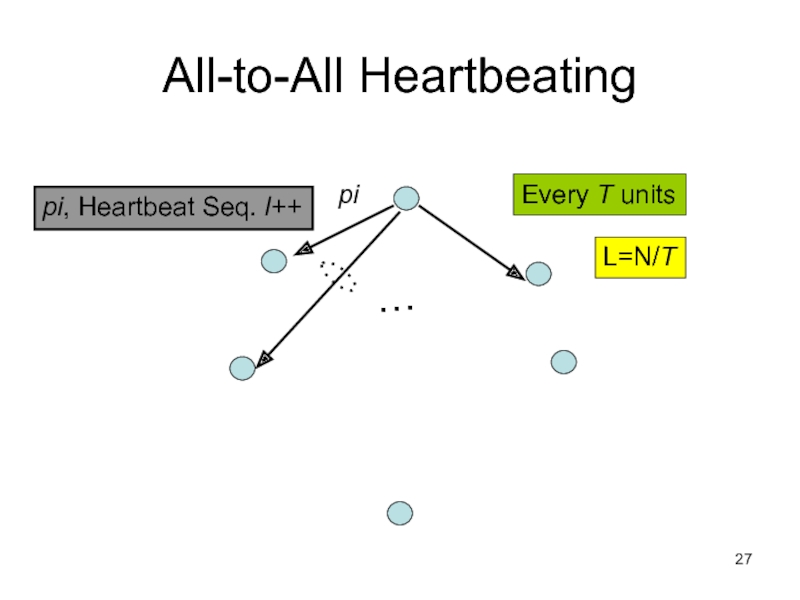

- 27. All-to-All Heartbeatingpi, Heartbeat Seq. l++…piEvery T unitsL=N/T

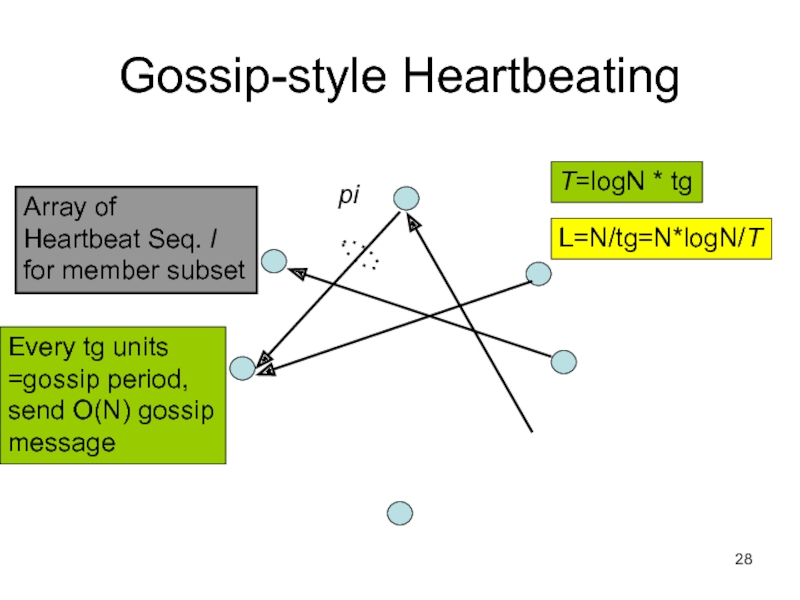

- 28. Gossip-style HeartbeatingArray of Heartbeat Seq. lfor member subsetpiEvery tg units=gossip period,send O(N) gossipmessageT=logN * tgL=N/tg=N*logN/T

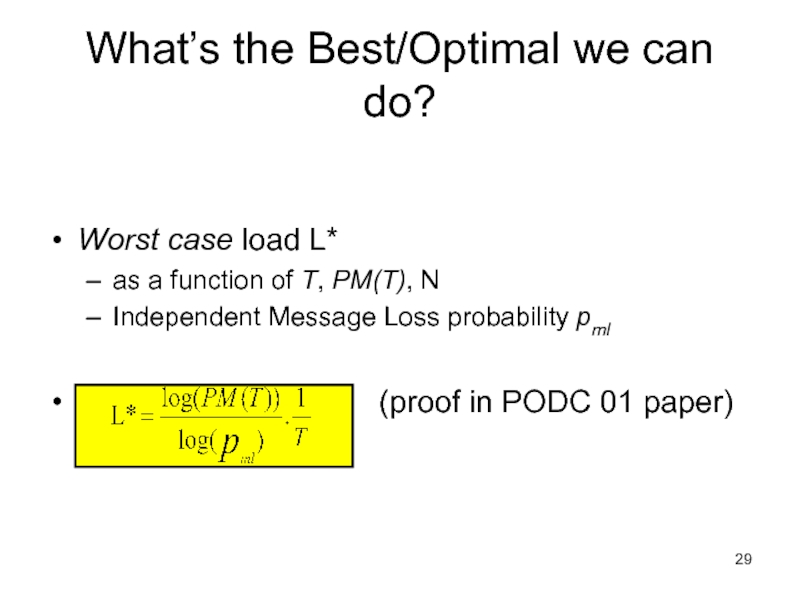

- 29. Worst case load L* as a function

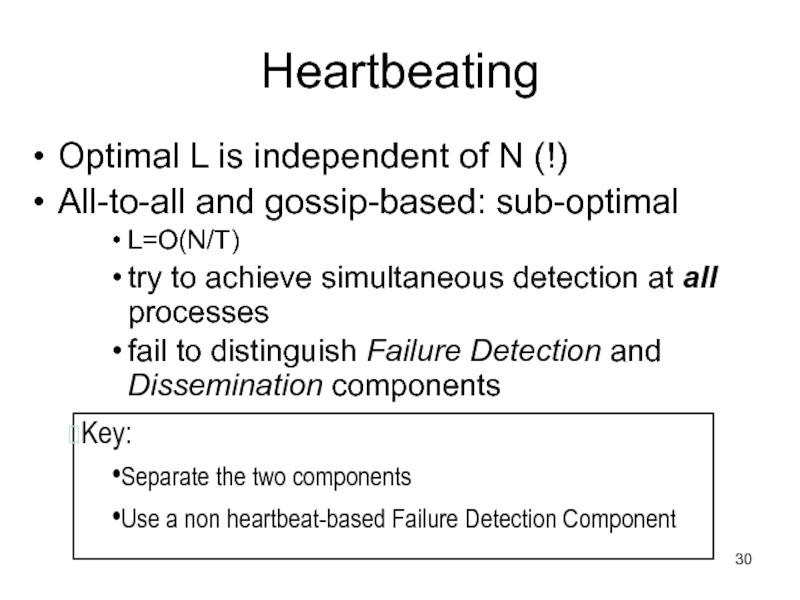

- 30. HeartbeatingOptimal L is independent of N (!)All-to-all

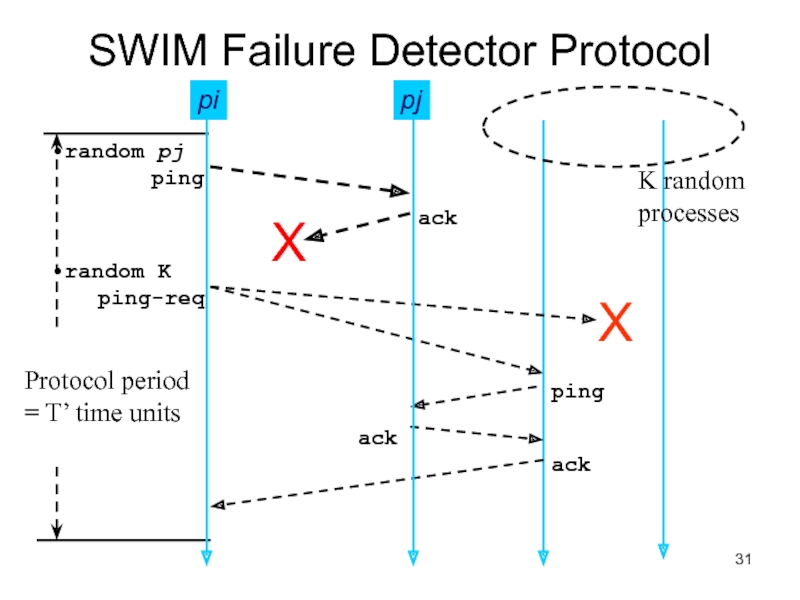

- 31. SWIM Failure Detector Protocolpj

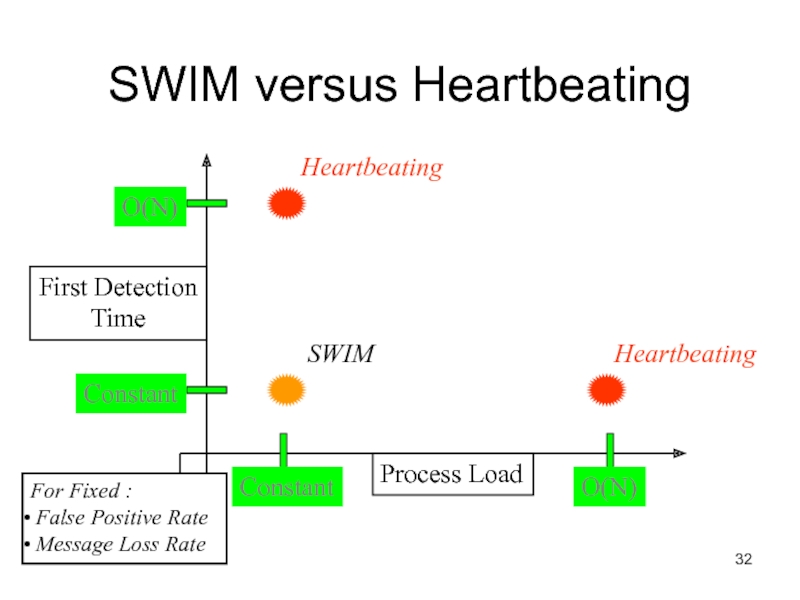

- 32. SWIM versus HeartbeatingProcess LoadFirst DetectionTimeConstantConstantO(N)O(N)SWIMFor Fixed : False Positive Rate Message Loss RateHeartbeatingHeartbeating

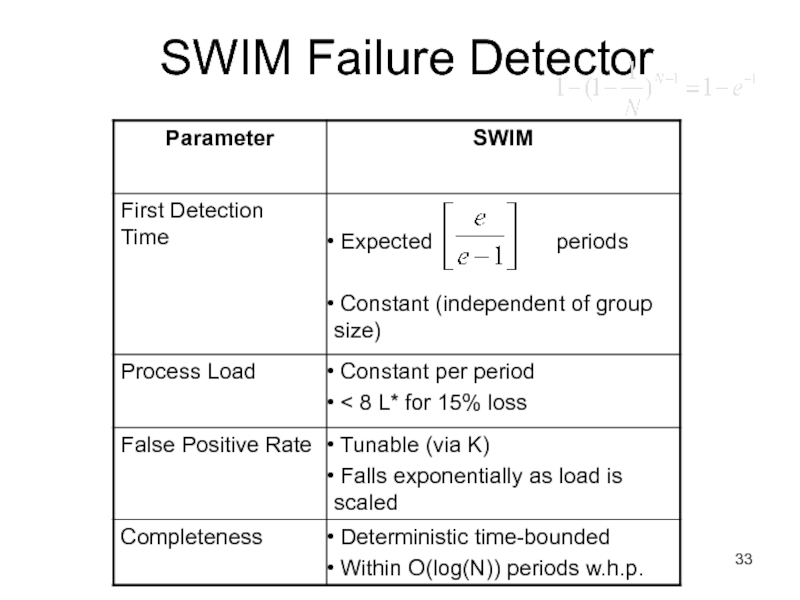

- 33. SWIM Failure Detector

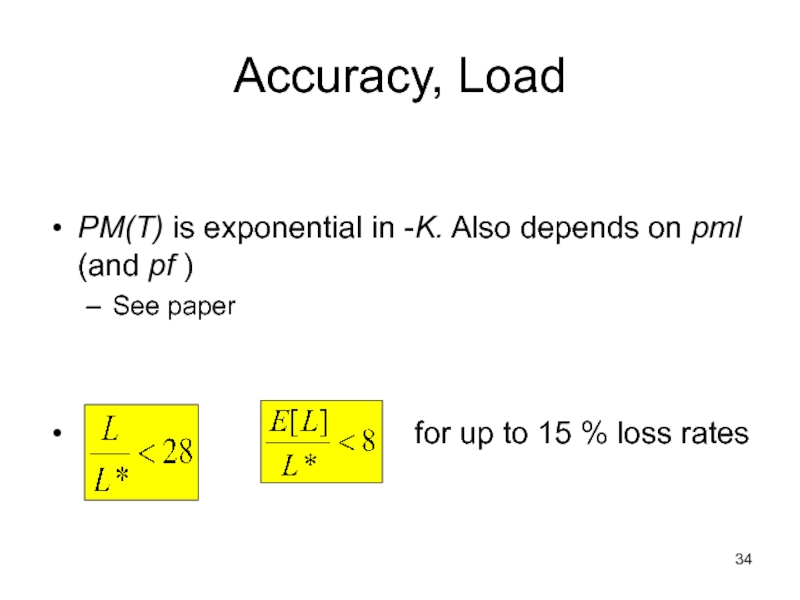

- 34. Accuracy, LoadPM(T) is exponential in -K. Also

- 35. Prob. of being pinged in T’=E[T ]

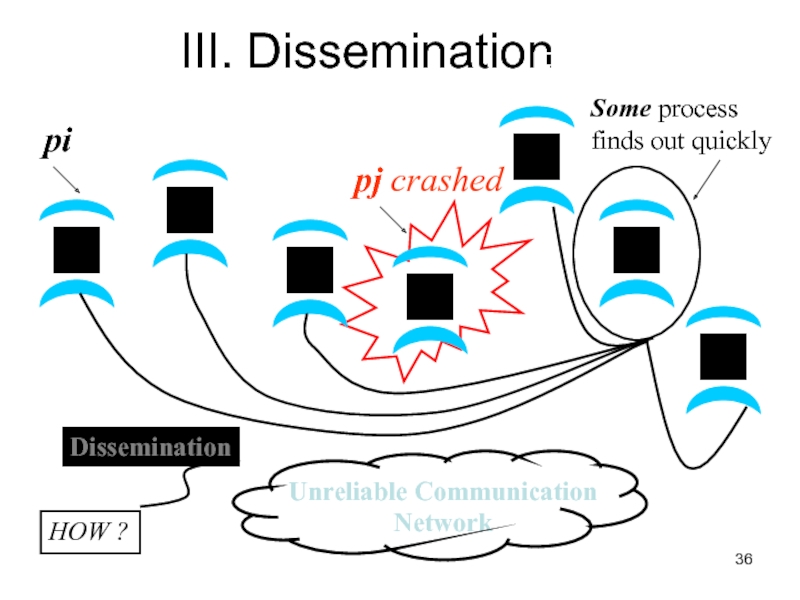

- 36. III. DisseminationHOW ?

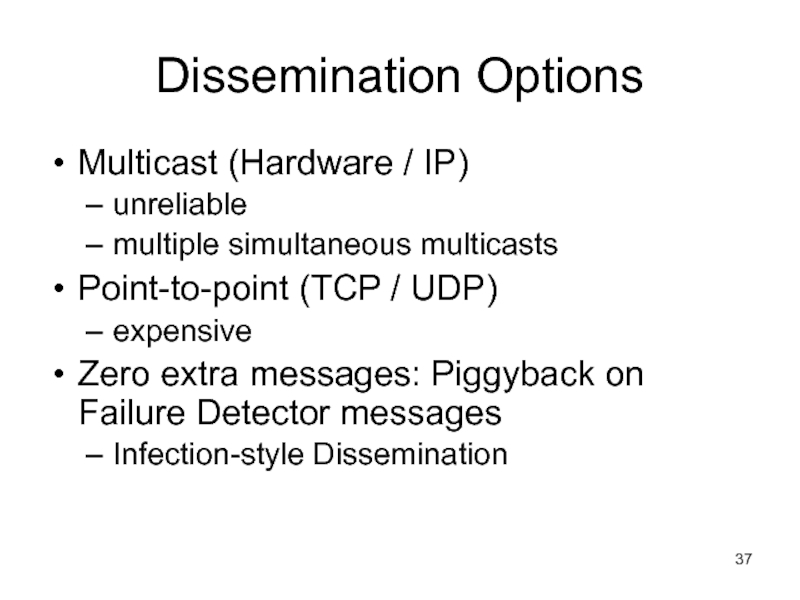

- 37. Dissemination OptionsMulticast (Hardware / IP)unreliable multiple simultaneous

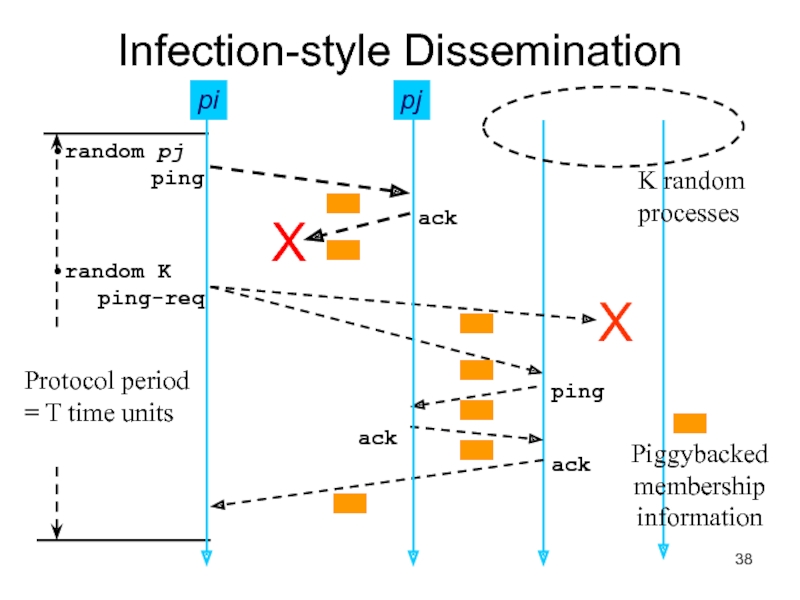

- 38. Infection-style DisseminationpjK randomprocesses

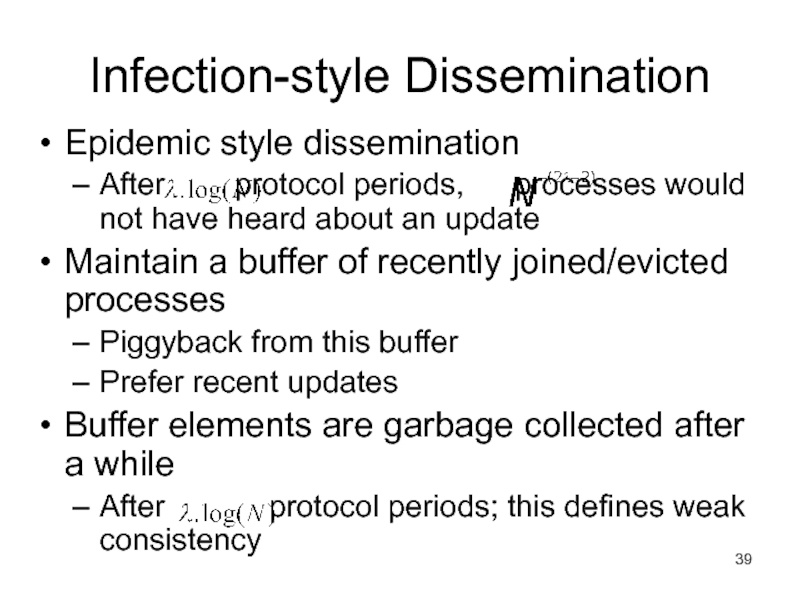

- 39. Infection-style DisseminationEpidemic style disseminationAfter protocol periods,

- 40. Suspicion MechanismFalse detections, due toPerturbed processesPacket losses,

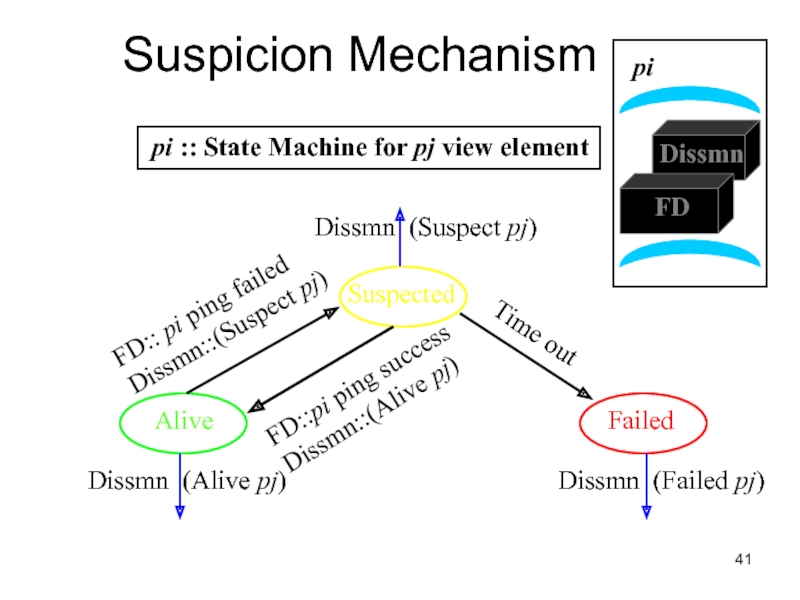

- 41. Suspicion MechanismAliveSuspectedFailedDissmn (Suspect pj)Dissmn (Alive pj)Dissmn (Failed

- 42. Suspicion MechanismDistinguish multiple suspicions of a process

- 43. Time-bounded CompletenessKey: select each membership element once

- 44. Results from an ImplementationCurrent implementationWin2K, uses Winsock

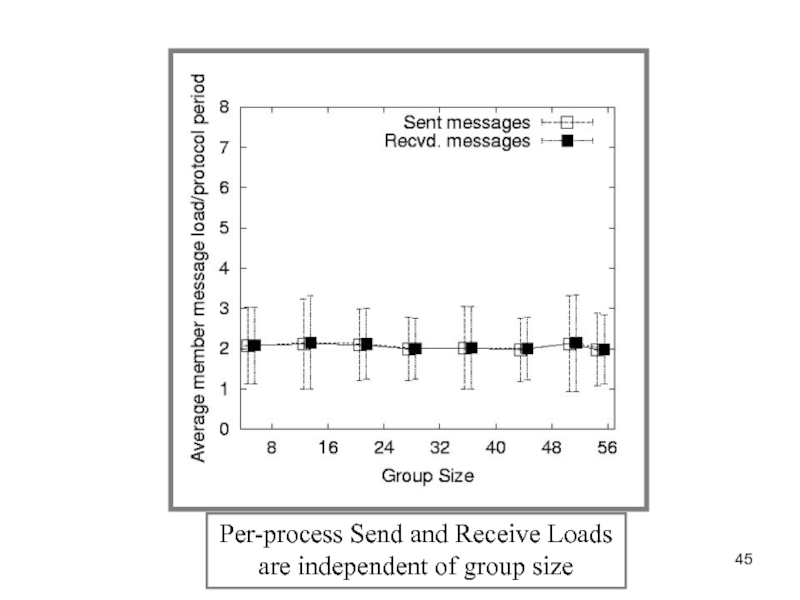

- 45. Per-process Send and Receive Loads are independent of group size

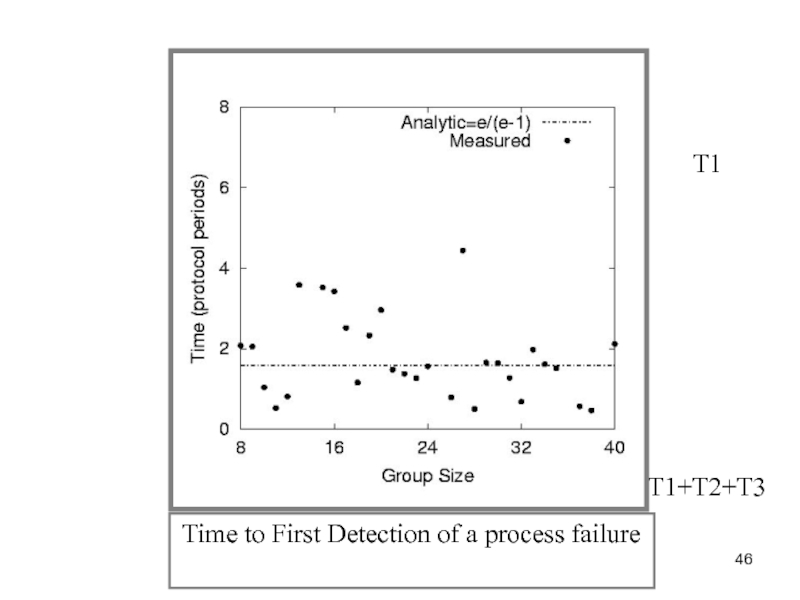

- 46. Time to First Detection of a process failure T1T1+T2+T3

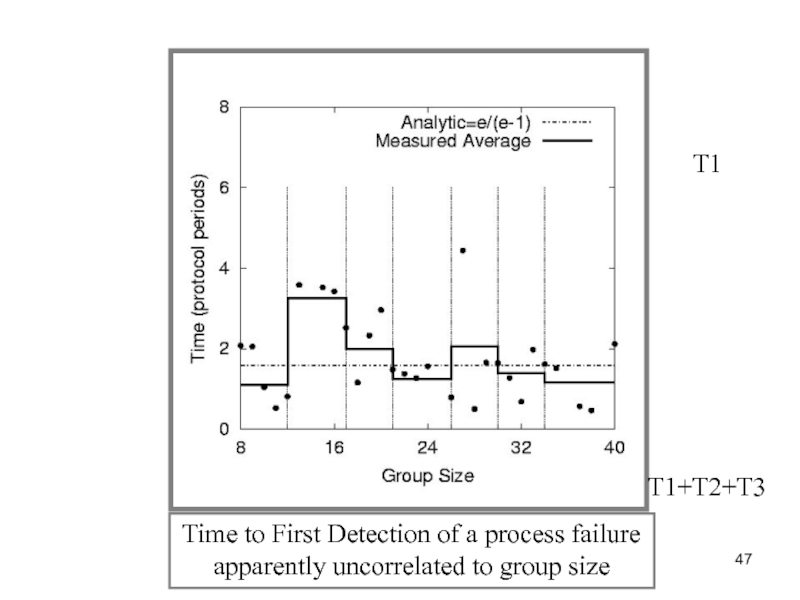

- 47. T1Time to First Detection of a process failure apparently uncorrelated to group sizeT1+T2+T3

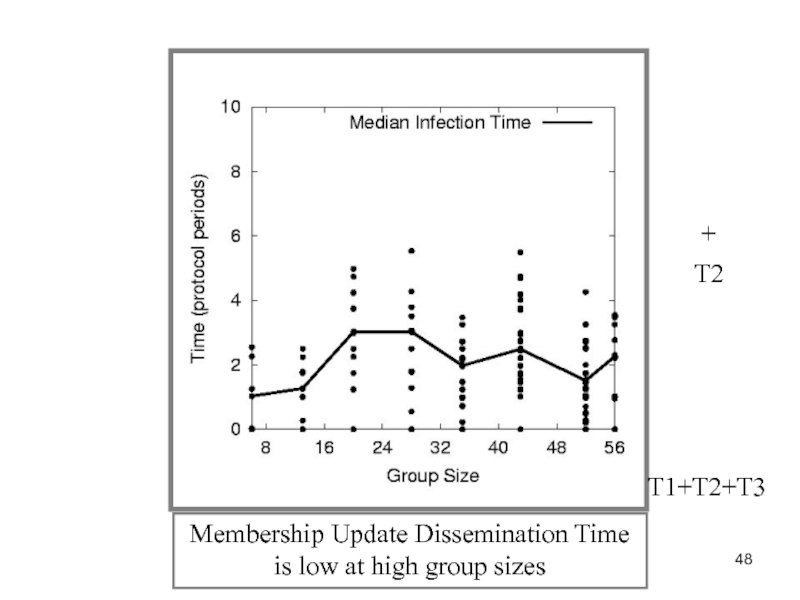

- 48. Membership Update Dissemination Time is low at high group sizesT2+T1+T2+T3

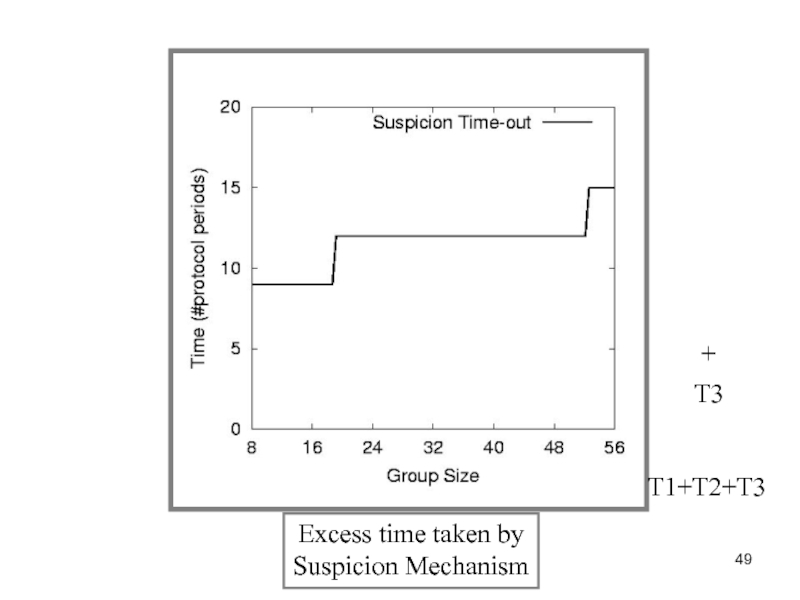

- 49. Excess time taken by Suspicion MechanismT3+T1+T2+T3

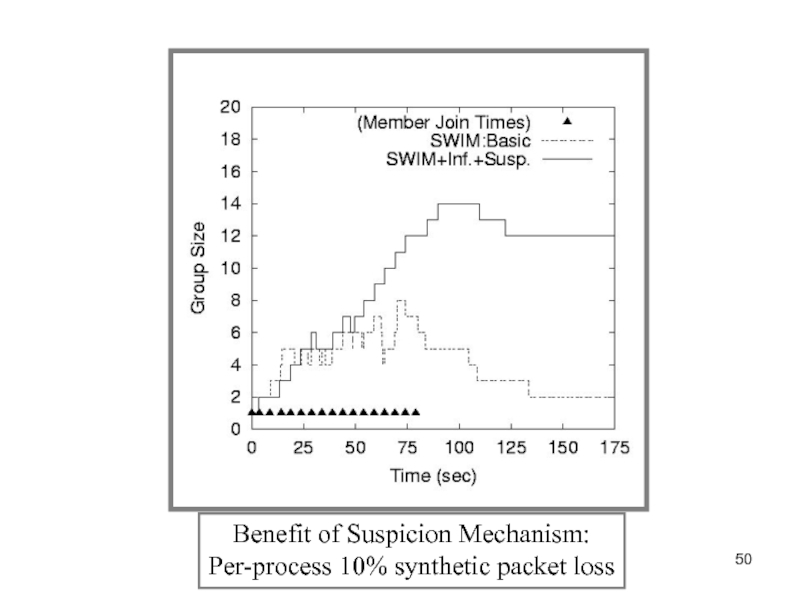

- 50. Benefit of Suspicion Mechanism:Per-process 10% synthetic packet loss

- 51. More discussion pointsIt turns out that with

- 52. Reminder – Due this Sunday April 3rd

- 53. Questions


Slides and text of this presentation

Slide 1: CS 525 Advanced Distributed Systems

Spring 2011

Indranil Gupta (Indy)

Membership Protocols (and Failure Detectors)

Slide 2: Target Settings

Process ‘group’-based systems

Clouds/Datacenters

Replicated servers

Distributed databases

Crash-stop/Fail-stop process failures

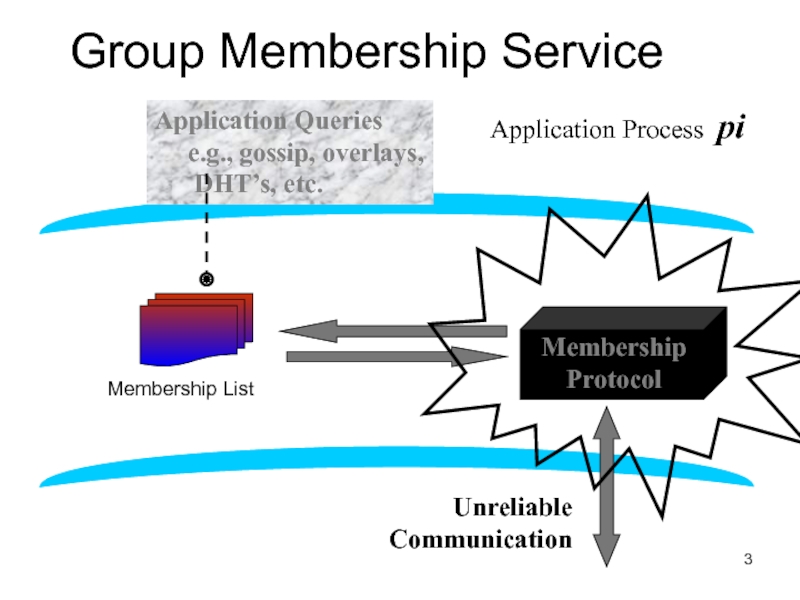

Слайд 3Group Membership Service

Application Queries

e.g., gossip, overlays, DHT’s,

etc.

Membership

Protocol

Group

Membership List

joins, leaves, failures

of members

Unreliable

Communication

Application Process pi

Membership

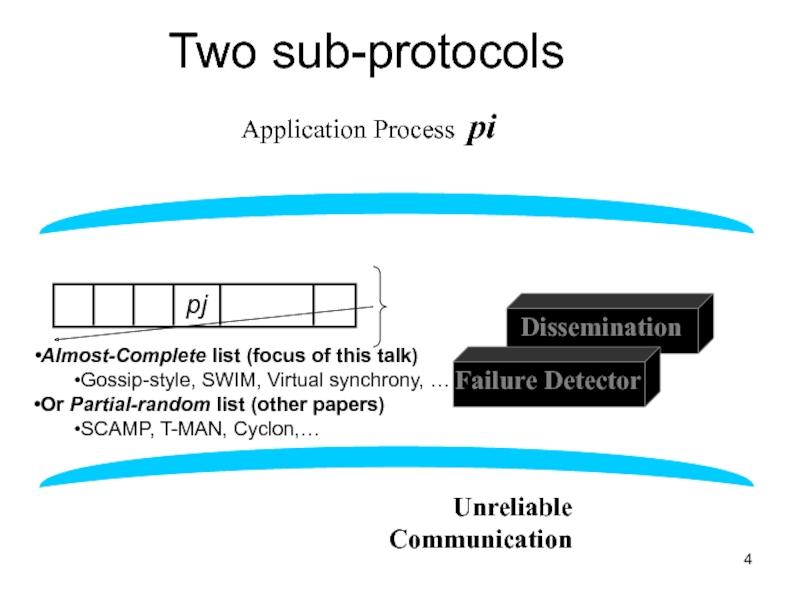

ListСлайд 4Two sub-protocols

Application Process pi

Group Membership List

Unreliable Communication

Almost-Complete list (focus of this talk)

Gossip-style, SWIM, Virtual synchrony, …

Or Partial-random list (other papers)

SCAMP, T-MAN, Cyclon, …

Slide 7: I. pj crashes

Nothing we can do about it!

A

frequent occurrence

Common case rather than exception

Frequency goes up at least

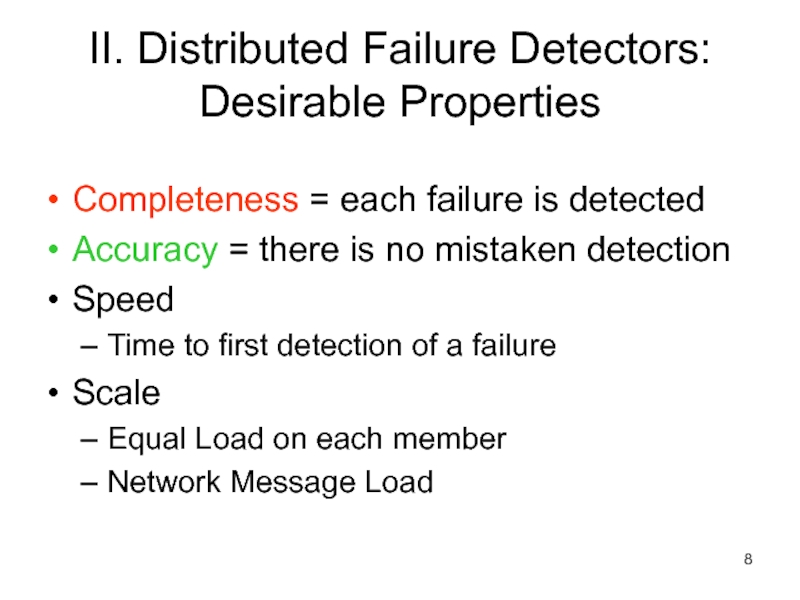

linearly with size of datacenterСлайд 8II. Distributed Failure Detectors: Desirable Properties

Completeness = each failure is detected

Accuracy = there is no mistaken detection

Speed

Time to first detection of a failure

Scale

Equal Load on each member

Network Message Load

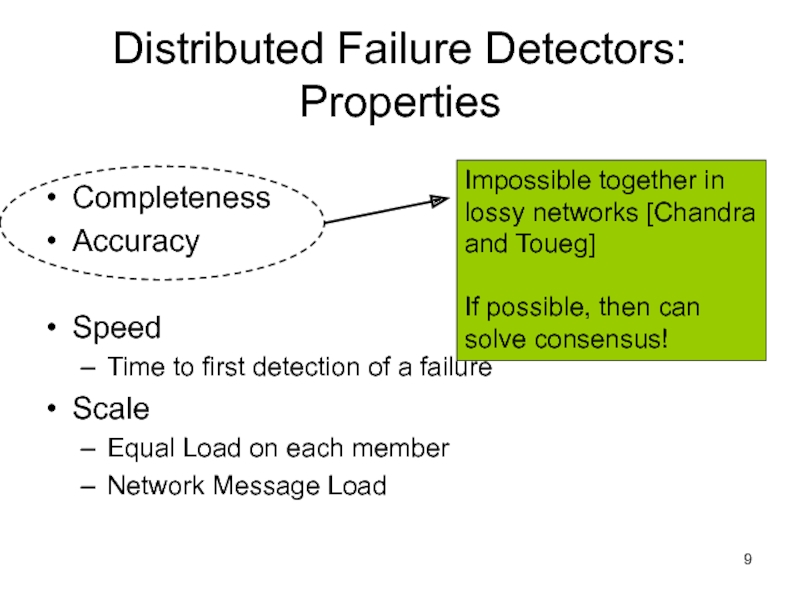

Slide 9: Distributed Failure Detectors: Properties

Completeness

Accuracy

Speed

Time to first detection of a failure

Scale

Equal Load on each member

Network Message Load

Slide 10: What Real Failure Detectors Prefer

Completeness: Guaranteed

Accuracy: Partial/Probabilistic guarantee

Speed

Time to first detection of a failure

Scale

Equal Load on each member

Network Message Load

Slide 11: Failure Detector Properties

Completeness: Guaranteed

Accuracy: Partial/Probabilistic guarantee

Speed

Time to first detection of a failure: time until some process detects the failure

Scale

Equal Load on each member

Network Message Load

Slide 12: Failure Detector Properties

Completeness: Guaranteed

Accuracy: Partial/Probabilistic guarantee

Speed

Time to first detection of a failure: time until some process detects the failure

Scale

Equal Load on each member

Network Message Load

No bottlenecks/single failure point

Slide 13: Failure Detector Properties

Completeness

Accuracy

Speed

Time to first detection of a failure

Scale

Equal Load on each member

Network Message Load

In spite of arbitrary simultaneous process failures

Slide 14: Centralized Heartbeating

…

pi, Heartbeat Seq. l++

pi

pj

Heartbeats sent periodically

If a heartbeat is not received from pi within the timeout, mark pi as failed
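The timeout rule above can be sketched as follows. This is a minimal illustration, not the lecture's code: the class name `HeartbeatMonitor`, its methods, and the use of caller-supplied timestamps are all assumptions made for the example. The monitor plays the role of the central process pj that receives heartbeats from every pi.

```python
class HeartbeatMonitor:
    """Central process: records the latest heartbeat from each member (hypothetical sketch)."""

    def __init__(self, timeout):
        self.timeout = timeout
        # member -> (highest sequence number seen, local time it arrived)
        self.last_seen = {}

    def on_heartbeat(self, member, seq, now):
        """Accept a heartbeat only if its sequence number is newer than the stored one."""
        prev = self.last_seen.get(member)
        if prev is None or seq > prev[0]:
            self.last_seen[member] = (seq, now)

    def failed_members(self, now):
        """Members whose latest heartbeat is older than the timeout."""
        return sorted(
            m for m, (_, seen_at) in self.last_seen.items()
            if now - seen_at > self.timeout
        )


monitor = HeartbeatMonitor(timeout=5.0)
monitor.on_heartbeat("p1", seq=1, now=0.0)
monitor.on_heartbeat("p2", seq=1, now=0.0)
monitor.on_heartbeat("p1", seq=2, now=4.0)   # p1 keeps beating, p2 goes silent
print(monitor.failed_members(now=6.0))
```

Every member sends to one process, so that process carries the whole load and is a single point of failure, which is the weakness the later heartbeating variants address.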

Slide 18: Gossip-Style Failure Detection

[Figure: nodes 1, 2, 4 and 3]

Protocol:

Nodes periodically gossip their membership list

On receipt, the local membership list is updated

Current time: 70 at node 2 (asynchronous clocks)

Membership list columns: Address, Heartbeat Counter, Time (local)

Fig and animation by: Dongyun Jin and Thuy Ngyuen

Slide 19: Gossip-Style Failure Detection

If the heartbeat has not increased for more than Tfail seconds, the member is considered failed

And after Tcleanup seconds, it will delete the member from the list

Why two different timeouts?

Slide 20: Gossip-Style Failure Detection

What if an entry pointing to a failed node is deleted right after Tfail seconds?

Fix: remember for another Tfail

[Figure: nodes 1, 2, 4 and 3]

Current time: 75 at node 2
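The merge rule and the two timeouts can be sketched as below. This is a minimal illustration under stated assumptions: the class `GossipMember` and its method names are hypothetical, time is passed in explicitly as a local clock value, and failed entries are simply left out of outgoing gossip until they are cleaned up.

```python
class GossipMember:
    """One node's membership table for gossip-style failure detection (hypothetical sketch)."""

    def __init__(self, address, t_fail, t_cleanup):
        self.address = address
        self.t_fail = t_fail
        self.t_cleanup = t_cleanup
        # address -> [heartbeat counter, local time the counter last increased]
        self.table = {address: [0, 0.0]}

    def beat(self, now):
        """Increment our own heartbeat counter."""
        self.table[self.address][0] += 1
        self.table[self.address][1] = now

    def is_failed(self, address, now):
        """A member is considered failed once its counter has not increased for t_fail."""
        return address != self.address and now - self.table[address][1] > self.t_fail

    def digest(self, now):
        """Entries to gossip: skip members currently considered failed."""
        return {a: hb for a, (hb, _) in self.table.items() if not self.is_failed(a, now)}

    def merge(self, remote, now):
        """On receipt, keep the higher heartbeat counter and stamp it with local time."""
        for address, hb in remote.items():
            entry = self.table.get(address)
            if entry is None:
                self.table[address] = [hb, now]
            elif hb > entry[0]:
                entry[0], entry[1] = hb, now

    def cleanup(self, now):
        """Delete entries only after a further t_cleanup beyond t_fail."""
        for address in list(self.table):
            if address != self.address and now - self.table[address][1] > self.t_fail + self.t_cleanup:
                del self.table[address]


node = GossipMember("n2", t_fail=10, t_cleanup=10)
node.merge({"n3": 5}, now=0)     # learn about n3
node.merge({"n3": 5}, now=12)    # stale gossip arrives after n3 was marked failed
```

Because the failed entry is still in the table at time 12, the stale counter 5 is not greater than the stored one and is ignored. Had the entry been deleted right after Tfail, the same stale gossip would have re-added n3 as if it were a new, live member.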

Slide 21: Multi-level Gossiping

Network topology is hierarchical

Random gossip target selection => core routers face O(N) load (Why?)

Fix: Select gossip target in subnet i, which contains ni nodes, with probability 1/ni

Router load=O(1)

Dissemination time=O(log(N))

Why?

What about latency for multi-level topologies?

[Gupta et al, TPDS 06]

[Figure: a router connecting two subnets of N/2 nodes each]
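One possible reading of the fix, sketched below, is that a node in subnet i gossips across the router with probability 1/ni and otherwise stays inside its own subnet, so each subnet sends about one cross-router gossip per round regardless of its size. That reading is an assumption, and the function names and data layout are hypothetical.

```python
import random


def pick_gossip_target(node, subnet_of, members_by_subnet, rng):
    """Pick a target: cross the router with probability 1/n_i, else stay local (assumed rule)."""
    my_subnet = subnet_of[node]
    local = [m for m in members_by_subnet[my_subnet] if m != node]
    remote = [m for s, ms in members_by_subnet.items() if s != my_subnet for m in ms]
    if not local and not remote:
        return None  # the node is alone in the group
    n_i = len(members_by_subnet[my_subnet])
    go_remote = bool(remote) and (not local or rng.random() < 1.0 / n_i)
    return rng.choice(remote if go_remote else local)


def mean_cross_subnet_gossips(members_by_subnet, rounds, rng):
    """Average number of gossips per round that have to cross the router."""
    subnet_of = {m: s for s, ms in members_by_subnet.items() for m in ms}
    crossings = 0
    for _ in range(rounds):
        for node in subnet_of:
            target = pick_gossip_target(node, subnet_of, members_by_subnet, rng)
            if subnet_of[target] != subnet_of[node]:
                crossings += 1
    return crossings / rounds


# Two subnets of 50 nodes each, as in the N/2 + N/2 picture.
subnets = {"a": list(range(0, 50)), "b": list(range(50, 100))}
load = mean_cross_subnet_gossips(subnets, rounds=300, rng=random.Random(7))
print(round(load, 2))
```

With uniformly random targets, roughly half of the 100 gossips per round would cross the router in this topology; under the assumed rule the average stays near 2, one per subnet.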

Slide 22: Analysis/Discussion

What happens if gossip period Tgossip is decreased?

A single heartbeat takes O(log(N)) time to propagate. So N heartbeats take:

O(log(N)) time to propagate, if the bandwidth allowed per node is O(N)

O(N.log(N)) time to propagate, if the bandwidth allowed per node is only O(1)

What about O(k) bandwidth?

What happens to Pmistake (false positive rate) as Tfail, Tcleanup is increased?

Tradeoff: False positive rate vs. detection time

Slide 23: Simulations

As # members increases, the detection time increases

As requirement is loosened, the detection time decreases

As # failed members increases, the detection time increases significantly

The algorithm is resilient to message loss

Slide 24: Failure Detector Properties …

Completeness

Accuracy

Speed

Time to first detection of a failure

Scale

Equal Load on each member

Network Message Load

Slide 25: …Are application-defined Requirements

Completeness: Guarantee always

Accuracy: Probability PM(T)

Speed

Time to first detection of a failure: T time units

Scale

Equal Load on each member

Network Message Load

Slide 26: …Are application-defined Requirements

Completeness: Guarantee always

Accuracy: Probability PM(T)

Speed

Time to first detection of a failure: T time units

Scale

Equal Load on each member

Network Message Load

N*L: Compare this across protocols

Slide 28: Gossip-style Heartbeating

Array of Heartbeat Seq. l for member subset

pi

Every tg units (= gossip period), send O(N) gossip message

T = logN * tg

L = N/tg = N*logN/T
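As a quick numeric check of these expressions, the sketch below compares the per-member load of all-to-all heartbeating (L = N/T, from the outline entry for slide 27) with gossip-style heartbeating (L = N*logN/T) at the same detection time T. The function names are hypothetical, and the base-2 logarithm is an assumption since the slide does not give a base.

```python
import math


def all_to_all_load(n, t):
    """All-to-all heartbeating: one heartbeat to each of N members every T units."""
    return n / t


def gossip_load(n, t):
    """Gossip-style: T = log(N) * tg, and one O(N)-sized gossip message every tg units."""
    tg = t / math.log2(n)   # assumption: log base 2
    return n / tg


n, t = 1024, 10.0
print(all_to_all_load(n, t))   # N/T
print(gossip_load(n, t))       # N*logN/T
```

For the same T, the gossip load is larger by a factor of logN, and both grow with N.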

Slide 29

Worst case load L* as a function of T, PM(T), N

Independent Message Loss probability pml

(proof in PODC 01 paper)

What’s the Best/Optimal we can do?

Slide 30: Heartbeating

Optimal L is independent of N (!)

All-to-all and gossip-based: sub-optimal

L=O(N/T)

try to achieve simultaneous detection at all processes

fail to distinguish Failure Detection and Dissemination components

Key:

Separate the two components

Use a non heartbeat-based Failure Detection Component
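To make the gap concrete, the sketch below compares the heartbeating load N/T with an optimal per-member load. The slide text here does not include the expression for L*; the form used, L* = log(PM(T)) / log(pml) * 1/T, is an assumption taken from how the cited PODC 01 result is usually quoted, and the function names are hypothetical.

```python
import math


def optimal_load(pm_t, p_ml, t):
    """Assumed optimal per-member load: log(PM(T)) / log(pml) * 1/T (does not involve N)."""
    return math.log(pm_t) / math.log(p_ml) / t


def heartbeat_load(n, t):
    """All-to-all heartbeating load, L = N/T."""
    return n / t


# Example requirement: mistake probability 1% within T = 1 time unit, 10% message loss.
for n in (100, 1000, 10000):
    print(n, heartbeat_load(n, 1.0), optimal_load(0.01, 0.1, 1.0))
```

Under this assumption the optimal load stays constant as N grows while the heartbeating load grows linearly, which is the motivation for separating failure detection from dissemination.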

Slide 32: SWIM versus Heartbeating

[Figure: Process Load plotted against First Detection Time, each axis marked Constant and O(N), with one point labeled SWIM and two points labeled Heartbeating; for fixed False Positive Rate and Message Loss Rate]

Slide 34: Accuracy, Load

PM(T) is exponential in -K. Also depends on pml (and pf)

See paper

for up to 15 % loss rates

Slide 35

Prob. of being pinged in T’ =

E[T] =

Completeness: Any alive member detects failure

Eventually

By using a trick: within worst case O(N) protocol periods

Detection Time
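The two quantities named on this slide can be estimated with a short calculation. The sketch assumes that in each protocol period T’ every one of the other N-1 members picks its ping target uniformly at random among its N-1 peers; that assumption, and the function names, are not taken from the slide, so the expressions may differ in form from the ones the slide showed.

```python
import math


def prob_pinged(n):
    """Probability that a given member is pinged by at least one of the other n-1 members."""
    return 1.0 - (1.0 - 1.0 / (n - 1)) ** (n - 1)


def expected_periods_until_pinged(n):
    """Mean of the geometric waiting time, in protocol periods."""
    return 1.0 / prob_pinged(n)


for n in (10, 100, 1000):
    print(n, round(prob_pinged(n), 4), round(expected_periods_until_pinged(n), 4))
```

As N grows, the probability approaches 1 - 1/e and the expected wait approaches e/(e-1) protocol periods, so under this assumption the expected time to first detection does not depend on group size.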

Slide 37: Dissemination Options

Multicast (Hardware / IP)

unreliable

multiple simultaneous multicasts

Point-to-point (TCP / UDP)

expensive

Zero extra messages: Piggyback on Failure Detector messages

Infection-style Dissemination

Slide 39: Infection-style Dissemination

Epidemic style dissemination

After protocol periods, processes would not have heard about an update

Maintain a buffer of recently joined/evicted processes

Piggyback from this buffer

Prefer recent updates

Buffer elements are garbage collected after a while

After protocol periods; this defines weak consistency
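The buffer described above might look like the following sketch. It is an illustration only: the class `PiggybackBuffer`, the `max_sends` garbage-collection limit, and the choice to treat "fewest transmissions so far" as a stand-in for "prefer recent updates" are all assumptions.

```python
class PiggybackBuffer:
    """Buffer of membership updates to piggyback on failure detector messages (hypothetical sketch)."""

    def __init__(self, max_sends):
        self.max_sends = max_sends
        # update -> number of times it has been piggybacked so far
        self.sends = {}

    def add(self, update):
        """Record a new join/evict update with zero transmissions."""
        self.sends[update] = 0

    def select(self, k):
        """Pick up to k updates, least-transmitted first, and garbage collect exhausted ones."""
        chosen = sorted(self.sends, key=lambda u: self.sends[u])[:k]
        for update in chosen:
            self.sends[update] += 1
            if self.sends[update] >= self.max_sends:
                del self.sends[update]
        return chosen


buf = PiggybackBuffer(max_sends=2)
buf.add("join:p7")
buf.add("failed:p3")
first = buf.select(1)    # one update fits on this ping
second = buf.select(1)   # the other update is now the least transmitted
third = buf.select(2)    # both reach max_sends and are dropped
```

No extra messages are sent: each ping or ack just carries whatever `select` returns.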

Slide 40: Suspicion Mechanism

False detections, due to

Perturbed processes

Packet losses, e.g., from congestion

Indirect pinging may not solve the problem

e.g., correlated message losses near pinged host

Key: suspect a process before declaring it as failed in the group

Slide 41: Suspicion Mechanism

pi :: State Machine for pj view element

[Figure: state machine with states Alive, Suspected and Failed. Labels: FD:: pi ping failed; Dissmn::(Suspect pj); Time out; FD:: pi ping success; Dissmn::(Alive pj); Dissmn (Suspect pj); Dissmn (Alive pj); Dissmn (Failed pj)]

Slide 42: Suspicion Mechanism

Distinguish multiple suspicions of a process

Per-process incarnation number

Inc # for pi can be incremented only by pi

e.g., when it receives a (Suspect, pi) message

Somewhat similar to DSDV

Higher inc# notifications over-ride lower inc#’s

Within an inc#: (Suspect, inc #) > (Alive, inc #)

Nothing overrides a (Failed, inc #)

See paper
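The override rules listed above can be written as a small comparison function. The names `Update` and `overrides` are hypothetical. The slide only says that nothing overrides a Failed notification; treating Failed as overriding any Alive or Suspect notification is an additional assumption made here.

```python
from dataclasses import dataclass

# Within one incarnation number, Suspect outranks Alive.
RANK = {"alive": 0, "suspect": 1}


@dataclass(frozen=True)
class Update:
    status: str   # "alive", "suspect" or "failed"
    inc: int      # incarnation number of the process the update is about


def overrides(new, old):
    """Return True if the notification `new` should replace `old` for the same process."""
    if old.status == "failed":
        return False                  # nothing overrides a Failed
    if new.status == "failed":
        return True                   # assumption: Failed overrides Alive/Suspect
    if new.inc != old.inc:
        return new.inc > old.inc      # higher incarnation overrides lower
    return RANK[new.status] > RANK[old.status]


# pj hears it is suspected at incarnation 3 and refutes with a higher incarnation.
suspect = Update("suspect", 3)
refute = Update("alive", 4)
print(overrides(refute, suspect))
```

Only pj itself may bump its incarnation number, so a stale suspicion can never outlive pj's own refutation.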

Slide 43: Time-bounded Completeness

Key: select each membership element once as a ping target in a traversal

Round-robin pinging

Random permutation of list after each traversal

Each failure is detected in worst case 2N-1 (local) protocol periods

Preserves FD properties
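Round-robin pinging with a reshuffle after each traversal can be sketched as below. The class name `PingTargetSelector` and its interface are hypothetical, and the sketch ignores members joining or leaving mid-traversal.

```python
import random


class PingTargetSelector:
    """Select each member exactly once per traversal, reshuffling between traversals."""

    def __init__(self, members, rng=None):
        self.rng = rng or random.Random()
        self.members = list(members)
        self.rng.shuffle(self.members)
        self.pos = 0

    def next_target(self):
        """Return the ping target for the next protocol period."""
        if self.pos >= len(self.members):
            # Traversal finished: take a fresh random permutation.
            self.rng.shuffle(self.members)
            self.pos = 0
        target = self.members[self.pos]
        self.pos += 1
        return target


selector = PingTargetSelector(range(5), rng=random.Random(1))
first_traversal = [selector.next_target() for _ in range(5)]
second_traversal = [selector.next_target() for _ in range(5)]
print(first_traversal, second_traversal)
```

A member can be first in one traversal and last in the next, so the gap between two pings of the same member is at most 2N-1 periods, which is where the worst-case bound comes from.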

Slide 44: Results from an Implementation

Current implementation

Win2K, uses Winsock 2

Uses only UDP messaging

900 semicolons of code (including testing)

Experimental platform

Galaxy cluster: diverse collection of commodity PCs

100 Mbps Ethernet

Default protocol settings

Protocol period=2 s; K=1; G.C. and Suspicion timeouts=3*ceil[log(N+1)]

No partial membership lists observed in experiments
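For a feel of what the default timeout formula gives, the sketch below evaluates 3*ceil[log(N+1)] for a few group sizes. Two things are assumptions: the logarithm base (base 2 is used as a default, the slide does not state one) and the unit (the result is treated as a count of protocol periods and converted to seconds with the 2 s period). The function name is hypothetical.

```python
import math


def suspicion_timeout_periods(n, base=2):
    """3 * ceil(log(N + 1)); base 2 is an assumed default, not stated on the slide."""
    return 3 * math.ceil(math.log2(n + 1) / math.log2(base))


PROTOCOL_PERIOD_S = 2  # default protocol period from the slide

for n in (7, 27, 63):
    periods = suspicion_timeout_periods(n)
    print(n, periods, periods * PROTOCOL_PERIOD_S)
```

The timeout grows only logarithmically with group size, so doubling the group adds at most a few protocol periods.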

Slide 47: T1

Time to First Detection of a process failure apparently uncorrelated to group size

T1+T2+T3

Slide 51: More discussion points

It turns out that with a partial membership list that is uniformly random, gossiping retains same properties as with complete membership lists

Why? (Think of the equation)

Partial membership protocols

SCAMP, Cyclon, TMAN, …

Gossip-style failure detection underlies

Astrolabe

Amazon EC2/S3 (rumored!)

SWIM used in

CoralCDN/Oasis anycast service: http://oasis.coralcdn.org

Mike Freedman used suspicion mechanism to blackmark frequently-failing nodes

Slide 52: Reminder – Due this Sunday April 3rd at 11.59 PM

Project Midterm Report due, 11.59 pm [12pt font, single-sided, 8 + 1 page Business Plan max]

Wiki Term Paper - Second Draft Due (Individual)

Reviews – you only have to submit reviews for 15 sessions (any 15 sessions) from 2/10 to 4/28. Keep track of your count! Take a breather!
