cedec2011_RealtimePBR_Implementation_e

Contents

- 1. cedec2011_RealtimePBR_Implementation_e

- 2. Image Based Lighting (IBL)Lighting that uses a

- 3. Physically Based IBLAd-hoc IBL vs. Physically-based IBLHas

- 4. Physically Based IBL advantagesGuarantees a rendering result

- 5. PBIBL implementationImplementing IBL as an approximation of

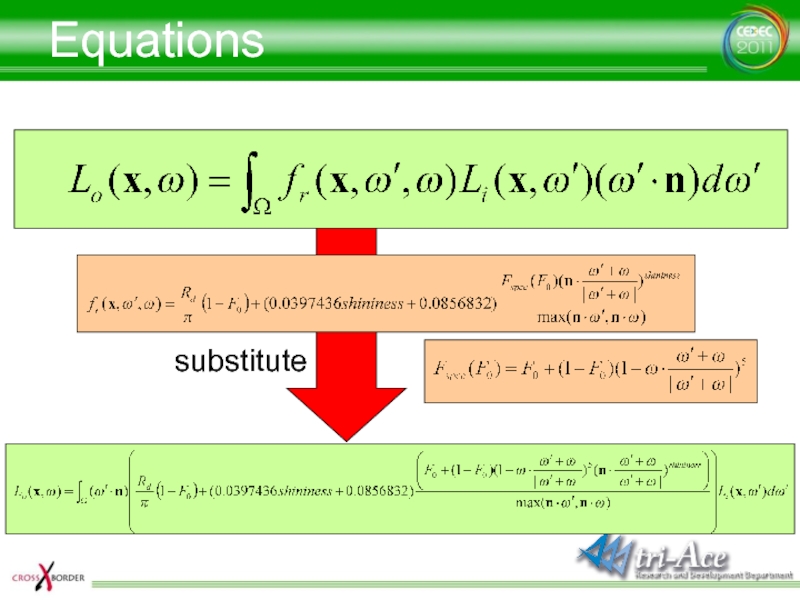

- 6. Equations substitute

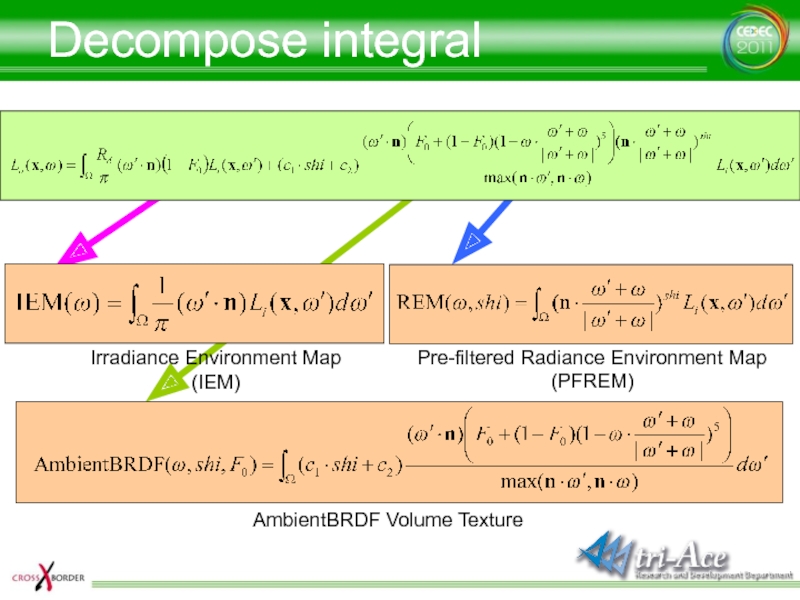

- 7. Decompose integral Irradiance Environment Map (IEM)Pre-filtered Radiance Environment Map (PFREM)AmbientBRDF Volume Texture

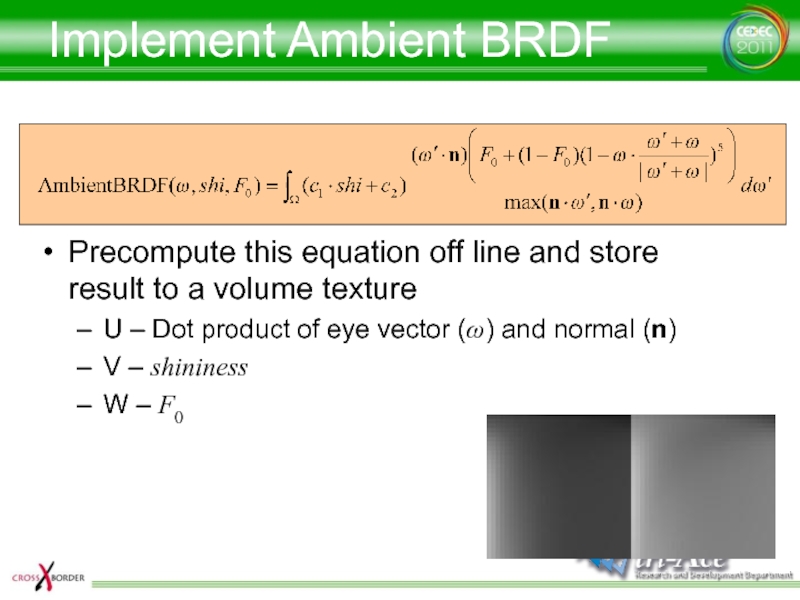

- 8. Implement Ambient BRDFPrecompute this equation off line

- 9. AmbientBRDF texture usageFetch the textureFor specular componentUse

- 10. AmbientBRDF comparisonAmbientBRDF OFFAmbientBRDF ON

- 11. Generate texturesUse AMD CubeMapGen?It can't be used

- 12. Generate texturesUse AMD CubeMapGen?It can't be used

- 13. Generate IEMImplement this equation straightforwardly on GPUDiffuse

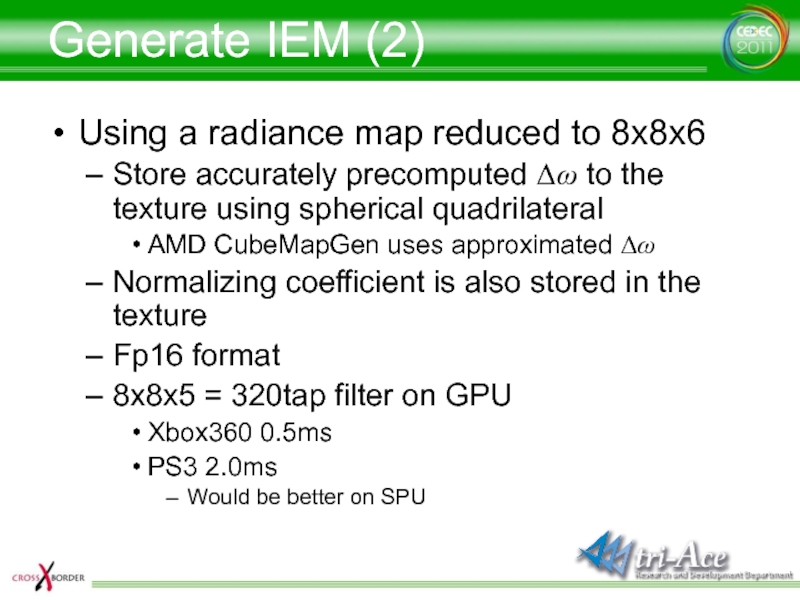

- 14. Generate IEM (2) Using a radiance map reduced

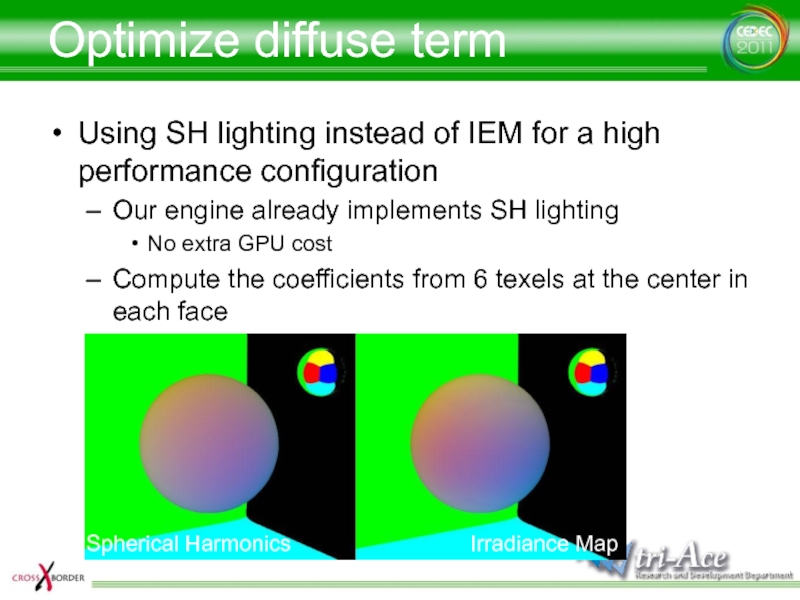

- 15. Optimize diffuse termUsing SH lighting instead of

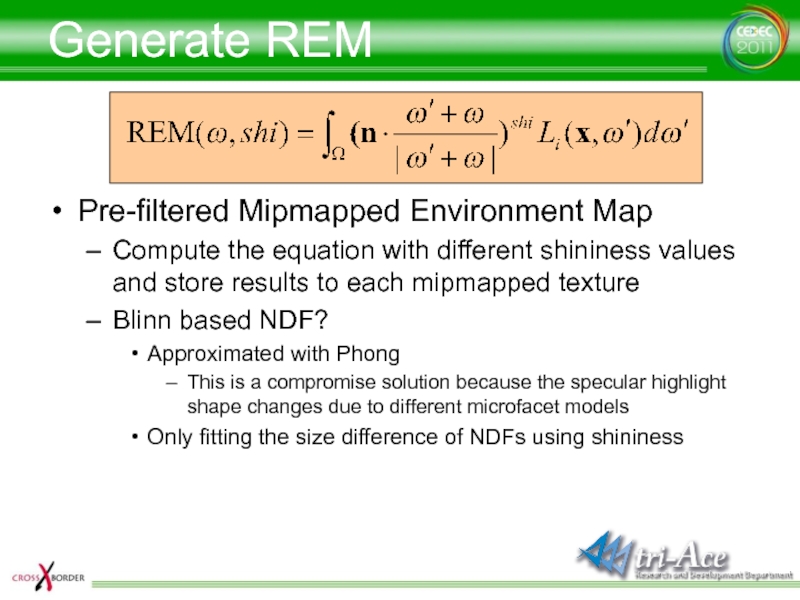

- 16. Generate REMPre-filtered Mipmapped Environment MapCompute the equation

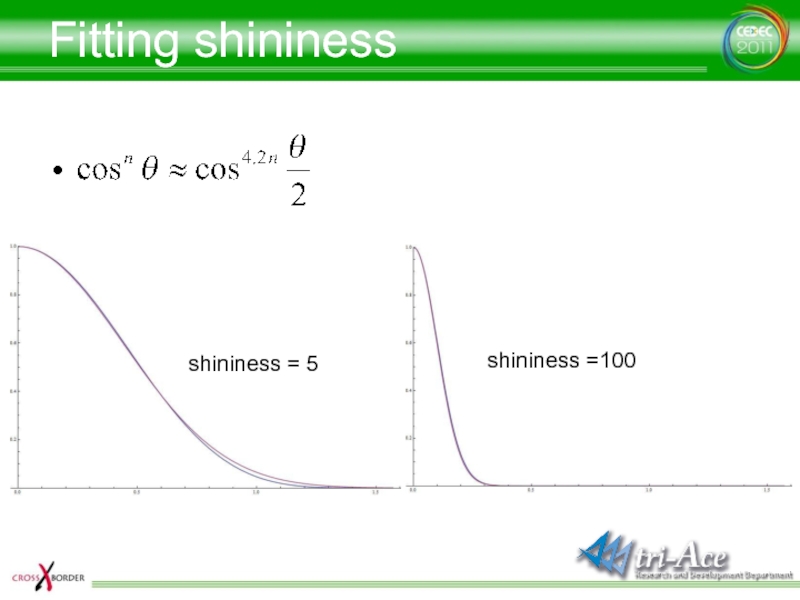

- 17. Fitting shininess

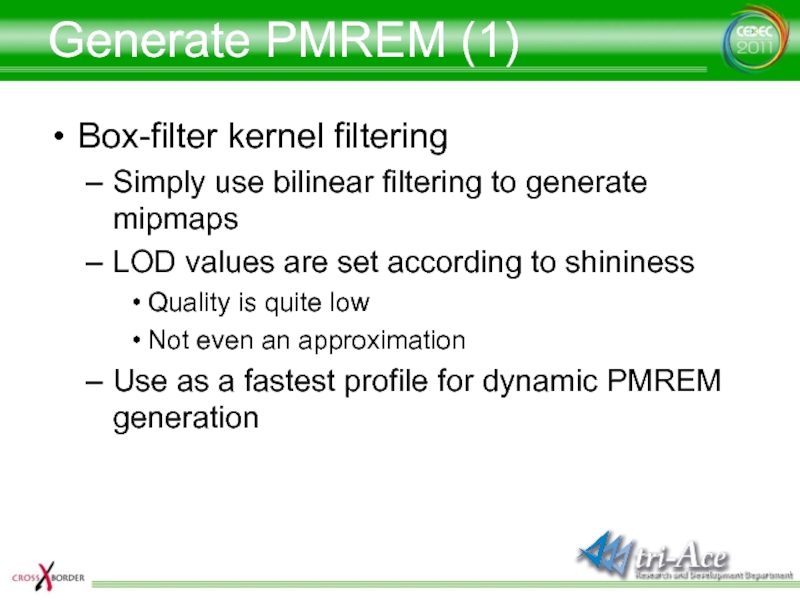

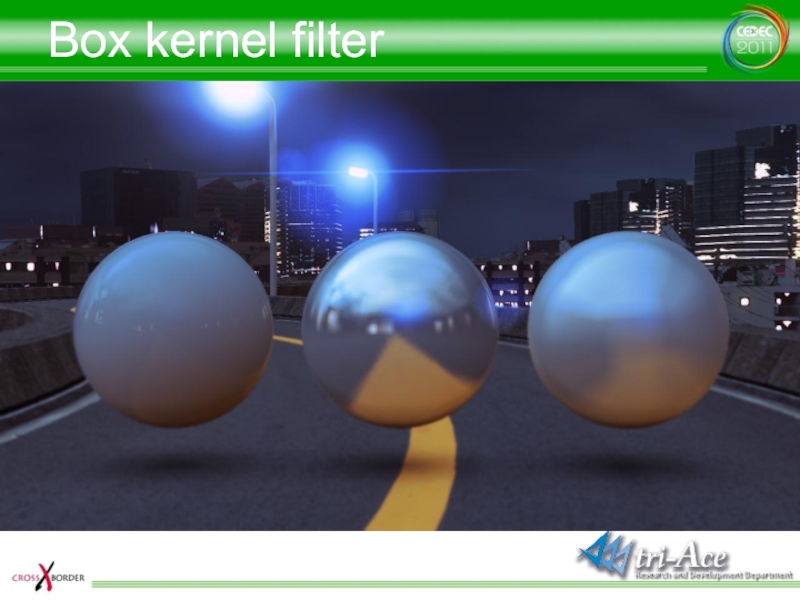

- 18. Generate PMREM (1)Box-filter kernel filteringSimply use bilinear

- 19. Box kernel filter

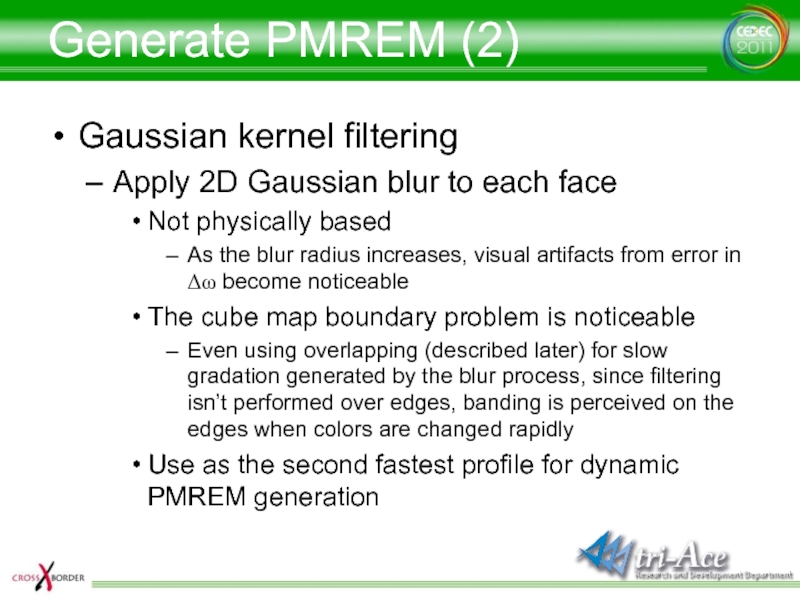

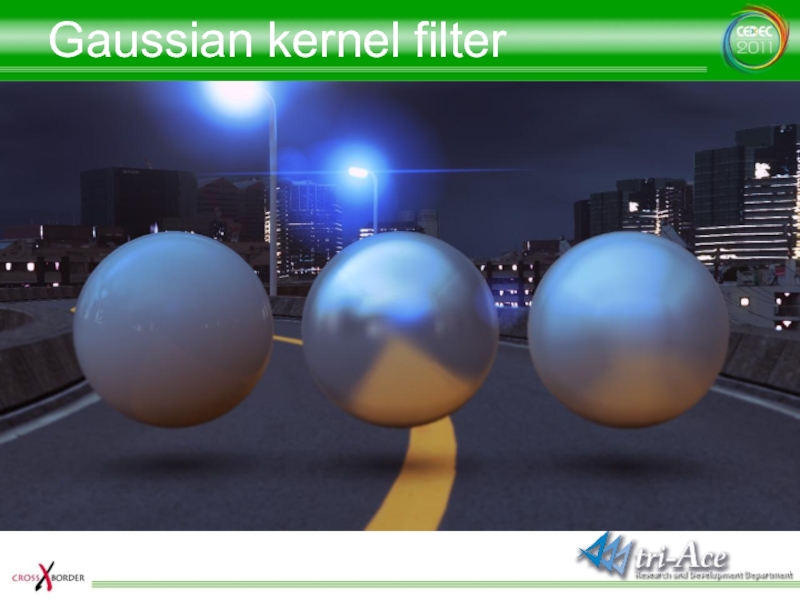

- 20. Generate PMREM (2)Gaussian kernel filteringApply 2D Gaussian

- 21. Gaussian kernel filter

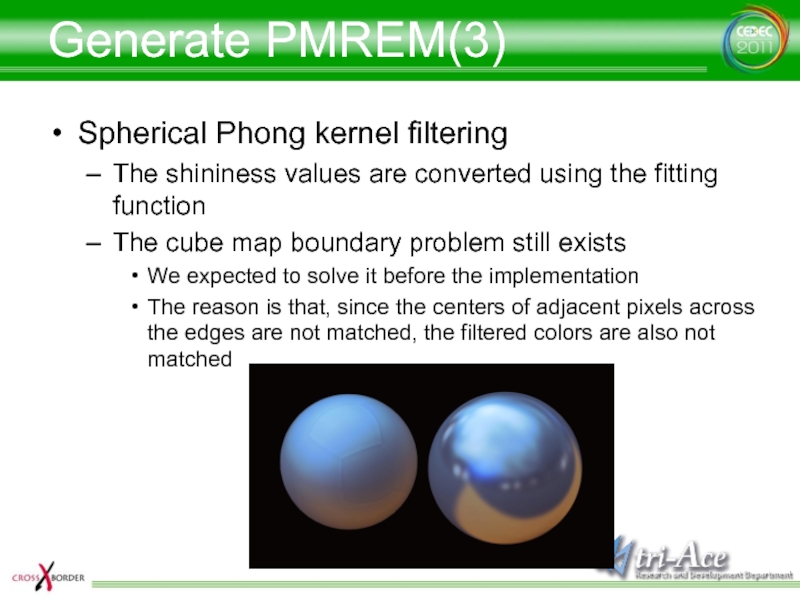

- 22. Generate PMREM(3)Spherical Phong kernel filteringThe shininess values

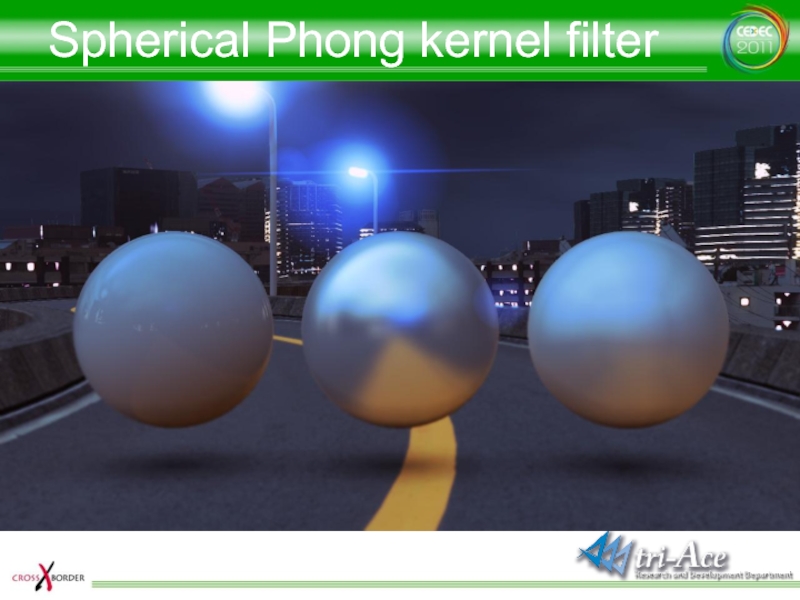

- 23. Spherical Phong kernel filter

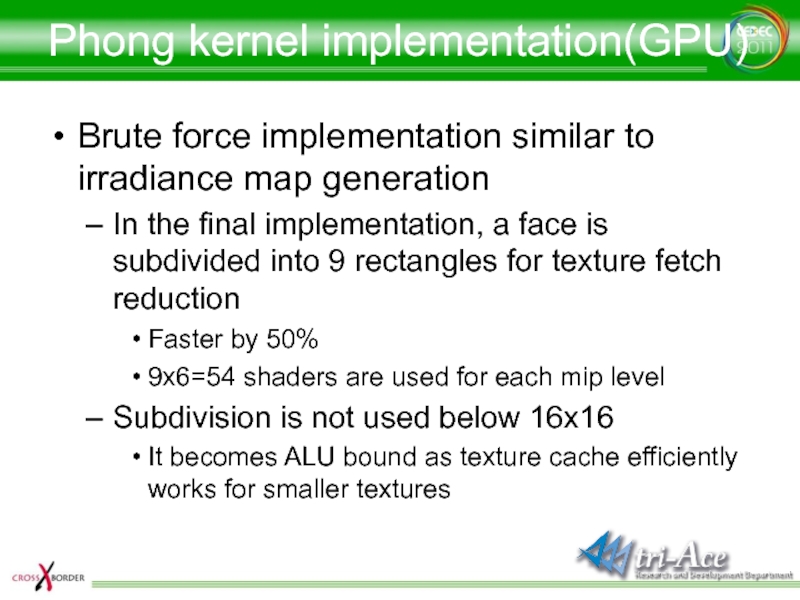

- 24. Phong kernel implementation(GPU)Brute force implementation similar to

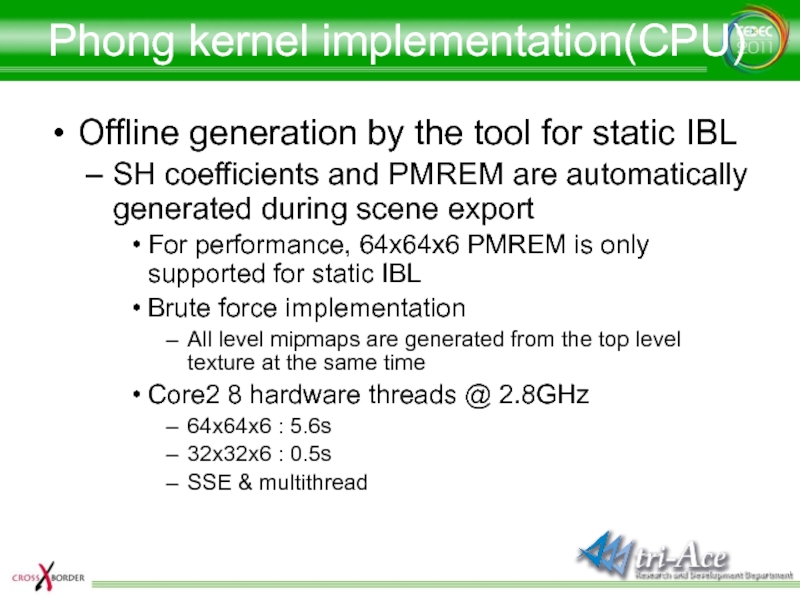

- 25. Phong kernel implementation(CPU)Offline generation by the tool

- 26. Generate PFREM (4)Poisson kernel filteringImplemented a faster

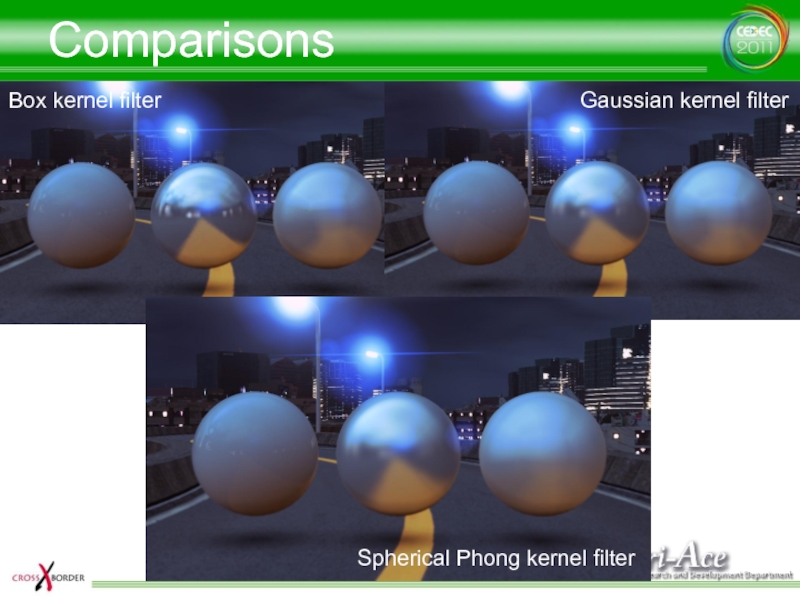

- 27. ComparisonsBox kernel filterGaussian kernel filterSpherical Phong kernel filterSpherical Phong kernel filter

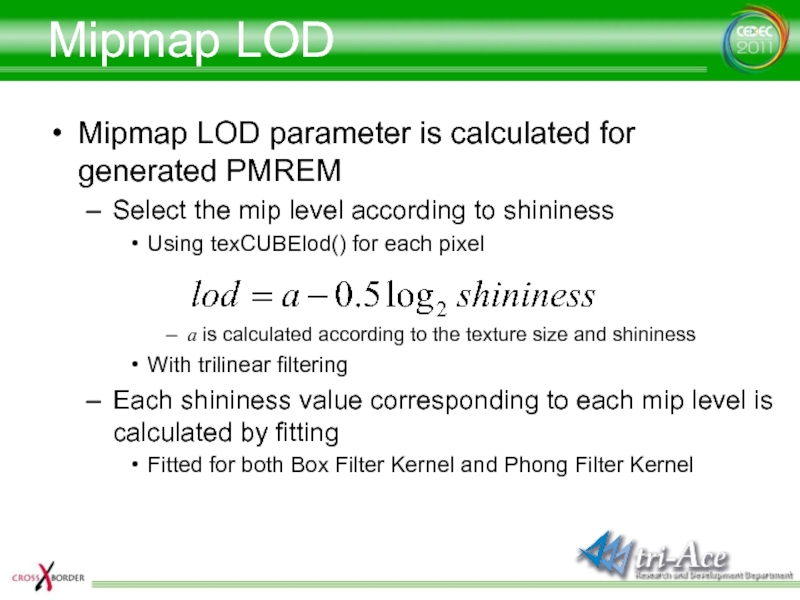

- 28. Mipmap LODMipmap LOD parameter is calculated for

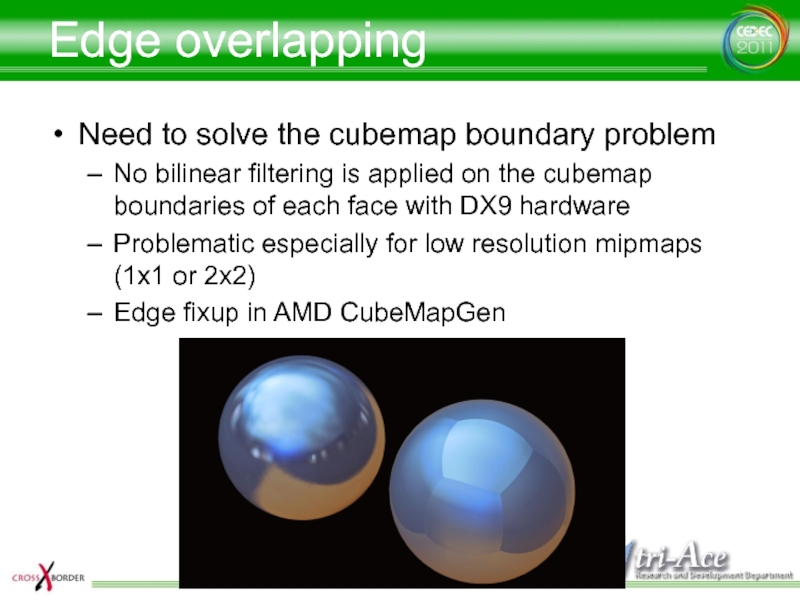

- 29. Edge overlappingNeed to solve the cubemap boundary

- 30. Edge overlapping (1)Blend adjacent boundaries by 50%Simplified

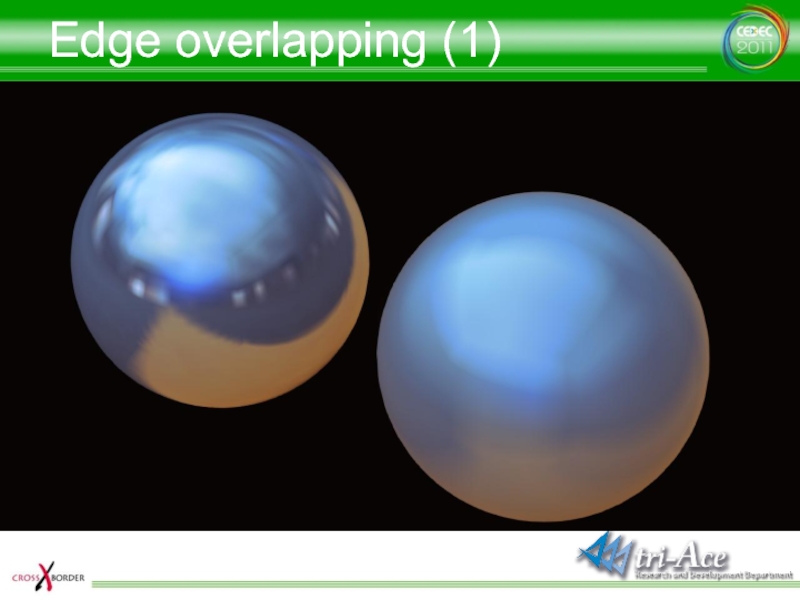

- 31. Edge overlapping (1)

- 32. Edge overlapping (2)Blend multiple texelsFor the next

- 33. Edge overlapping (3)4 texel blend?More blends don’t

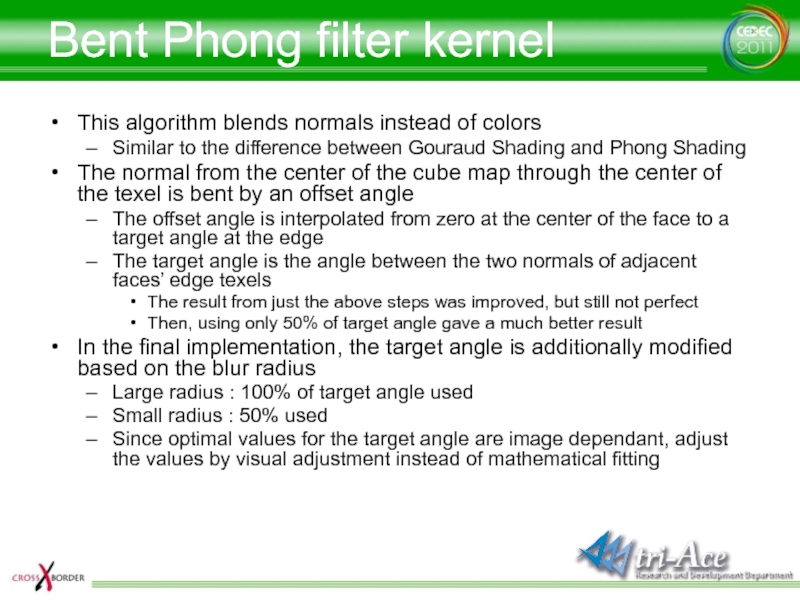

- 34. Bent Phong filter kernelThis algorithm blends normals

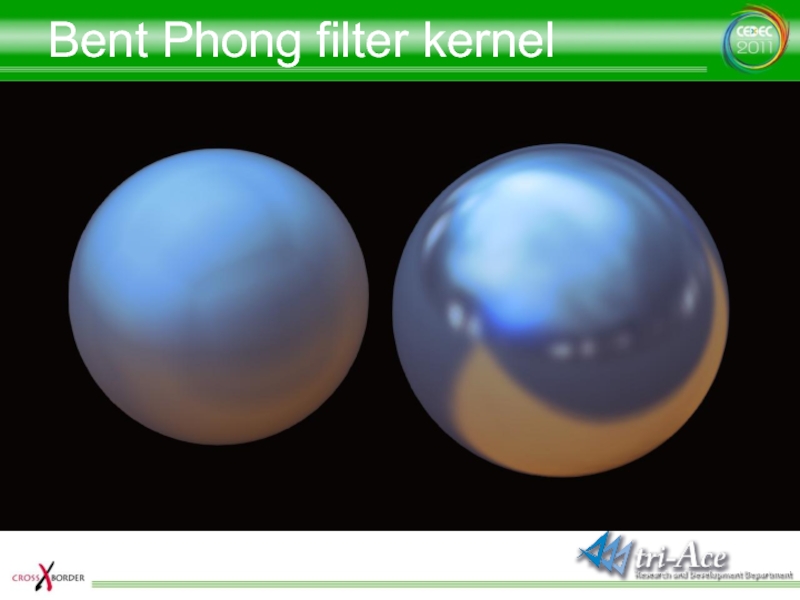

- 35. Bent Phong filter kernel

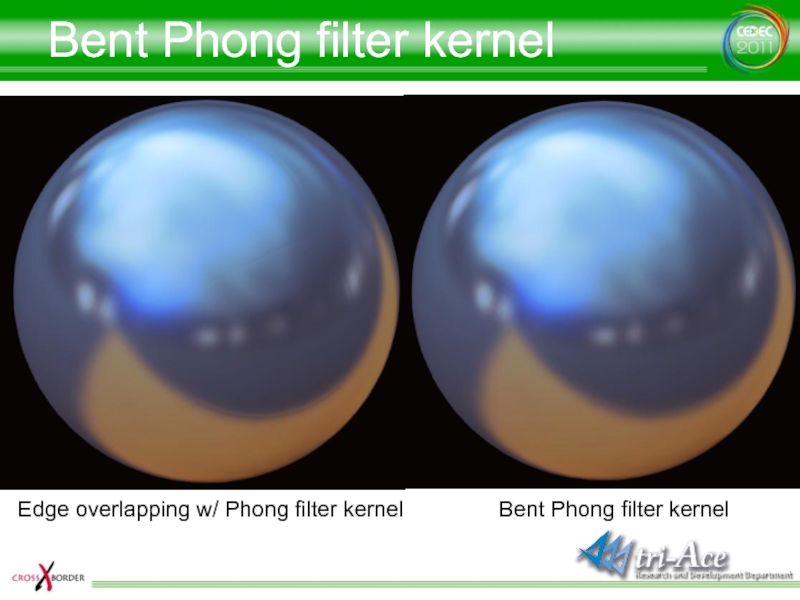

- 36. Bent Phong filter kernelBent Phong filter kernelEdge overlapping w/ Phong filter kernel

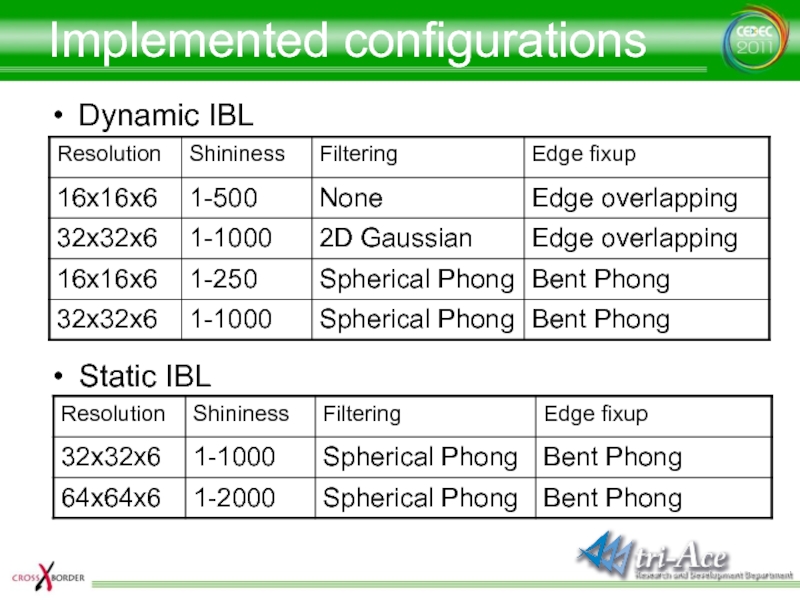

- 37. Implemented configurationsDynamic IBLStatic IBL

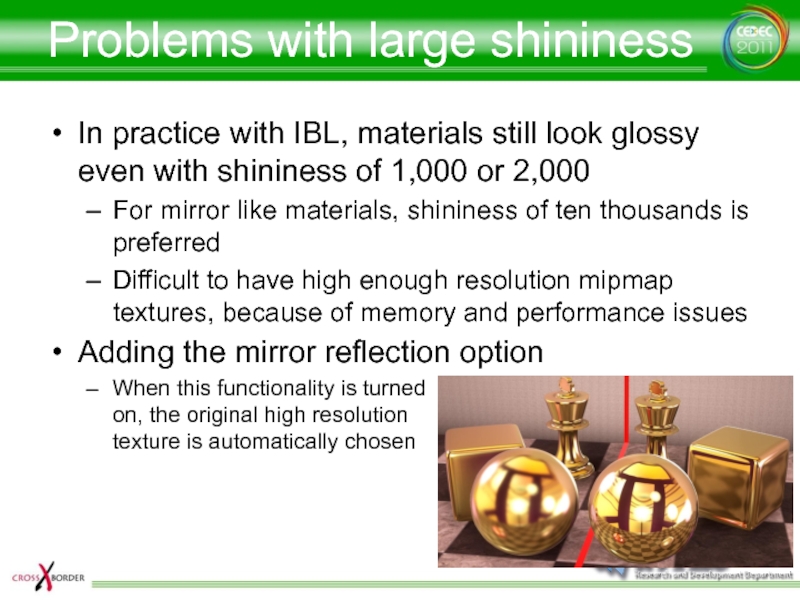

- 38. Problems with large shininessIn practice with IBL,

- 39. IBL BlendingBlending is necessary when using multiple

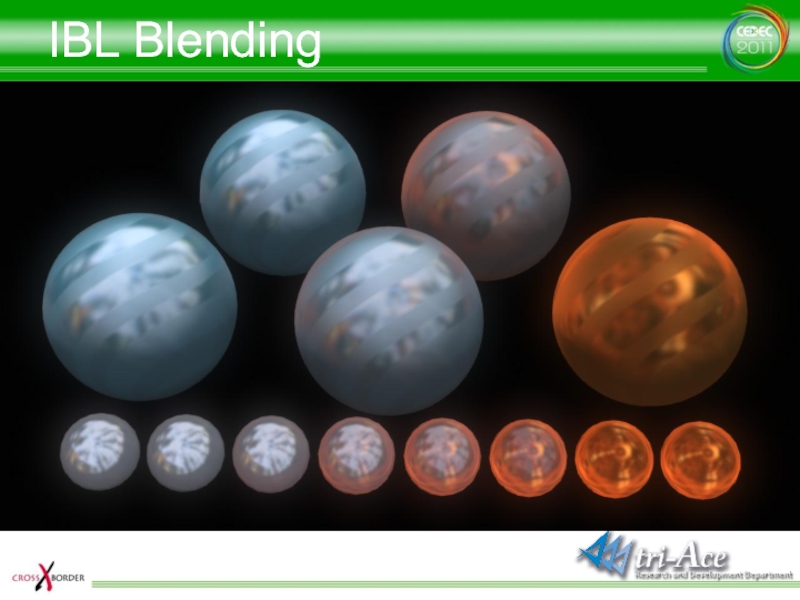

- 40. IBL Blending

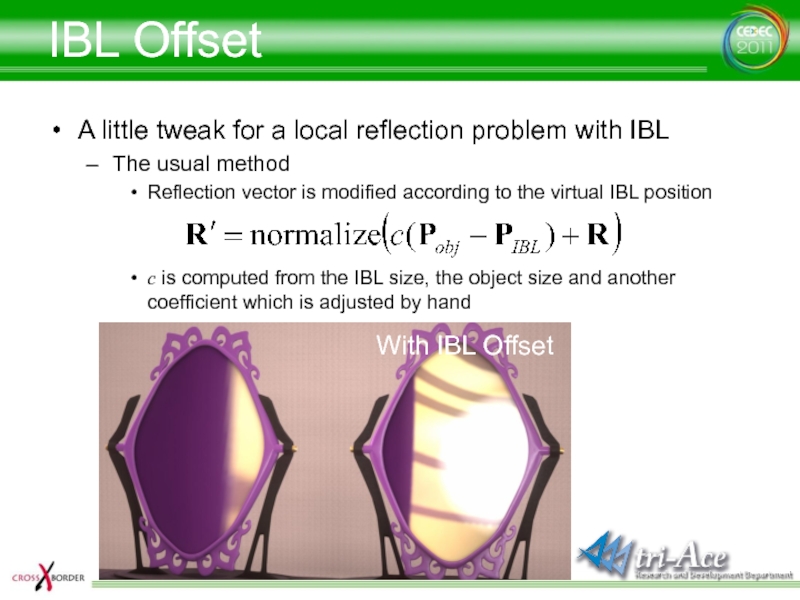

- 41. IBL OffsetA little tweak for a local

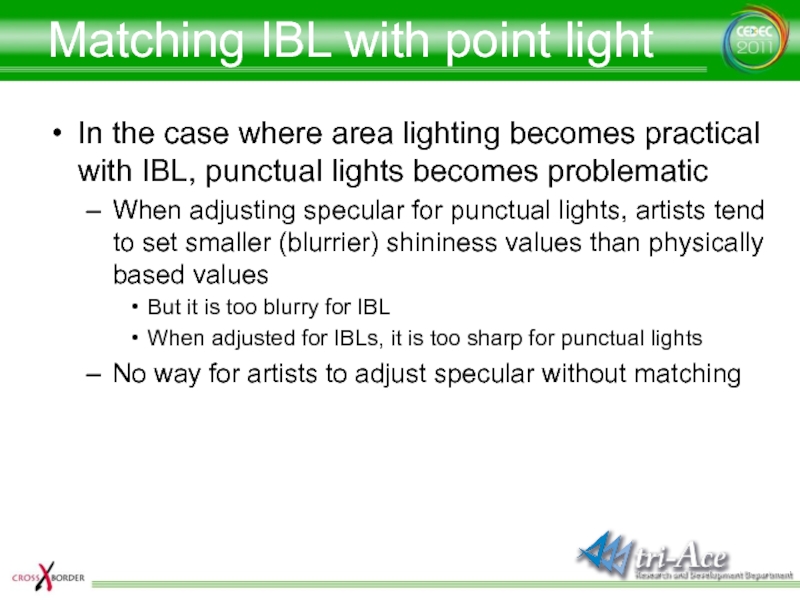

- 42. Matching IBL with point lightIn the case

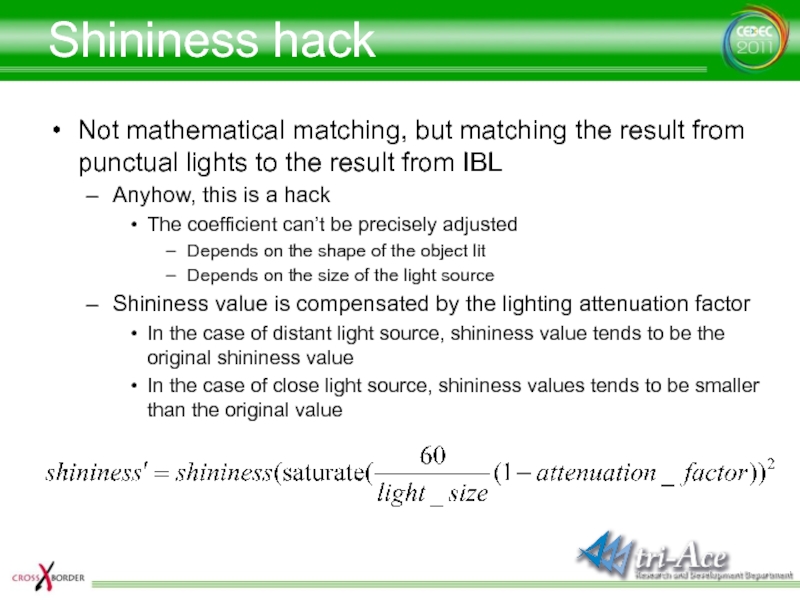

- 43. Shininess hackNot mathematical matching, but matching the

- 44. Shininess hack

- 45. Shininess hack

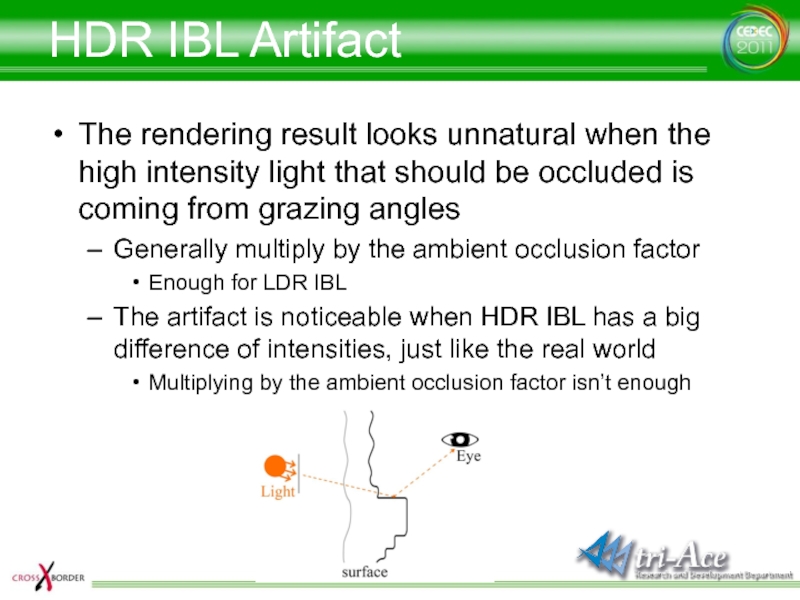

- 46. HDR IBL ArtifactThe rendering result looks unnatural

- 47. HDR IBL Artifact

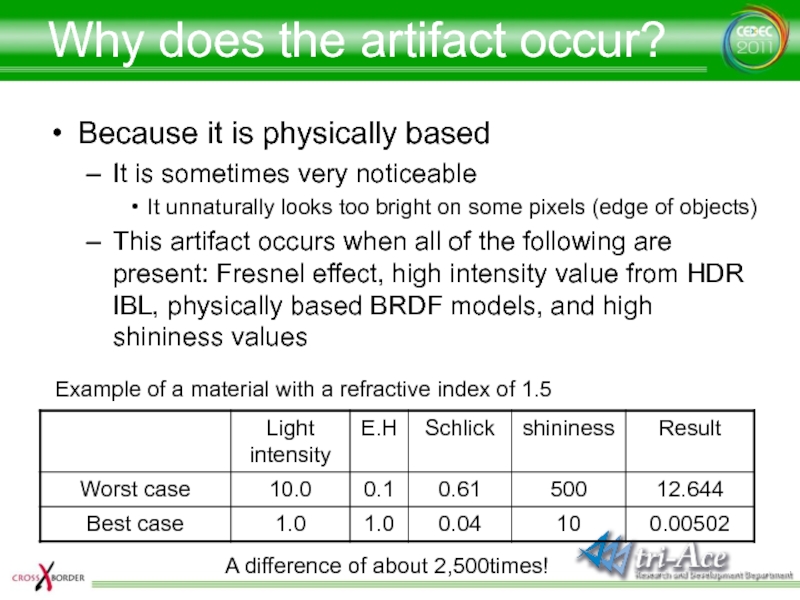

- 48. Why does the artifact occur?Because it is

- 49. Multiplying by AO factorIs not enoughEnough for

- 50. Novel Occlusion FactorNeed almost zero for occluded

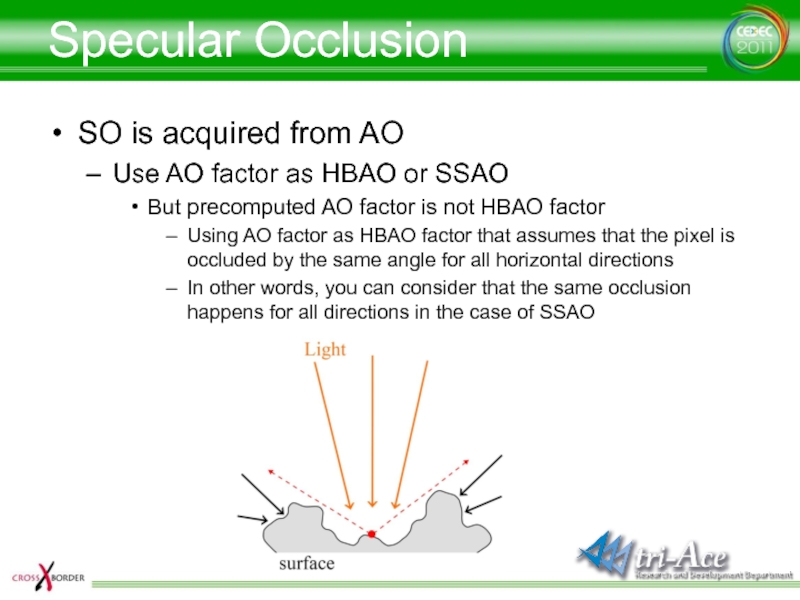

- 51. Specular OcclusionSO is acquired from AOUse AO

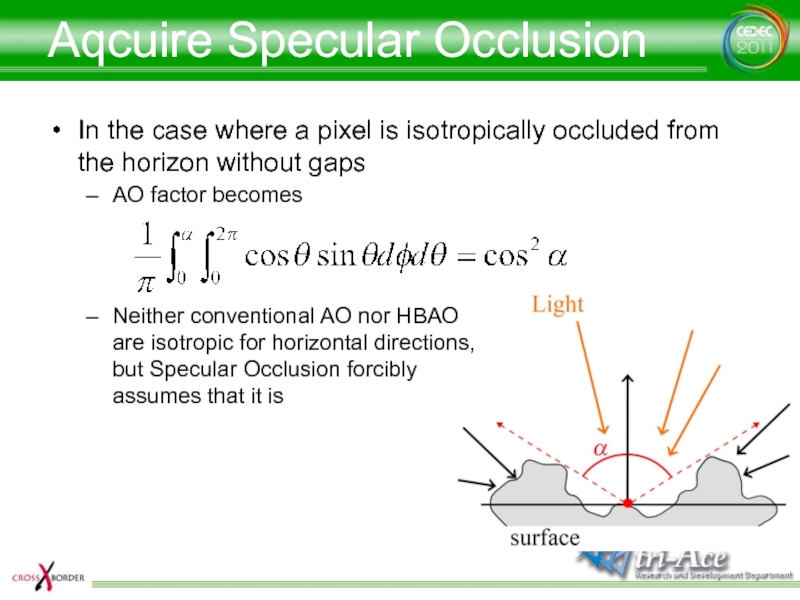

- 52. Aqcuire Specular OcclusionIn the case where a

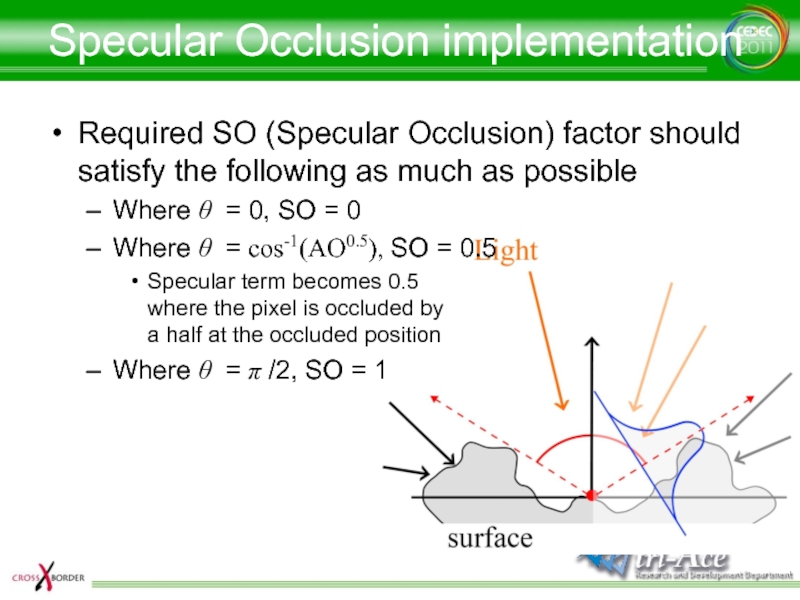

- 53. Specular Occlusion implementationRequired SO (Specular Occlusion) factor

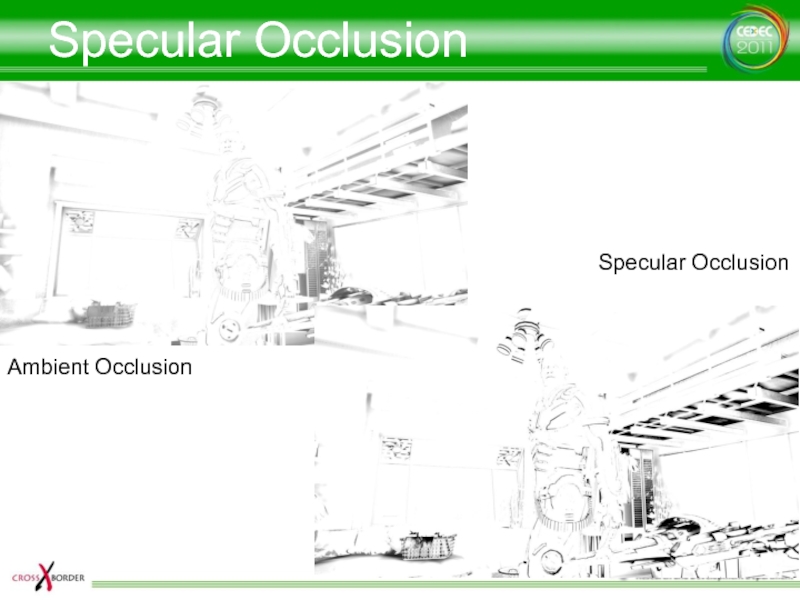

- 54. Specular OcclusionAmbient OcclusionSpecular Occlusion

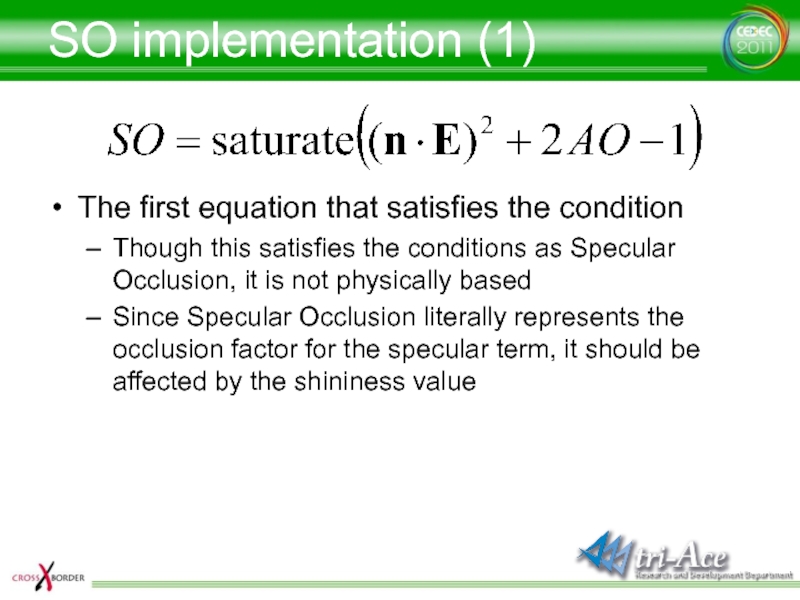

- 55. SO implementation (1)The first equation that satisfies

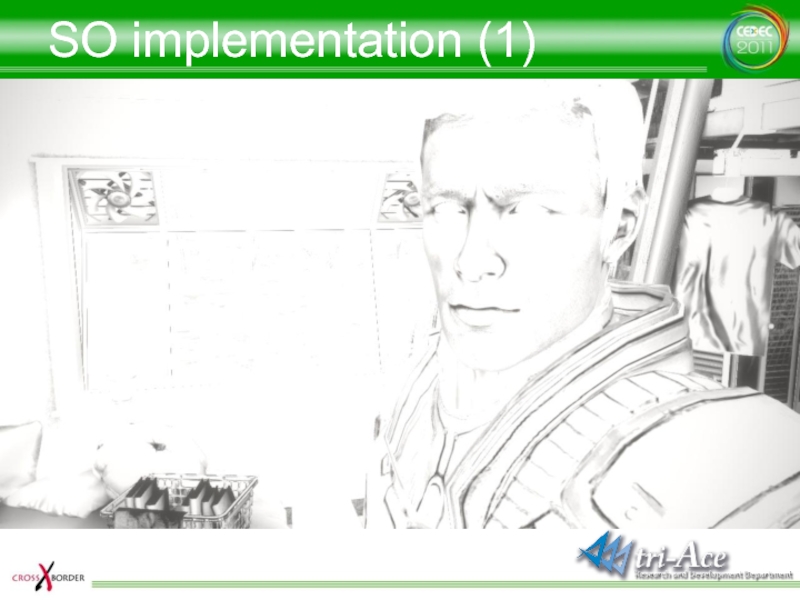

- 56. SO implementation (1)

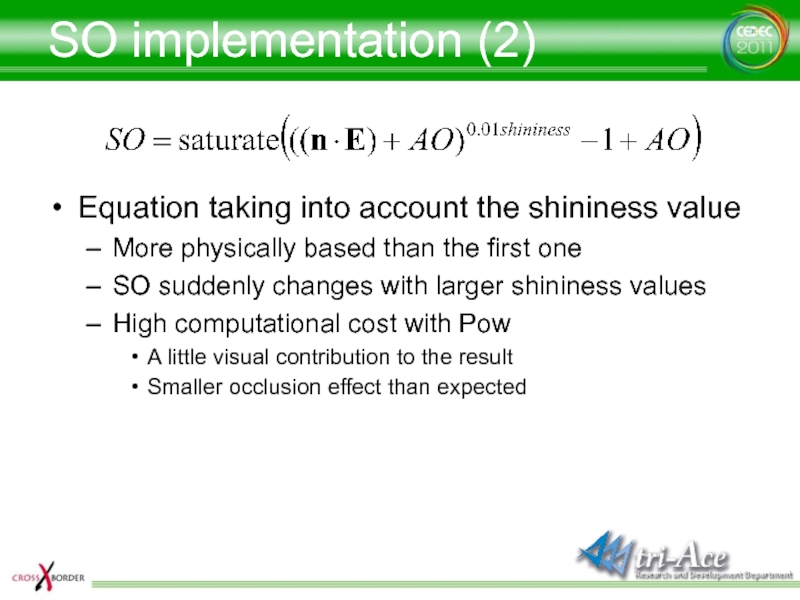

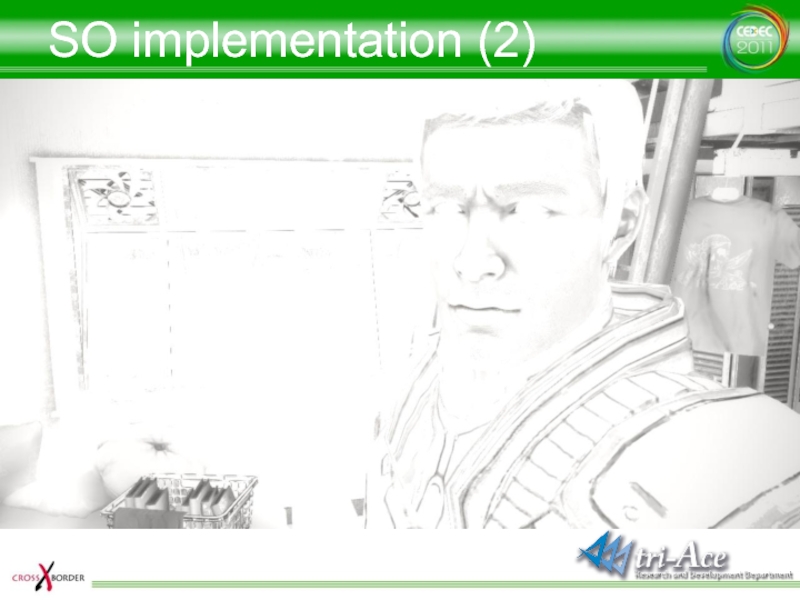

- 57. SO implementation (2)Equation taking into account the

- 58. SO implementation (2)

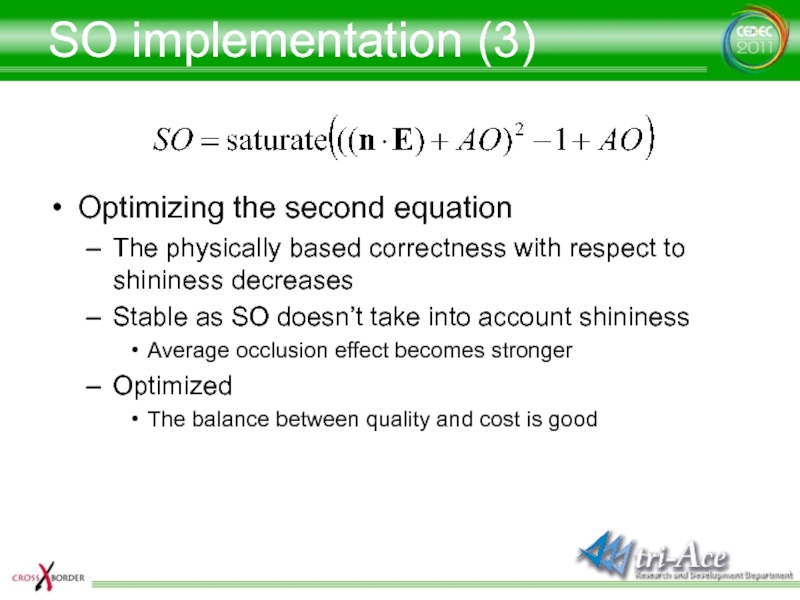

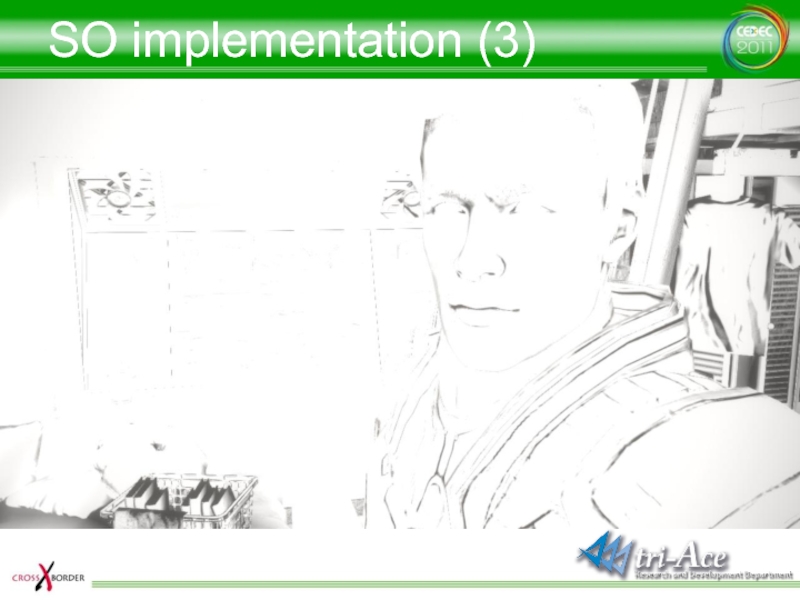

- 59. SO implementation (3)Optimizing the second equationThe physically

- 60. SO implementation (3)

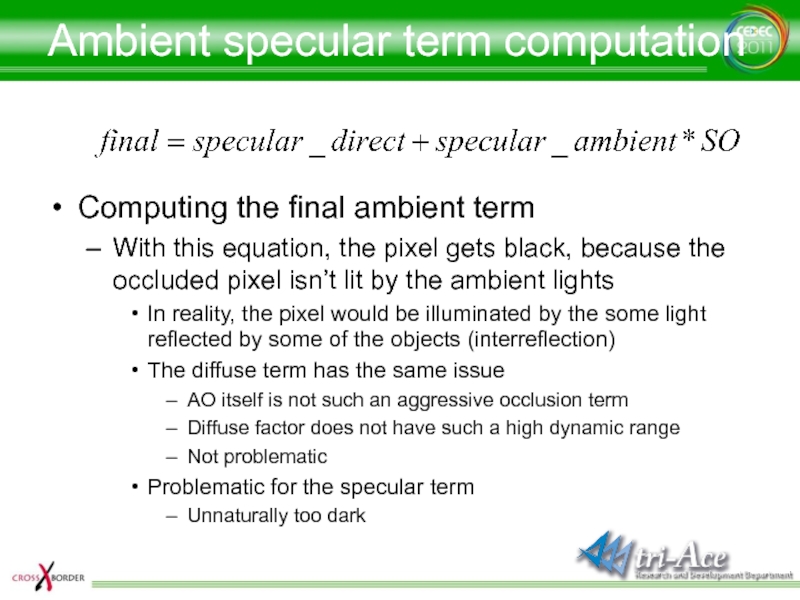

- 61. Ambient specular term computationComputing the final ambient

- 62. Ambient specular term computation

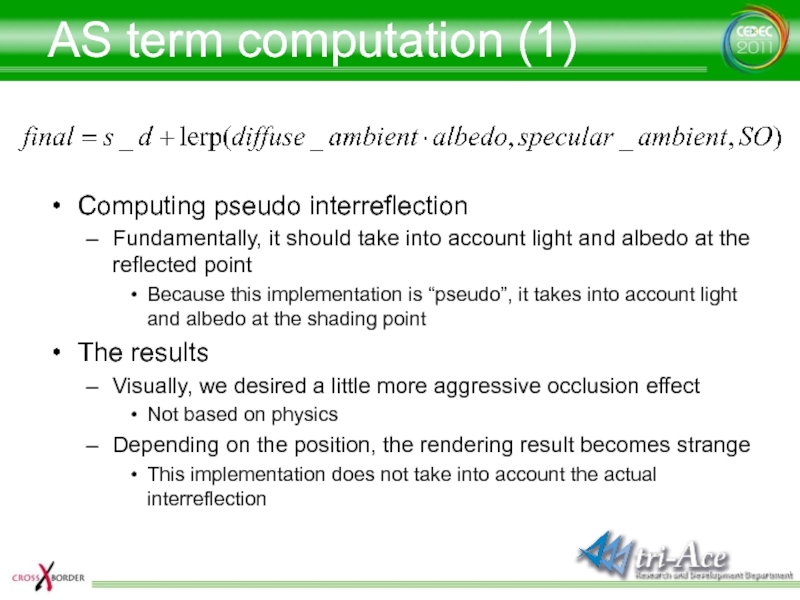

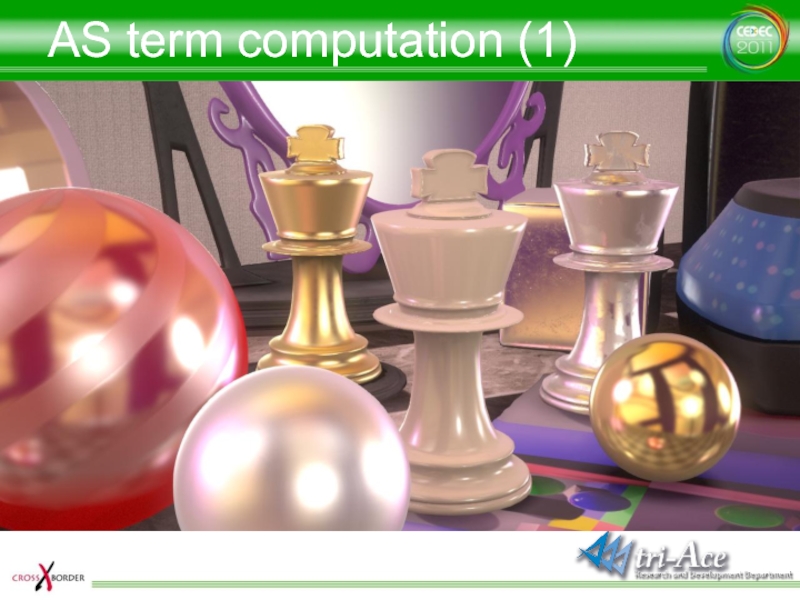

- 63. AS term computation (1)Computing pseudo interreflectionFundamentally, it

- 64. AS term computation (1)

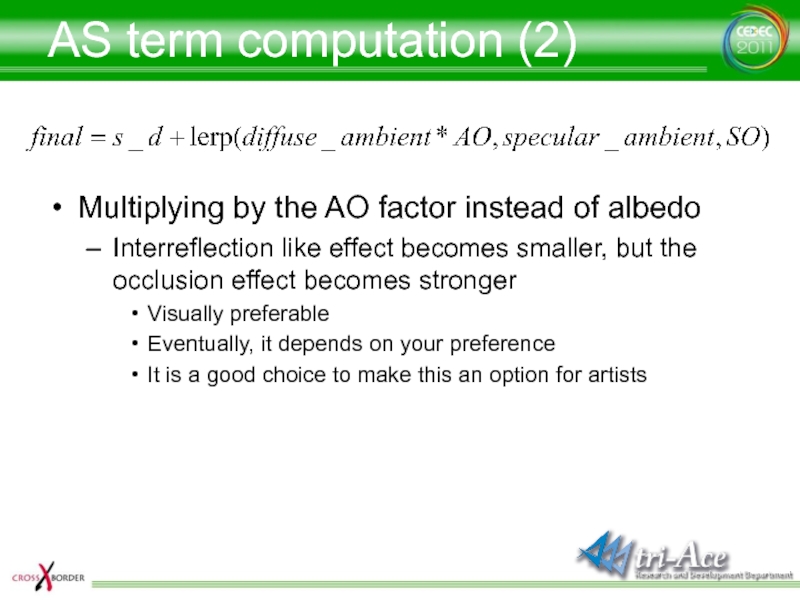

- 65. AS term computation (2)Multiplying by the AO

- 66. AS term computation (2)

- 67. AS term computation (3)Again, the AO factor

- 68. AS term computation (3)

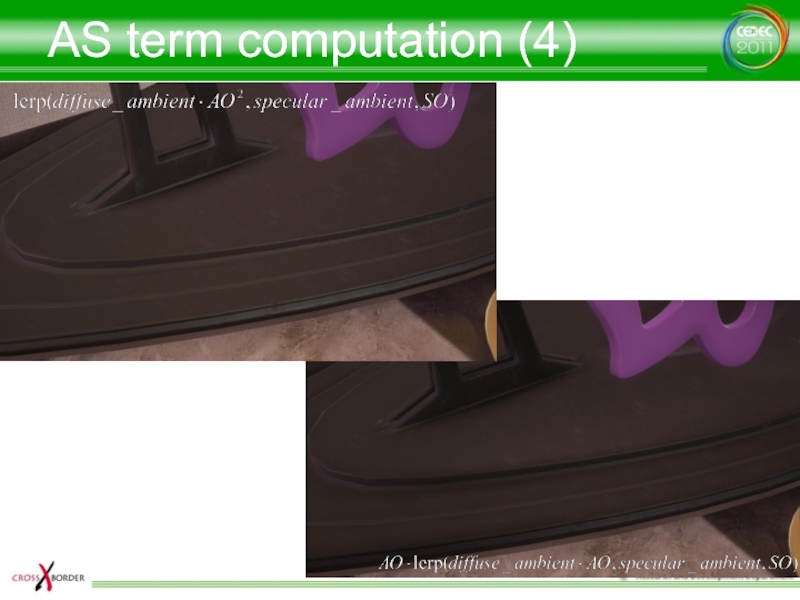

- 69. AS term computation (4)The secondary AO factor

- 70. AS term computation (4)

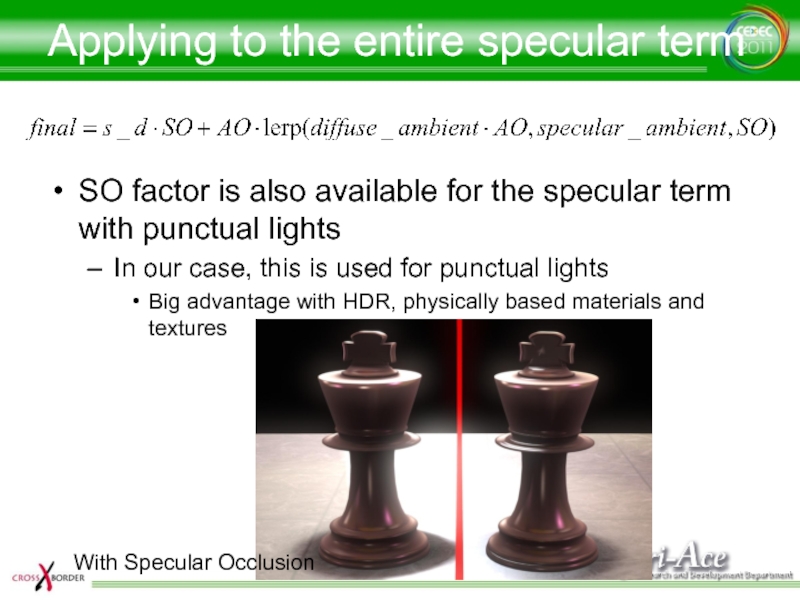

- 71. Applying to the entire specular termSO factor

- 72. W/o Specular Occlusion (Only AO)

- 73. With Specular Occlusion

- 74. IBL performancems @ 1280x720

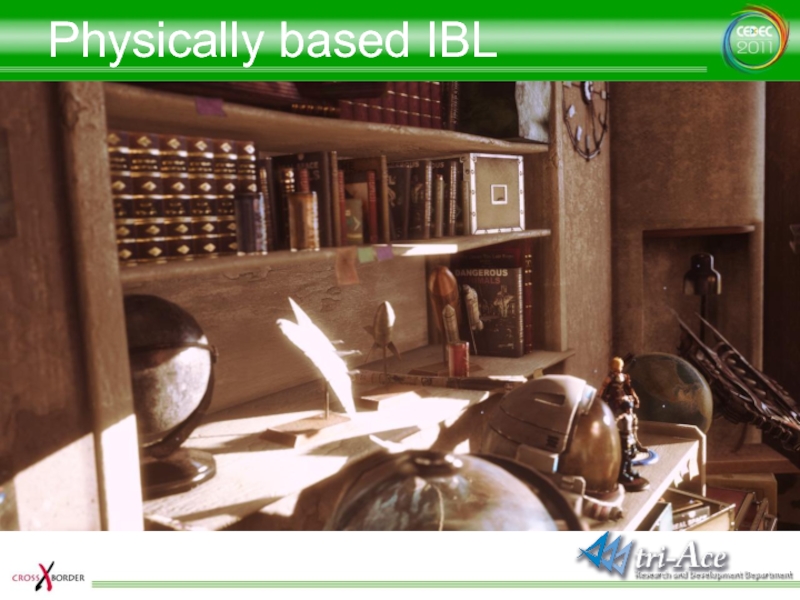

- 75. Physically based IBL

- 76. Physically based IBL

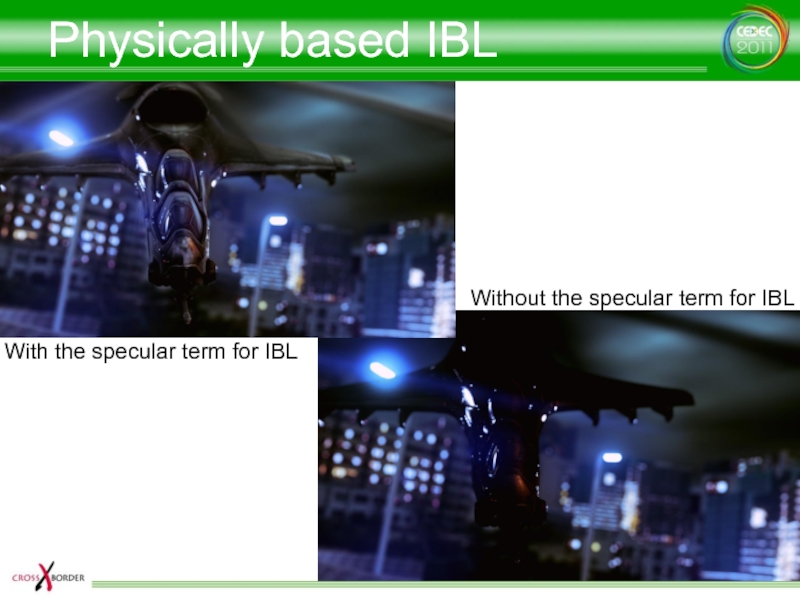

- 77. Physically based IBLWith the specular term for IBLWithout the specular term for IBL

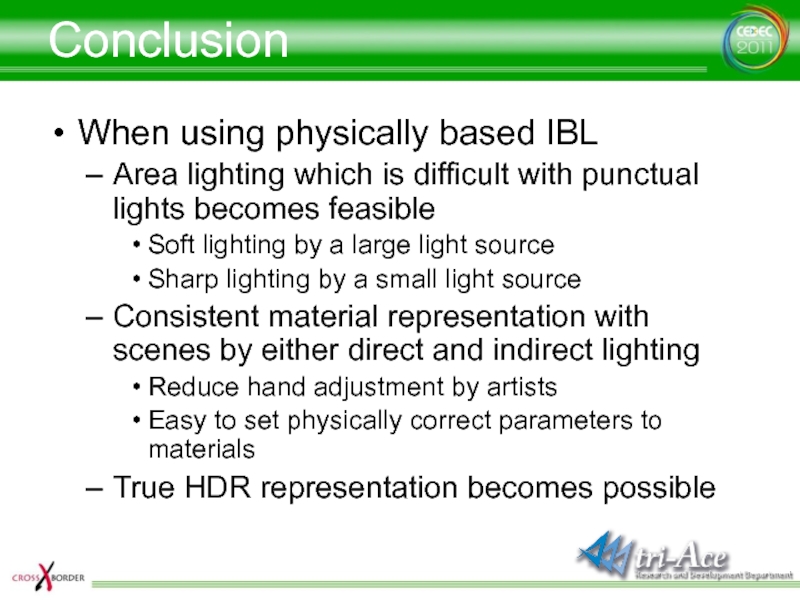

- 78. ConclusionWhen using physically based IBLArea lighting which

- 79. AcknowledgementsR&D department, tri-Ace, Inc.Tatsuya ShojiElliott Davis

- 80. Questions?http://research.tri-ace.com

- 81. Скачать презентанцию

Slides and text of this presentation

Slide 2: Image Based Lighting (IBL)

Lighting that uses a texture (an image) as a light source

In a broad sense, environment mapping is one of the techniques of Image Based Lighting

Slide 3: Physically Based IBL

Ad-hoc IBL vs. Physically-based IBL

Has the same differences and similarities as ad-hoc rendering and physically based rendering

Ad-hoc rendering

Each process needed for rendering is implemented one by one, ad hoc

Physically Based Rendering

The entire renderer is designed and built based on physical premises such as the Rendering Equation

Slide 4: Physically Based IBL advantages

Guarantees a rendering result that is close to shading under punctual light sources

Materials look the same in a scene dominated by direct lighting and in a scene dominated by indirect lighting

Consistency is preserved across different lighting

Artists spend less time tweaking parameters

Even in a scene dominated by indirect lighting, materials look realistic

No need to use an environment map for glossy objects

Just add an IBL light source

Slide 5: PBIBL implementation

Implementing IBL as an approximation of the rendering equation

Physically Based Image Based Lighting is one possible way to implement physically based rendering reasonably

Slide 7: Decompose integral

Irradiance Environment Map (IEM)

Pre-filtered Radiance Environment Map (PFREM)

AmbientBRDF Volume Texture

Slide 8: Implement Ambient BRDF

Precompute this equation offline and store the result in a volume texture

U – Dot product of eye vector (ω) and normal (n)

V – shininess

W – F0

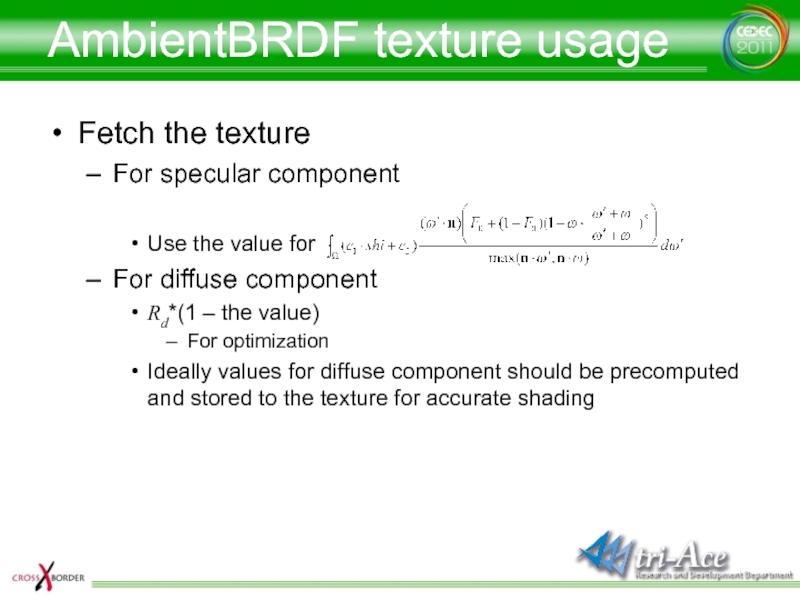

Slide 9: AmbientBRDF texture usage

Fetch the texture

For specular component

Use the value for

For diffuse component

Rd*(1 – the value)

For optimization

Ideally values for diffuse component should be precomputed and stored to the texture for accurate shading

Slide 11: Generate textures

Use AMD CubeMapGen?

It can't be used for real-time processing on multiple platforms, because it is released as a tool / library

Slide 12: Generate textures

Use AMD CubeMapGen?

It can't be used for real-time processing on multiple platforms, because it is released as a tool / library

Even so, the quality is not perfect and there is room for improvement

But it has become open-source

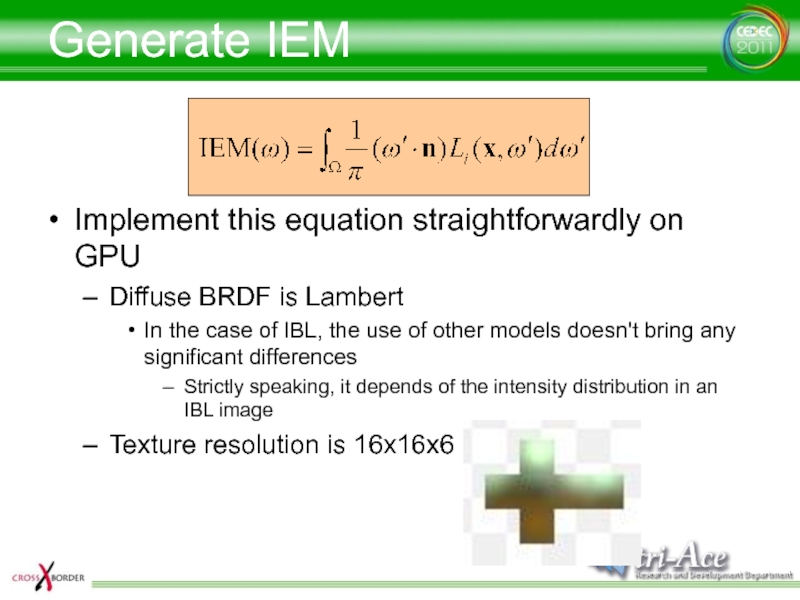

Slide 13: Generate IEM

Implement this equation straightforwardly on GPU

Diffuse BRDF is Lambert

In the case of IBL, the use of other models doesn't bring any significant difference

Strictly speaking, it depends on the intensity distribution in an IBL image

Texture resolution is 16x16x6

Slide 14: Generate IEM (2)

Using a radiance map reduced to 8x8x6

Store accurately precomputed Δω in the texture using spherical quadrilaterals

AMD CubeMapGen uses approximated Δω

Normalizing coefficient is also stored in the texture

Fp16 format

8x8x5 = 320-tap filter on GPU

Xbox360 0.5ms

PS3 2.0ms

Would be better on SPU
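The brute-force irradiance filter described on the two slides above can be sketched on the CPU as follows. This is a minimal illustration, not the presenters' shader: the face orientation table, the scalar radiance layout `radiance[face][row][column]`, and the function names are all assumptions. The texel solid angle uses the standard closed form for a rectangle projected onto the unit sphere, and the result is divided by the summed weights to play the role of the stored normalizing coefficient.

```python
import math

def _area(x, y):
    # Solid angle of the rectangle spanning (0, 0)-(x, y) on the plane z = 1.
    return math.atan2(x * y, math.sqrt(x * x + y * y + 1.0))

def texel_solid_angle(i, j, size):
    """Exact solid angle of texel (i, j) on a size x size cube face."""
    x0, x1 = 2.0 * i / size - 1.0, 2.0 * (i + 1) / size - 1.0
    y0, y1 = 2.0 * j / size - 1.0, 2.0 * (j + 1) / size - 1.0
    return _area(x0, y0) - _area(x0, y1) - _area(x1, y0) + _area(x1, y1)

def texel_direction(face, i, j, size):
    """Unit vector through the texel center (assumed face order: +X -X +Y -Y +Z -Z)."""
    u = 2.0 * (i + 0.5) / size - 1.0
    v = 2.0 * (j + 0.5) / size - 1.0
    x, y, z = [(1.0, -v, -u), (-1.0, -v, u), (u, 1.0, v),
               (u, -1.0, -v), (u, -v, 1.0), (-u, -v, -1.0)][face]
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)

def irradiance(normal, radiance, size):
    """Normalized cosine-weighted (Lambert) average of the radiance map."""
    total = 0.0
    weight_sum = 0.0
    for face in range(6):
        for j in range(size):
            for i in range(size):
                d = texel_direction(face, i, j, size)
                cos_theta = max(0.0, sum(a * b for a, b in zip(normal, d)))
                weight = cos_theta * texel_solid_angle(i, j, size)
                total += weight * radiance[face][j][i]
                weight_sum += weight
    return total / weight_sum
```

With a constant radiance map the normalized result is 1 for every normal, which is a convenient sanity check for the weights.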

Slide 15: Optimize diffuse term

Using SH lighting instead of IEM for a high performance configuration

Our engine already implements SH lighting

No extra GPU cost

Compute the coefficients from 6 texels at the center of each face

Irradiance Map

Spherical Harmonics
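Projecting six face-center texels onto spherical harmonics can be sketched as below. The slide does not state the SH order or the weighting, so this assumes bands 0 and 1 only (4 coefficients), scalar radiance, and an equal solid angle of 4π/6 per sample; the names are hypothetical. The sketch reconstructs radiance only and leaves any cosine convolution to the engine's existing SH lighting.

```python
import math

Y00 = 0.5 * math.sqrt(1.0 / math.pi)       # band 0 constant
Y1 = math.sqrt(3.0 / (4.0 * math.pi))      # band 1 scale

# Directions through the centers of the +X, -X, +Y, -Y, +Z, -Z faces.
AXES = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def sh_basis(d):
    """Real SH basis for bands 0 and 1 at unit direction d."""
    x, y, z = d
    return (Y00, Y1 * y, Y1 * z, Y1 * x)

def project_six_texels(center_texels):
    """Project six center texels (one per face, in AXES order) onto 4 SH coefficients."""
    weight = 4.0 * math.pi / 6.0  # assumed equal solid angle per sample
    coeffs = [0.0, 0.0, 0.0, 0.0]
    for value, d in zip(center_texels, AXES):
        for k, basis in enumerate(sh_basis(d)):
            coeffs[k] += value * basis * weight
    return coeffs

def evaluate(coeffs, d):
    """Reconstruct the low-frequency radiance in direction d."""
    return sum(c * b for c, b in zip(coeffs, sh_basis(d)))
```

For these six directions the 4-coefficient basis is orthonormal under the equal-weight sum, so a constant environment reconstructs exactly.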

Slide 16: Generate REM

Pre-filtered Mipmapped Environment Map

Compute the equation with different shininess values and store the results in each mip level of the texture

Blinn based NDF?

Approximated with Phong

This is a compromise solution because the specular highlight shape changes due to different microfacet models

Only fitting the size difference of NDFs using shininess

Slide 18: Generate PMREM (1)

Box-filter kernel filtering

Simply use bilinear filtering to generate mipmaps

LOD values are set according to shininess

Quality is quite low

Not even an approximation

Use as the fastest profile for dynamic PMREM generation
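The box-filter profile amounts to ordinary mip generation. A minimal per-face sketch follows, assuming a square power-of-two face stored as `face[row][column]` with scalar texels; on the GPU a bilinear fetch at the center of each 2x2 block produces the same average.

```python
def downsample_box(face):
    """Halve a square face by averaging each 2x2 block of texels."""
    size = len(face) // 2
    return [[(face[2 * j][2 * i] + face[2 * j][2 * i + 1] +
              face[2 * j + 1][2 * i] + face[2 * j + 1][2 * i + 1]) * 0.25
             for i in range(size)]
            for j in range(size)]

def build_mip_chain(face):
    """Return [level 0, level 1, ...] down to a 1x1 level."""
    chain = [face]
    while len(chain[-1]) > 1:
        chain.append(downsample_box(chain[-1]))
    return chain
```

Each level is then looked up with an LOD chosen from the shininess, which is the part this sketch leaves out.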

Slide 20: Generate PMREM (2)

Gaussian kernel filtering

Apply 2D Gaussian blur to each face

Not physically based

As the blur radius increases, visual artifacts from error in Δω become noticeable

The cube map boundary problem is noticeable

Even when overlapping (described later) is applied to the slow gradation generated by the blur process, banding is perceived on the edges where colors change rapidly, since filtering isn’t performed across edges

Use as the second fastest profile for dynamic PMREM generation
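A per-face Gaussian blur can be sketched as a separable filter. The slide gives no kernel parameters or border handling, so `radius`, `sigma`, and the clamp-to-edge addressing here are assumptions. Clamping keeps the filter inside one face, which mirrors the limitation noted above that filtering is not performed across edges.

```python
import math

def gaussian_kernel(radius, sigma):
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_face(face, radius, sigma):
    """Separable 2D Gaussian blur of one square face with clamped borders."""
    size = len(face)
    kernel = gaussian_kernel(radius, sigma)

    def blur_rows(image):
        return [[sum(kernel[k + radius] * row[min(max(i + k, 0), size - 1)]
                     for k in range(-radius, radius + 1))
                 for i in range(size)]
                for row in image]

    def transpose(image):
        return [list(column) for column in zip(*image)]

    # Horizontal pass, then the same pass on the transposed image (vertical).
    return transpose(blur_rows(transpose(blur_rows(face))))
```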

Slide 22: Generate PMREM (3)

Spherical Phong kernel filtering

The shininess values are converted using the fitting function

The cube map boundary problem still exists

Before the implementation, we expected this filter to solve it

The reason is that, since the centers of adjacent pixels across the edges are not matched, the filtered colors are also not matched
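A spherical Phong kernel filter can be sketched as a brute-force weighted sum over every texel of the cube map. This is an illustration under assumptions: the face orientation table, scalar radiance in `radiance[face][row][column]`, and the use of `shininess` directly as the Phong exponent (the slide first converts it with a fitting function that is not reproduced here).

```python
import math

def _area(x, y):
    return math.atan2(x * y, math.sqrt(x * x + y * y + 1.0))

def texel_solid_angle(i, j, size):
    """Exact solid angle of texel (i, j) on a size x size cube face."""
    x0, x1 = 2.0 * i / size - 1.0, 2.0 * (i + 1) / size - 1.0
    y0, y1 = 2.0 * j / size - 1.0, 2.0 * (j + 1) / size - 1.0
    return _area(x0, y0) - _area(x0, y1) - _area(x1, y0) + _area(x1, y1)

def texel_direction(face, i, j, size):
    """Unit vector through the texel center (assumed face order: +X -X +Y -Y +Z -Z)."""
    u = 2.0 * (i + 0.5) / size - 1.0
    v = 2.0 * (j + 0.5) / size - 1.0
    x, y, z = [(1.0, -v, -u), (-1.0, -v, u), (u, 1.0, v),
               (u, -1.0, -v), (u, -v, 1.0), (-u, -v, -1.0)][face]
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)

def phong_filter(direction, radiance, size, shininess):
    """Radiance averaged with a cos^shininess lobe centered on `direction`."""
    total = 0.0
    weight_sum = 0.0
    for face in range(6):
        for j in range(size):
            for i in range(size):
                d = texel_direction(face, i, j, size)
                cos_a = sum(a * b for a, b in zip(direction, d))
                if cos_a <= 0.0:
                    continue  # texel is behind the lobe
                weight = (cos_a ** shininess) * texel_solid_angle(i, j, size)
                total += weight * radiance[face][j][i]
                weight_sum += weight
    return total / weight_sum
```

Running this once per output texel and per mip level, with a lower shininess for each smaller level, gives the pre-filtered map; the cost grows with the square of the texel count, which is why the slides treat it as brute force.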

Slide 24: Phong kernel implementation (GPU)

Brute force implementation similar to irradiance map generation

In the final implementation, a face is subdivided into 9 rectangles for texture fetch reduction

Faster by 50%

9x6=54 shaders are used for each mip level

Subdivision is not used below 16x16

It becomes ALU bound as the texture cache works efficiently for smaller textures

Slide 25: Phong kernel implementation (CPU)

Offline generation by the tool for static IBL

SH coefficients and PMREM are automatically generated during scene export

For performance, only 64x64x6 PMREM is supported for static IBL

Brute force implementation

All mipmap levels are generated from the top level texture at the same time

Core2 8 hardware threads @ 2.8GHz

64x64x6 : 5.6s

32x32x6 : 0.5s

SSE & multithread

Slide 26: Generate PFREM (4)

Poisson kernel filtering

Implemented a faster version of Phong kernel filtering

Apply a filter of about 160 taps using the mipmap texture one level lower

Quality is compromised even with this process

Many taps are needed for desired quality

Didn’t work as optimization

Didn’t work well with Overlapping process

Not used because of bad quality and performance

Slide 27: Comparisons

Box kernel filter

Gaussian kernel filter

Spherical Phong kernel filter

Spherical Phong kernel filter

Slide 28: Mipmap LOD

Mipmap LOD parameter is calculated for generated PMREM

Select the mip level according to shininess

Using texCUBElod() for each pixel

α is calculated according to the texture size and shininess

With trilinear filtering

Each shininess value corresponding to each mip level is calculated by fitting

Fitted for both Box Filter Kernel and Phong Filter Kernel

Slide 29: Edge overlapping

Need to solve the cubemap boundary problem

No bilinear filtering is applied on the cubemap boundaries of each face with DX9 hardware

Problematic especially for low resolution mipmaps (1x1 or 2x2)

Edge fixup in AMD CubeMapGen

Slide 30: Edge overlapping (1)

Blend adjacent boundaries by 50%

Simplified version of AMD CubeMapGen’s Edge Fixup

Adjacent texels across the boundaries become the same colors

At corners, the colors become the average of the three adjacent texel colors

If 1x1, the color becomes the average of all faces

All texels become the same color

Banding is still noticeable because color gradation velocity varies
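The 50% blend can be sketched on already-extracted boundary texels. Working out which row of which face touches which is omitted, so the inputs here are assumed to be pre-matched: index k of `edge_a` touches index k of `edge_b`. All function names are hypothetical and texels are scalars for brevity.

```python
def blend_edges(edge_a, edge_b):
    """Average two matching boundary rows so both faces share the same colors."""
    blended = [(a + b) * 0.5 for a, b in zip(edge_a, edge_b)]
    return blended, list(blended)

def blend_corner(c0, c1, c2):
    """A cube corner is shared by three faces: use the average of the three texels."""
    return (c0 + c1 + c2) / 3.0

def blend_1x1(face_colors):
    """At the 1x1 mip level every face gets the average of all six faces."""
    return sum(face_colors) / len(face_colors)
```

After this pass, texels on either side of a boundary hold identical values, so the seam itself disappears even though the gradient across it can still change abruptly.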

Slide 32: Edge overlapping (2)

Blend multiple texels

For the next step, blend 2 texels

In order to reduce gradation velocity variation, blend 2 texels by 1/4 and 3/4 ratio

Same approach as CubeMapGen

However, banding is still noticeable in the case where gradation acceleration drastically varies

As the area where banding is noticeable increases, the impression gets worse

Because the blurred area increases, the accuracy of the integration decreases

Worse rendering quality

Slide 33: Edge overlapping (3)

4 texel blend?

More blends don’t make sense according to our research

4 texel blending in CubeMapGen is not so high quality

Moreover, the precision as a signal decreases

Slide 34: Bent Phong filter kernel

This algorithm blends normals instead of colors

Similar to the difference between Gouraud Shading and Phong Shading

The normal from the center of the cube map through the center of the texel is bent by an offset angle

The offset angle is interpolated from zero at the center of the face to a target angle at the edge

The target angle is the angle between the two normals of adjacent faces’ edge texels

The result from just the above steps was improved, but still not perfect

Then, using only 50% of target angle gave a much better result

In the final implementation, the target angle is additionally modified based on the blur radius

Large radius : 100% of target angle used

Small radius : 50% used

Since optimal values for the target angle are image dependent, adjust the values visually instead of by mathematical fitting

Slide 38: Problems with large shininess

In practice with IBL, materials still look glossy even with shininess of 1,000 or 2,000

For mirror-like materials, shininess in the tens of thousands is preferred

Difficult to have high enough resolution mipmap textures, because of memory and performance issues

Adding the mirror reflection option

When this functionality is turned on, the original high resolution texture is automatically chosen

Slide 39: IBL Blending

Blending is necessary when using multiple Image Based Lights

Implemented blending between an SH light and an IBL

Popping was annoying when the blend factor crosses 50%

Not practical

Blending by fetching Radiance Map twice

Diffuse term is blended with SH

For optimization, this process is performed only for the specified attenuation zone

Switching shader

Slide 41: IBL Offset

A little tweak for a local reflection problem with IBL

The usual method

Reflection vector is modified according to the virtual IBL position

c is computed from the IBL size, the object size and another coefficient which is adjusted by hand

With IBL Offset

Slide 42: Matching IBL with point light

In the case where area lighting becomes practical with IBL, punctual lights become problematic

When adjusting specular for punctual lights, artists tend to set smaller (blurrier) shininess values than physically based values

But it is too blurry for IBL

When adjusted for IBLs, it is too sharp for punctual lights

No way for artists to adjust specular without matching

Slide 43: Shininess hack

Not mathematical matching, but matching the result from punctual lights to the result from IBL

Anyhow, this is a hack

The coefficient can’t be precisely adjusted

Depends on the shape of the object lit

Depends on the size of the light source

Shininess value is compensated by the lighting attenuation factor

In the case of a distant light source, the shininess value tends toward the original shininess value

In the case of a close light source, the shininess value tends to be smaller than the original value

Slide 46: HDR IBL Artifact

The rendering result looks unnatural when high intensity light that should be occluded comes from grazing angles

Generally multiply by the ambient occlusion factor

Enough for LDR IBL

The artifact is noticeable when HDR IBL has a big difference of intensities, just like the real world

Multiplying by the ambient occlusion factor isn’t enough

Slide 48: Why does the artifact occur?

Because it is physically based

It is sometimes very noticeable

It looks unnaturally bright on some pixels (edges of objects)

This artifact occurs when all of the following are present: Fresnel effect, high intensity value from HDR IBL, physically based BRDF models, and high shininess values

Example of a material with a refractive index of 1.5

A difference of about 2,500 times!

Slide 49: Multiplying by AO factor

Is not enough

Enough for LDR IBL and non physically based

Unnoticeable

Not enough at all for HDR IBL and physically based

If an AO factor is 0.1,

12.64*0.1=1.264 with the example

Still higher than 1.0

Need a more aggressive occlusion factor
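The arithmetic above can be turned into a bound on the occlusion factor. Taking the example value of 12.64 as given, a short worked sketch:

```latex
\begin{align*}
12.64 \times 0.1 &= 1.264 > 1.0 \\
12.64 \times AO < 1.0 &\iff AO < \frac{1}{12.64} \approx 0.079 \\
12.64 \times 0.01 &= 0.1264
\end{align*}
```

An AO factor of 0.1 therefore still leaves the pixel brighter than 1.0, while a factor near 0.01 brings it well below.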

Slide 50: Novel Occlusion Factor

Need almost zero for occluded cases

Not enough with 0.3 or 0.1 for HDR

Need 0.01 or less

Very small values for non-occluded areas are problematic

Need to compute an occlusion term designed for the specular component

High-order SH?

No more extra parameters!

Slide 51: Specular Occlusion

SO is acquired from AO

Use AO factor as HBAO or SSAO

But precomputed AO factor is not HBAO factor

Using the AO factor as an HBAO factor assumes that the pixel is occluded by the same angle for all horizontal directions

In other words, you can consider that the same occlusion happens for all directions in the case of SSAO

Slide 52: Acquire Specular Occlusion

In the case where a pixel is isotropically occluded from the horizon without gaps

AO factor becomes

Neither conventional AO nor HBAO is isotropic for horizontal directions, but Specular Occlusion forcibly assumes that it is
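The isotropic-horizon assumption can be checked numerically. The sketch below assumes cosine-weighted AO and a horizon raised uniformly by an elevation angle `theta_h` measured from the tangent plane; under those assumptions the integral evaluates to cos²(theta_h), so the horizon angle can be recovered from AO as acos(sqrt(AO)). The function names and the integration step count are illustrative choices.

```python
import math

def ao_from_horizon(theta_h, steps=2000):
    """Cosine-weighted visibility when the horizon is raised uniformly by theta_h."""
    upper = math.pi / 2.0 - theta_h  # visible polar-angle range, from the normal
    d_phi = upper / steps
    total = 0.0
    for k in range(steps):
        phi = (k + 0.5) * d_phi  # midpoint rule
        total += math.cos(phi) * math.sin(phi) * d_phi
    # (1 / pi) * (2 * pi azimuth) * polar integral
    return 2.0 * total

def horizon_from_ao(ao):
    """Invert AO = cos^2(theta_h) to get the assumed horizon elevation."""
    return math.acos(math.sqrt(ao))
```

For example, a horizon raised by 60 degrees gives an AO of about 0.25, and `horizon_from_ao(0.25)` maps back to 60 degrees.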

Slide 53: Specular Occlusion implementation

Required SO (Specular Occlusion) factor should satisfy the following as much as possible

Where $\theta = 0$, $SO = 0$

Where $\theta = \cos^{-1}(AO^{0.5})$, $SO = 0.5$

Specular term becomes 0.5 where the pixel is occluded by a half at the occluded position

Where $\theta = \pi/2$, $SO = 1$

Slide 55: SO implementation (1)

The first equation that satisfies the conditions

Though this satisfies the conditions as Specular Occlusion, it is not physically based

Since Specular Occlusion literally represents the occlusion factor for the specular term, it should be affected by the shininess value

Slide 57: SO implementation (2)

Equation taking into account the shininess value

More physically based than the first one

SO suddenly changes with larger shininess values

High computational cost with Pow

Little visual contribution to the result

Smaller occlusion effect than expected

Slide 59: SO implementation (3)

Optimizing the second equation

The physically based correctness with respect to shininess decreases

Stable as SO doesn’t take into account shininess

Average occlusion effect becomes stronger

Optimized

The balance between quality and cost is good

Slide 61: Ambient specular term computation

Computing the final ambient term

With this equation, the pixel gets black, because the occluded pixel isn’t lit by the ambient lights

In reality, the pixel would be illuminated by some light reflected by some of the objects (interreflection)

The diffuse term has the same issue

AO itself is not such an aggressive occlusion term

Diffuse factor does not have such a high dynamic range

Not problematic

Problematic for the specular term

Unnaturally too dark

Slide 63: AS term computation (1)

Computing pseudo interreflection

Fundamentally, it should take into account light and albedo at the reflected point

Because this implementation is “pseudo”, it takes into account light and albedo at the shading point

The results

Visually, we desired a little more aggressive occlusion effect

Not based on physics

Depending on the position, the rendering result becomes strange

This implementation does not take into account the actual interreflection

Slide 65: AS term computation (2)

Multiplying by the AO factor instead of albedo

Interreflection-like effect becomes smaller, but the occlusion effect becomes stronger

Visually preferable

Eventually, it depends on your preference

It is a good choice to make this an option for artists

Slide 67: AS term computation (3)

Again, the specular term is multiplied by the AO factor

Makes the specular effect for ambient lighting robust

Not based on physics

The SO factor itself is an approximation of an approximation

Adjusted toward a relatively conservative result

It also depends on your preference

Slide 69: AS term computation (4)

The secondary AO factor is only multiplied by the diffuse term

Still your preference

This term is optional according to your preference

Not for a physical reason, but for artistic direction

Slide 71: Applying to the entire specular term

SO factor is also available for the specular term with punctual lights

In our case, this is used for punctual lights

Big advantage with HDR, physically based materials and textures

With Specular Occlusion

Slide 78: Conclusion

When using physically based IBL

Area lighting which is difficult with punctual lights becomes feasible

Soft lighting by a large light source

Sharp lighting by a small light source

Consistent material representation across scenes with either direct or indirect lighting

Reduce hand adjustment by artists

Easy to set physically correct parameters to materials

True HDR representation becomes possible